I am working on a scene in Three.js (easier to see on bl.ocks.org than below) that would benefit greatly from using points as the rendering primitive. There are ~200,000 quads to represent, and representing each quad with a single point would take one quarter as many vertices, which means less vertex data to upload and process, and higher FPS.

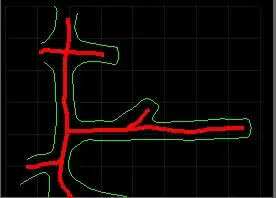

Now I'm trying to make the points larger as the camera gets closer to a given point. If you zoom straight in within the scene below, you should see this works fine. But if you drag the camera to the left or right, the points gradually grow smaller, despite the fact that the camera's proximity to the points seems constant. My intuition says the problem must be in the vertex shader, which sets gl_PointSize:

(function() {

/**

* Generate a scene object with a background color

**/

function getScene() {

var scene = new THREE.Scene();

scene.background = new THREE.Color(0xaaaaaa);

return scene;

}

/**

* Generate the camera to be used in the scene. Camera args:

* [0] field of view: identifies the portion of the scene

* visible at any time (in degrees)

* [1] aspect ratio: identifies the aspect ratio of the

* scene in width/height

* [2] near clipping plane: objects closer than the near

* clipping plane are culled from the scene

* [3] far clipping plane: objects farther than the far

* clipping plane are culled from the scene

**/

function getCamera() {

var aspectRatio = window.innerWidth / window.innerHeight;

var camera = new THREE.PerspectiveCamera(75, aspectRatio, 0.1, 10000);

camera.position.set(0, 1, -6000);

return camera;

}

/**

* Generate the renderer to be used in the scene

**/

function getRenderer() {

// Create the canvas with a renderer

var renderer = new THREE.WebGLRenderer({antialias: true});

// Add support for retina displays

renderer.setPixelRatio(window.devicePixelRatio);

// Specify the size of the canvas

renderer.setSize(window.innerWidth, window.innerHeight);

// Add the canvas to the DOM

document.body.appendChild(renderer.domElement);

return renderer;

}

/**

* Generate the controls to be used in the scene

* @param {obj} camera: the three.js camera for the scene

* @param {obj} renderer: the three.js renderer for the scene

**/

function getControls(camera, renderer) {

var controls = new THREE.TrackballControls(camera, renderer.domElement);

controls.zoomSpeed = 0.4;

controls.panSpeed = 0.4;

return controls;

}

/**

* Generate the points for the scene

* @param {obj} scene: the current scene object

**/

function addPoints(scene) {

// this geometry builds a blueprint and many copies of the blueprint

var geometry = new THREE.InstancedBufferGeometry();

geometry.addAttribute( 'position',

new THREE.BufferAttribute( new Float32Array( [0, 0, 0] ), 3));

// add data for each observation

var n = 10000; // number of observations

var translation = new Float32Array( n * 3 );

var translationIterator = 0;

for (var i=0; i<n*3; i++) {

switch (translationIterator % 3) {

case 0:

translation[translationIterator++] = ((i * 50) % 10000) - 5000;

break;

case 1:

translation[translationIterator++] = Math.floor((i / 160) * 50) - 5000;

break;

case 2:

translation[translationIterator++] = 10;

break;

}

}

geometry.addAttribute( 'translation',

new THREE.InstancedBufferAttribute( translation, 3, 1 ) );

var material = new THREE.RawShaderMaterial({

vertexShader: document.getElementById('vertex-shader').textContent,

fragmentShader: document.getElementById('fragment-shader').textContent,

});

var mesh = new THREE.Points(geometry, material);

mesh.frustumCulled = false; // prevent the mesh from being clipped on drag

scene.add(mesh);

}

/**

* Render!

**/

function render() {

requestAnimationFrame(render);

renderer.render(scene, camera);

controls.update();

}

/**

* Main

**/

var scene = getScene();

var camera = getCamera();

var renderer = getRenderer();

var controls = getControls(camera, renderer);

addPoints(scene);

render();

})();

<html>

<head>

<style>

body { margin: 0; }

canvas { width: 100vw; height: 100vh; display: block; }

</style>

</head>

<body>

<script src='https://cdnjs.cloudflare.com/ajax/libs/three.js/88/three.min.js'></script>

<script src='https://rawgit.com/YaleDHLab/pix-plot/master/assets/js/trackball-controls.js'></script>

<script type='x-shader/x-vertex' id='vertex-shader'>

/**

* The vertex shader's main() function must define `gl_Position`,

* which describes the position of each vertex in clip space coordinates.

*

* To do so, we can use the following variables defined by Three.js:

* attribute vec3 position - stores each vertex's position in local (model) space

* attribute vec2 uv - sets each vertex's texture coordinates

* uniform mat4 projectionMatrix - maps camera space into clip space

* uniform mat4 modelViewMatrix - combines:

* model matrix: maps a point's local coordinate space into world space

* view matrix: maps world space into camera space

*

* `attributes` can vary from vertex to vertex and are defined as arrays

* with length equal to the number of vertices. Each index in the array

* is an attribute for the corresponding vertex. Each attribute must

* contain n_vertices * n_components, where n_components is the length

* of the given datatype (e.g. for a vec2, n_components = 2; for a float,

* n_components = 1)

* `uniforms` are constant across all vertices

* `varyings` are values passed from the vertex to the fragment shader

*

* For the full list of uniforms defined by three, see:

* https://threejs.org/docs/#api/renderers/webgl/WebGLProgram

**/

// set float precision

precision mediump float;

// specify geometry uniforms

uniform mat4 modelViewMatrix;

uniform mat4 projectionMatrix;

// to get the camera attributes:

uniform vec3 cameraPosition;

// blueprint attributes

attribute vec3 position; // sets the blueprint's vertex positions

// instance attributes

attribute vec3 translation; // x, y, z translation offsets for an instance

void main() {

// set point position

vec3 pos = position + translation;

vec4 projected = projectionMatrix * modelViewMatrix * vec4(pos, 1.0);

gl_Position = projected;

// use the delta between the point position and camera position to size point

float xDelta = pow(projected[0] - cameraPosition[0], 2.0);

float yDelta = pow(projected[1] - cameraPosition[1], 2.0);

float zDelta = pow(projected[2] - cameraPosition[2], 2.0);

float delta = pow(xDelta + yDelta + zDelta, 0.5);

gl_PointSize = 20000.0 / delta;

}

</script>

<script type='x-shader/x-fragment' id='fragment-shader'>

/**

* The fragment shader's main() function must define `gl_FragColor`,

* which describes the pixel color of each pixel on the screen.

*

* To do so, we can use uniforms passed into the shader and varyings

* passed from the vertex shader.

*

* Attempting to read a varying not generated by the vertex shader will

* throw a warning but won't prevent shader compiling.

**/

precision highp float;

void main() {

gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);

}

</script>

</body>

</html>

Does anyone see what I'm missing? Any help others can offer would be greatly appreciated!
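To make the intent concrete, here is a minimal sketch in plain JavaScript (not GLSL) of the sizing rule I am after: the point size should be inversely proportional to the straight-line distance between the camera and the point, with both positions expressed in the same coordinate space. The helper name `pointSizeFor` and its `scale` parameter are hypothetical and not part of the scene code; the sample positions are the starting camera position from `getCamera()` and a point on the z = 10 plane.

```javascript
// Hypothetical helper, not part of the scene code above.
// Returns a point size that is inversely proportional to the Euclidean
// distance between the camera and a point. Both positions are [x, y, z]
// arrays and are assumed to be in the same (world) coordinate space.
function pointSizeFor(cameraPosition, pointPosition, scale) {
  var dx = pointPosition[0] - cameraPosition[0];
  var dy = pointPosition[1] - cameraPosition[1];
  var dz = pointPosition[2] - cameraPosition[2];
  var distance = Math.sqrt(dx * dx + dy * dy + dz * dz);
  // Note: a distance of 0 would yield Infinity; this sketch does not guard it.
  return scale / distance;
}

// Camera at its starting position, point directly in front of it.
var size = pointSizeFor([0, 1, -6000], [0, 1, 10], 20000.0);
console.log(size);
```

With this rule, a point should only change size when its actual distance to the camera changes, which is the behavior I expected from the shader.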