I know this has already been answered, but here is a working Python solution.

First, I found this thread explaining how to remove white pixels:

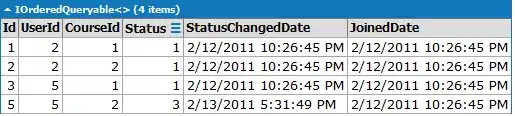

The Result:

Another test image:

Edit

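This is a much better and shorter method. I looked into it after @ZdaR commented on looping over an image's matrix. Instead of visiting every pixel in Python, it uses the thresholded image as a boolean mask and blackens all white pixels in a single NumPy assignment.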
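To show that the mask version does the same job as the loop, here is a minimal NumPy-only sketch on a tiny synthetic image (no OpenCV or image file needed). It assumes, as in the code, that a pixel counts as "white" when its grayscale value is above 240, which is what `cv2.threshold(gray, 240, 255, cv2.THRESH_BINARY)` marks as 255. The helper names `blacken_loop` and `blacken_mask` are hypothetical, and the `gray` values are hand-computed stand-ins for `cv2.cvtColor` output.

```python
import numpy as np

def blacken_loop(img, gray, thresh_val=240):
    """Per-pixel loop in the spirit of the old code (slow on large images)."""
    out = img.copy()
    rows, cols = gray.shape
    for r in range(rows):
        for c in range(cols):
            if gray[r, c] > thresh_val:
                out[r, c] = 0  # scalar 0 broadcasts to all three BGR channels
    return out

def blacken_mask(img, gray, thresh_val=240):
    """Vectorized version in the spirit of the updated code."""
    out = img.copy()
    # gray > thresh_val is a 2-D boolean mask, so it selects whole BGR pixels
    out[gray > thresh_val] = 0
    return out

# Tiny 2x3 BGR image plus hand-computed grayscale values for each pixel
img = np.array([[[255, 255, 255], [10, 20, 30], [250, 245, 248]],
                [[0, 0, 0], [255, 255, 255], [100, 100, 100]]], dtype=np.uint8)
gray = np.array([[255, 22, 246],
                 [0, 255, 100]], dtype=np.uint8)

loop_result = blacken_loop(img, gray)
mask_result = blacken_mask(img, gray)
print(np.array_equal(loop_result, mask_result))  # True
```

Both versions blacken the three pixels whose gray value exceeds 240; the mask version just lets NumPy do the iteration in C.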

[Updated Code]

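```python
import cv2

img = cv2.imread("Images/test.png")
if img is None:
    raise FileNotFoundError("Could not read Images/test.png")

# Grayscale copy, used only to decide which pixels count as "white"
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Pixels brighter than 240 become 255 in thresh, everything else becomes 0
_, thresh = cv2.threshold(gray, 240, 255, cv2.THRESH_BINARY)

# Boolean mask indexing: paint every white pixel black in one step
img[thresh == 255] = 0

# Erode (minimum filter) with a 5x5 elliptical kernel; bright regions shrink,
# which helps trim thin light edges left around the blackened areas
kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))
erosion = cv2.erode(img, kernel, iterations=1)

cv2.namedWindow("image", cv2.WINDOW_NORMAL)
cv2.imshow("image", erosion)
cv2.waitKey(0)
cv2.destroyAllWindows()
```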

Source

[Old Code]

```python
import cv2
import numpy as np

img = cv2.imread("Images/test.png")

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 240, 255, cv2.THRESH_BINARY)

black_px = np.asarray([0, 0, 0])

(row, col) = thresh.shape
img_array = np.array(img)  # copy, so the original image is left untouched

# Slow: visit every pixel in Python and blacken the ones marked white
for r in range(row):
    for c in range(col):
        # thresh is single-channel, so each entry is a scalar (0 or 255)
        if thresh[r][c] == 255:
            img_array[r][c] = black_px

kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))
erosion = cv2.erode(img_array, kernel, iterations=1)

cv2.namedWindow("image", cv2.WINDOW_NORMAL)
cv2.imshow("image", erosion)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

Other Sources used:

OpenCV Morphological Transformations
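Erosion, as covered in that tutorial, replaces each pixel with the minimum over the neighbourhood covered by the structuring element, so small bright specks and thin bright edges shrink or vanish. Below is a minimal NumPy sketch of that definition for a single-channel image. It is not OpenCV's implementation; the function name `erode_min` is hypothetical, it uses a small 3x3 cross instead of the 5x5 ellipse from the code above, and it pads the border with the dtype's maximum so out-of-bounds positions never lower the minimum.

```python
import numpy as np

def erode_min(img, kernel):
    """Grayscale erosion: each output pixel is the minimum of the input pixels
    under the non-zero entries of `kernel`, centred on that pixel."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    # Pad with the dtype's maximum so the border never wins the minimum
    fill = np.iinfo(img.dtype).max
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="constant",
                    constant_values=fill)
    mask = kernel.astype(bool)
    out = np.empty_like(img)
    rows, cols = img.shape
    for r in range(rows):
        for c in range(cols):
            window = padded[r:r + kh, c:c + kw]
            out[r, c] = window[mask].min()
    return out

# 3x3 cross-shaped structuring element (hypothetical; the answer uses a 5x5 ellipse)
cross = np.array([[0, 1, 0],
                  [1, 1, 1],
                  [0, 1, 0]], dtype=np.uint8)

# A 3x3 bright block inside a dark 5x5 image
img = np.zeros((5, 5), dtype=np.uint8)
img[1:4, 1:4] = 255

eroded = erode_min(img, cross)
print(eroded)  # only the centre pixel, eroded[2, 2], stays 255
```

The real `cv2.erode` call applies the same idea to each channel of the BGR image independently, and is far faster than this Python loop.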