I'm comparing the performance of two haskell programs running the same computation.

The first one is sequential:

main :: IO()

main = putStr $ unlines . map (show . solve) $ [100..107]

where solve x = pow x (10^7) (982451653)

The second one uses Control.Parallel.Strategies:

import Control.Parallel.Strategies

main :: IO()

main = putStr $ unlines . parMap rdeepseq (show . solve) $ [100..107]

where solve x = pow x (10^7) (982451653)

In both cases, pow is the modular exponentiation naively implemented as:

pow :: Int -> Int -> Int -> Int

pow a 0 m = 1

pow a b m = a * (pow a (b-1) m) `mod` m

The sequential program runs in about 3 seconds using, as expected, 100% CPU.

$ stack ghc seq.hs -- -O2

$ \time -f "%e s - %P" ./seq > /dev/null

2.96 s - 100%

The parallel program also runs in about 3 seconds using 100% CPU when limited to a single core.

$ stack ghc par.hs -- -O2 -threaded

$ \time -f "%e s - %P" ./par +RTS -N1 > /dev/null

3.14 s - 99%

But when I ran it on 4 cores, I did not observe the performance gain I was expected:

$ \time -f "%e s - %P" ./par +RTS -N4 > /dev/null

3.31 s - 235%

Even more surprising, the sequential program uses more than 100% CPU when run on several cores:

$ stack ghc seq.hs -- -O2 -threaded

$ \time -f "%e s - %P" ./seq +RTS -N4 > /dev/null

3.26 s - 232%

How can those results be explained?

EDIT - As advised by @RobertK and @Yuras, I replaced the rdeeseq by rpar and it did fix the initial issue. However, the performance is still much less than what I expected:

$ stack ghc par.hs -- -O2 -threaded

$ \time -f "%e s - %P" ./par +RTS -N1 > /dev/null

3.12 s - 99%

$ \time -f "%e s - %P" ./par +RTS -N4 > /dev/null

1.91 s - 368%

The execution time is barely divided by two even though the 4 cores are running more than 90% of the time on average.

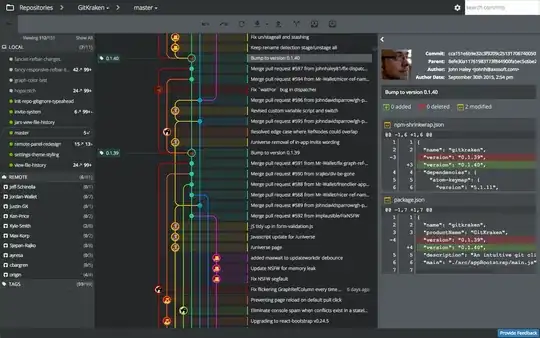

Also, some parts of the threadscope graph look very sequential: