I have an HDF file and want to extract data from it, but for some reason I can't extract the latitude and longitude values.

The code I tried is:

```python
from pyhdf import SD

hdf = SD.SD('MOD10C2.A2001033.006.2016092173057.hdf')
data = hdf.select('Eight_Day_CMG_Snow_Cover')
lat = (hdf.select('Latitude'))[:]
```

It gives me an error:

```
HDF4Error: select: non-existent dataset
```
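The `select: non-existent dataset` error means that no SDS in the file has exactly that name, so a useful first step is to list what the file actually contains. pyhdf's `SD.datasets()` returns a dict mapping each SDS name to `(dim_names, dim_lengths, data_type, index)`. The helpers below are a minimal sketch that works on that dict; `find_sds` and `describe_sds` are hypothetical names, not part of pyhdf, and the pyhdf calls are shown only in comments so the sketch runs without the file.

```python
# Sketch: inspect the SDS names in an HDF4 file.
# `datasets` is assumed to be the dict returned by pyhdf's SD.datasets(),
# i.e. {name: (dim_names, dim_lengths, data_type, index)}.


def find_sds(datasets, keyword):
    """Return the SDS names that contain `keyword`, ignoring case."""
    key = keyword.lower()
    return sorted(name for name in datasets if key in name.lower())


def describe_sds(datasets):
    """Return one summary line per SDS, ordered by SDS index."""
    lines = []
    for name, (dims, shape, _dtype, index) in sorted(
        datasets.items(), key=lambda item: item[1][3]
    ):
        lines.append(f"{index}: {name} shape={shape} dims={dims}")
    return lines


# Typical use with pyhdf (not executed here, needs the .hdf file):
#   from pyhdf import SD
#   hdf = SD.SD('MOD10C2.A2001033.006.2016092173057.hdf')
#   print("\n".join(describe_sds(hdf.datasets())))
#   print(find_sds(hdf.datasets(), 'lat'))  # [] means no SDS name contains "lat"
```

An empty result from `find_sds(..., 'lat')` would suggest the file simply has no latitude SDS, rather than a typo in the name.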

I also tried:

```python
lat = (hdf.select('Lat'))[:]
```

That still does not work.
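If neither name exists, the file may not store coordinates at all, and they would have to be computed from the grid definition. A minimal sketch, assuming the snow-cover array is a global 0.05° Climate Modeling Grid of 3600 rows × 7200 columns whose pixel (0, 0) has its upper-left corner at 90°N, 180°W (these values are assumptions to verify against the file's own metadata). `pixel_center`, `lat_lon_axes` and `pixel_index` are hypothetical helpers.

```python
import math

# ASSUMED grid geometry for a global 0.05-degree CMG; check it against
# the file's StructMetadata.0 before relying on it.
ULLAT, ULLON = 90.0, -180.0  # upper-left corner of pixel (0, 0), degrees
RES = 0.05                   # pixel size in degrees
NROWS, NCOLS = 3600, 7200


def pixel_center(row, col, res=RES):
    """Latitude/longitude of the centre of pixel (row, col)."""
    lat = ULLAT - (row + 0.5) * res
    lon = ULLON + (col + 0.5) * res
    return lat, lon


def lat_lon_axes(nrows=NROWS, ncols=NCOLS, res=RES):
    """1-D latitude (north to south) and longitude (west to east) axes."""
    lats = [ULLAT - (i + 0.5) * res for i in range(nrows)]
    lons = [ULLON + (j + 0.5) * res for j in range(ncols)]
    return lats, lons


def pixel_index(lat, lon, res=RES, nrows=NROWS, ncols=NCOLS):
    """Row/column of the pixel containing (lat, lon), clamped to the grid."""
    row = math.floor((ULLAT - lat) / res)
    col = math.floor((lon - ULLON) / res)
    return min(max(row, 0), nrows - 1), min(max(col, 0), ncols - 1)


# Typical use with pyhdf (not executed here):
#   snow = hdf.select('Eight_Day_CMG_Snow_Cover')[:]
#   r, c = pixel_index(46.0, 8.0)
#   print(snow[r, c], pixel_center(r, c))
```

The `+ 0.5` offset gives cell centres; drop it if you need the upper-left edge of each cell instead.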

The data can be found at this link.

Any help would be highly appreciated!

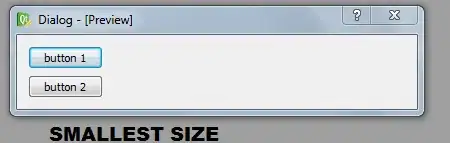

data format looks like:

The full error I got is:

```
---------------------------------------------------------------------------
HDF4Error Traceback (most recent call last)
~/anaconda3/lib/python3.6/site-packages/pyhdf/SD.py in select(self, name_or_index)
1635 try:
-> 1636 idx = self.nametoindex(name_or_index)
1637 except HDF4Error:

~/anaconda3/lib/python3.6/site-packages/pyhdf/SD.py in nametoindex(self, sds_name)
1528 sds_idx = _C.SDnametoindex(self._id, sds_name)
-> 1529 _checkErr('nametoindex', sds_idx, 'non existent SDS')
1530 return sds_idx

~/anaconda3/lib/python3.6/site-packages/pyhdf/error.py in _checkErr(procName, val, msg)
22 err = "%s : %s" % (procName, msg)
---> 23 raise HDF4Error(err)

HDF4Error: nametoindex : non existent SDS

During handling of the above exception, another exception occurred:

HDF4Error Traceback (most recent call last)
<ipython-input-11-21e6a4fdf8eb> in <module>()
----> 1 hdf.select('Lat')

~/anaconda3/lib/python3.6/site-packages/pyhdf/SD.py in select(self, name_or_index)
1636 idx = self.nametoindex(name_or_index)
1637 except HDF4Error:
-> 1638 raise HDF4Error("select: non-existent dataset")
1639 id = _C.SDselect(self._id, idx)
1640 _checkErr('select', id, "cannot execute")

HDF4Error: select: non-existent dataset
```
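The traceback shows the lookup failing inside `SDnametoindex`, i.e. the HDF4 library itself finds no SDS called `Lat`. In HDF-EOS2 grid files the grid geometry is usually described in the `StructMetadata.0` global attribute, which pyhdf exposes through `SD.attributes()`. Below is a minimal sketch of reading the grid size and corners from that text, assuming a single geographic grid whose corners are stored in HDF-EOS packed degrees-minutes-seconds format (DDDMMMSSS.SS) and laid out as `XDim=…`, `UpperLeftPointMtrs=(…)` lines. `grid_geometry` and `packed_dms_to_deg` are hypothetical helper names.

```python
import re


def packed_dms_to_deg(value):
    """Convert a packed DMS number (DDDMMMSSS.SS) to decimal degrees."""
    sign = -1.0 if value < 0 else 1.0
    value = abs(value)
    degrees = value // 1_000_000
    minutes = (value % 1_000_000) // 1_000
    seconds = value % 1_000
    return sign * (degrees + minutes / 60.0 + seconds / 3600.0)


def grid_geometry(struct_metadata):
    """Extract grid size, corners and resolution from StructMetadata.0 text.

    ASSUMES one geographic grid with corners in packed DMS, as HDF-EOS2
    uses for that projection; the regexes match the first grid only.
    """
    def groups(pattern):
        match = re.search(pattern, struct_metadata)
        if match is None:
            raise ValueError(f"pattern not found: {pattern}")
        return match.groups()

    xdim = int(groups(r"XDim=(\d+)")[0])
    ydim = int(groups(r"YDim=(\d+)")[0])
    # Corner tuples are stored as (x, y), i.e. (longitude, latitude).
    ul = groups(r"UpperLeftPointMtrs=\(([-\d.]+),([-\d.]+)\)")
    lr = groups(r"LowerRightMtrs=\(([-\d.]+),([-\d.]+)\)")
    ul_lon, ul_lat = (packed_dms_to_deg(float(v)) for v in ul)
    lr_lon, lr_lat = (packed_dms_to_deg(float(v)) for v in lr)
    return {
        "xdim": xdim, "ydim": ydim,
        "ul_lon": ul_lon, "ul_lat": ul_lat,
        "lr_lon": lr_lon, "lr_lat": lr_lat,
        "res_x": (lr_lon - ul_lon) / xdim,
        "res_y": (ul_lat - lr_lat) / ydim,
    }


# Typical use with pyhdf (not executed here):
#   from pyhdf import SD
#   hdf = SD.SD('MOD10C2.A2001033.006.2016092173057.hdf')
#   print(grid_geometry(hdf.attributes()['StructMetadata.0']))
```

The returned corners and resolution can then be used to build the latitude/longitude axes instead of hard-coding them.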