I'm working with a large pandas DataFrame of roughly 17 million rows by 5 columns.

I'm then running a regression on a moving window within this DataFrame. I'm attempting to parallelize this computationally intensive part by passing the (static) DataFrame to multiple processes.

To simplify the example I'll assume I'm just working on the DataFrame 1000 times:

    import multiprocessing as mp

    def helper_func(input_tuple):
        # Runs a regression on the DataFrame and outputs the
        # results (coefficients)
        ...

    if __name__ == '__main__':
        # For simplicity's sake let's assume the input tuple is
        # just a list of copies of the DataFrame
        # (df is the DataFrame described above)
        input_tuples = [df for x in range(1000)]
        pl = mp.Pool(10)
        jobs = pl.map_async(helper_func, input_tuples)
        pl.close()
        result = jobs.get()

While tracking resource usage on repeated runs, I've noticed that memory usage climbs steadily and tops out at 100% right before the process completes. Once it finishes, memory drops back to whatever it was before the run.

To give actual numbers, I can see the parent process using about 450 MB, while each of the workers is using around 1-2 GB of memory.
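For anyone who wants to reproduce numbers like these from inside the processes rather than from a system monitor, here is a minimal sketch using only the standard library. The names `peak_rss_mb` and `log_memory` are hypothetical helpers made up for illustration, and the `resource` module is only available on Unix-like systems.

```python
import os
import resource
import sys


def peak_rss_mb():
    """Return this process's peak resident set size in MB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def log_memory(label):
    """Print the current PID and its peak memory, tagged with a label."""
    print(f"[pid {os.getpid()}] {label}: peak RSS {peak_rss_mb():.1f} MB")


if __name__ == "__main__":
    log_memory("parent at start")
```

Calling `log_memory` at the start and end of `helper_func` would show, per worker PID, whether the peak keeps rising from task to task. Note that this reports the peak, not the current usage, so it never goes down.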

I'm worried (perhaps unnecessarily) that this could cause memory-related problems. Is there a way to reduce the memory held by the child processes? It is not clear to me why their memory usage keeps increasing (and is substantially larger than that of the parent process that holds the DataFrame).
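One variation that might cut down on the copying, as a rough sketch rather than a confirmed fix: hand the data to each worker once through the pool's `initializer`, and send only the window bounds as task arguments, so that each task no longer carries a pickled copy of the whole DataFrame. To keep the snippet runnable without pandas, a plain list of `(x, y)` rows stands in for the DataFrame; with a real DataFrame the slice would be something like `.iloc[start:stop]`. The names `init_worker`, `regress_window`, and `run`, the window size, and the chunk size are all assumptions made for illustration.

```python
import multiprocessing as mp

# Set once per worker process by init_worker; stands in for the DataFrame.
_shared_rows = None


def init_worker(rows):
    """Store the static data in a module-level global inside each worker."""
    global _shared_rows
    _shared_rows = rows


def regress_window(bounds):
    """Fit y = slope * x + intercept by least squares on rows[start:stop]."""
    start, stop = bounds
    window = _shared_rows[start:stop]
    n = len(window)
    mean_x = sum(x for x, _ in window) / n
    mean_y = sum(y for _, y in window) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in window)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in window)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def run(rows, window_size, processes=2):
    """Run the regression over every moving window using a worker pool."""
    # Each task is just a pair of integers, so very little is pickled per task.
    tasks = [(i, i + window_size) for i in range(len(rows) - window_size + 1)]
    with mp.Pool(processes, initializer=init_worker, initargs=(rows,)) as pool:
        # imap yields results in order as they complete.
        return list(pool.imap(regress_window, tasks, chunksize=64))


if __name__ == "__main__":
    rows = [(float(i), 2.0 * i + 1.0) for i in range(1000)]
    coefficients = run(rows, window_size=50)
    print(coefficients[0])
```

The idea behind the design is that the large object crosses the process boundary at most once per worker instead of once per task, while the per-task payload and the returned coefficients stay small. Whether this accounts for the growth I'm seeing is an assumption I have not verified.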

Edit: I have tried other workarounds, such as setting maxtasksperchild (per the question "High Memory Usage Using Python Multiprocessing"), without much success.

Edit2:

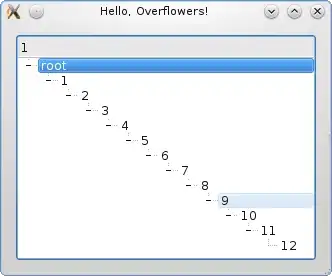

The screenshot above shows what memory usage looks like while running. There are small peaks and dips (where I assume memory is being released?); however, it reaches 100% without fail at the very end of the run.