We have a .NET 4.6.1 ASP.NET Web Forms app running on an Azure App Service. I say Web Forms, but the app also has Web Api 2 baked in, as well as two WebJob projects performing several tasks each.

We use StackExchange.Redis for caching. We also use Redis for SessionState. I mention this, because the problems began with a build up of Redis connections that would not die unless we restarted the ASP.NET app. We use the Lazy pattern for resharing the ConnectionMultiplexer. Local tests confirmed that the connection was indeed being shared within individual requests.

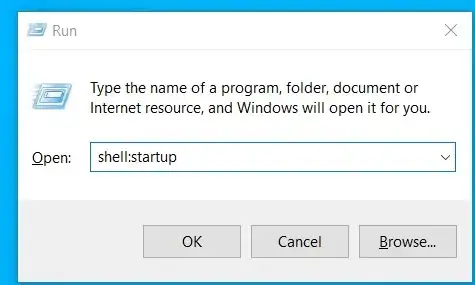

Reasonably confident with our Redis configuration, we started looking at metrics related to the ASP.NET app. The thread count mirrors our Redis client graphs. The thing is, I don't know what is normal when it comes to thread counts. But I would expect them to dispose/disappear/die over the course of a couple of days. Not build up.

We use Async/Await extensively, but we don't typically tackle threading directly. Last line may sound a little naive, sorry don't know how else to put it. We tend see our busiest times during the working day, with minimal activity over night.

We're at a loss, what are we doing wrong? Am I right, should we see the thread count drop with reduced activity on the site? Maybe I've already said something that you can point at and say "What the hell are you doing?", and that'd be great. What I'm really after though, is suggestions for what we might be responsible for this build up of threads and how we might manage it going forward.

Update 03/08

The thread chart in the second image, is being aggregated by SUM. If you change the aggregation to MIN, MAX, or AVG, you see a much more reasonable thread count that demonstrates threads being disposed of correctly. This is obviously a big relief for the web app.

I'm assuming, though my googling has not managed to confirm it, that the SUM indicates a total of threads created since the last app restart. If I'm correct in that assumption, the fact that the SUM of web app thread count matches the AVG Redis client count, confirms our original suspicion that not only is our Connection Multiplexer not sharing connections, they're not being closed off when the operation is finished.

This is our Redis class, which looks the same as the other 1000 examples on the interweb, at least as far as I can tell:

Imports System.Configuration

Imports StackExchange.Redis

Public Class RedisCache

Private Shared ReadOnly Property LazyConnection As New Lazy(Of ConnectionMultiplexer)(Function()

Dim cacheConnection = ConfigurationManager.AppSettings("CacheConnection")

Dim multiplexer = ConnectionMultiplexer.Connect(cacheConnection.ToString())

multiplexer.PreserveAsyncOrder = False

Return multiplexer

End Function)

Public Shared ReadOnly Property Connection As ConnectionMultiplexer

Get

Return LazyConnection.Value

End Get

End Property

Public Shared ReadOnly Property UseCache As Boolean

Get

Return ConfigurationManager.AppSettings("CacheConnection") IsNot Nothing

End Get

End Property

End Class

As I've already mentioned, we also use Redis for Session State, for which we have a very basic config:

<sessionState

mode="Custom"

customProvider="RedisSessionProvider"

<providers>

<add name="RedisSessionProvider"

type="Microsoft.Web.Redis.RedisSessionStateProvider"

port="6380"

host="***"

accessKey="***"

applicationName="***"

retryTimeoutInMilliseconds="5000"

ssl="true"/>

</providers>

</sessionState>

Does anyone have any ideas what is prolonging the life of these clients?

Update 03/08 Part Two

I've been thinking about how we can potentially narrow down the issue. Easiest place to start is with "is it Cache, Session or Redis as whole?" To that end, we're going to spin up an extra Redis server, which in the next release will have either the Cache or the Session State pointed at it. Hopefully one of those servers will exhibit the same behaviour that we're trying to fix, while the other behaves, umm, better. The former of the two would be where we focus our efforts.

One other little thing I've done, in our test environment, is to create a test property, that generates a new GUID, in the same way the Connection property works:

Private Shared ReadOnly Property LazyGuid As New Lazy(Of Guid)(Function() Guid.NewGuid())

Public Shared ReadOnly Property Guid As Guid

Get

Return LazyGuid.Value

End Get

End Property

I've then created a few calls from various parts of the web app, to the new GUID property, as well as to the existing Redis code:

<Route("guid"), HttpGet>

Public Async Function GetGuid() As Task(Of Tuple(Of String, String))

Return New Tuple(Of String, String)(RedisCache.Guid.ToString(), If(RedisCache.UseCache, RedisCache.Connection.ClientName, Nothing))

End Function

<Route("guid"), HttpPost>

Public Async Function PostForGuid() As Task(Of Tuple(Of String, String))

Return New Tuple(Of String, String)(RedisCache.Guid.ToString(), If(RedisCache.UseCache, RedisCache.Connection.ClientName, Nothing))

End Function

<Route("guid/sync"), HttpGet>

Public Function GetSyncGuid() As Tuple(Of String, String)

Return New Tuple(Of String, String)(RedisCache.Guid.ToString(), If(RedisCache.UseCache, RedisCache.Connection.ClientName, Nothing))

End Function

The examples above, along with some calls embedded in ASPX page, across multiple sessions (and time zones), yielded the same results. So at the moment I strongly suspect that the fault lies either with the RedisSessionStateProvider, or at least our use of it.

Update 13/08

Firstly, I've been running two instances of Redis on the test server for about a week. One taking Session requests, the other taking requests from the RedisCache class above. Both are sitting at a steady 15-20 connections.

The main point of this update though. I had the idea to run CLIENT LIST on the production Redis. With the client count sitting at around 1.3k, I managed to grab a sample of 850 client rows.

Out of the entire sample, there was one client that had a last command of GET, the rest were UNSUBSCRIBE or INFO. Ages range from 5000 seconds to 65,000 seconds. The idle times range from 0 to 60 seconds. I understand that the UNSUBSCRIBE commands relate to StackExchange.Redis' handling of pub/sub functionality which, to my knowledge, I'm not using.

Why are these clients staying active and multiplying??

id=1367825 addr=*** fd=45 name=*** age=465516 idle=56 flags=N db=0 sub=0 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=info numops=7680

id=1319911 addr=*** fd=611 name=*** age=489772 idle=48 flags=N db=0 sub=0 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=info numops=8082

id=1409149 addr=*** fd=477 name=*** age=444591 idle=34 flags=N db=0 sub=1 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=unsubscribe numops=7328

id=1319912 addr=*** fd=508 name=*** age=489772 idle=38 flags=N db=0 sub=1 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=unsubscribe numops=8072

id=2169495 addr=*** fd=954 name=*** age=59035 idle=56 flags=N db=0 sub=0 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=info numops=984

id=2169496 addr=*** fd=955 name=*** age=59035 idle=56 flags=N db=0 sub=0 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=info numops=984

id=1219863 addr=*** fd=557 name=*** age=540498 idle=38 flags=N db=0 sub=0 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=info numops=8917

id=1032642 addr=*** fd=594 name=*** age=635373 idle=56 flags=N db=0 sub=1 psub=0 multi=-1 qbuf=0 qbuf-free=0 obl=0 oll=0 omem=0 ow=0 owmem=0 events=r cmd=unsubscribe numops=10474

Update 14/08

A potentially positive development. I logged a ticket on Github. Sounds like a issue with our symptoms has been fixed in 2.0. Clients that never die