I've been piecing together code from the internet and Stack Overflow to get a simple camera app working. However, when I rotate my phone to landscape, I see two problems:

1) The camera preview layer only takes up half the screen.

2) The camera's orientation doesn't change; it stays fixed in portrait.

My constraints seem to be fine: when I look at the UIImageView (which hosts the camera's preview layer) in landscape on various simulators, the image view is stretched properly. So I'm not sure why the camera preview layer only stretches across half the screen.

(ImagePreview = Camera preview layer)

As for the orientation problem, this seems to be a coding problem. I looked up some posts on Stack Overflow, such as the one below, but I didn't see anything for Swift 4. I'm not sure if there is an easy way to do this in Swift 4.

iPhone AVFoundation camera orientation
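For reference, the approach in posts like that one usually comes down to setting the orientation on the preview layer's connection. A minimal Swift 4 style sketch follows; the helper names `videoOrientation(for:)` and `updatePreviewOrientation(of:to:)` are hypothetical and not part of my code, and `videoOrientation` is the older API (newer SDKs offer `videoRotationAngle` instead):

```swift
import AVFoundation
import UIKit

// Hypothetical helper: translate the interface orientation into the
// matching capture orientation. Unknown values fall back to portrait.
func videoOrientation(for interfaceOrientation: UIInterfaceOrientation) -> AVCaptureVideoOrientation {
    switch interfaceOrientation {
    case .portraitUpsideDown: return .portraitUpsideDown
    case .landscapeLeft: return .landscapeLeft
    case .landscapeRight: return .landscapeRight
    default: return .portrait
    }
}

// Hypothetical helper: apply the orientation to the preview layer's
// connection, if the connection exists and supports it.
func updatePreviewOrientation(of previewLayer: AVCaptureVideoPreviewLayer,
                              to interfaceOrientation: UIInterfaceOrientation) {
    guard let connection = previewLayer.connection,
          connection.isVideoOrientationSupported else { return }
    connection.videoOrientation = videoOrientation(for: interfaceOrientation)
}
```

This would presumably be called whenever the interface rotates, for example from `viewDidLayoutSubviews()`, passing `UIApplication.shared.statusBarOrientation` as the current interface orientation.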

Here is some of the code from my camera app:

```swift
import Foundation
import AVFoundation
import UIKit

class CameraSetup {

    var captureSession = AVCaptureSession()
    var frontCam: AVCaptureDevice?
    var backCam: AVCaptureDevice?
    var currentCam: AVCaptureDevice?
    var captureInput: AVCaptureDeviceInput?
    var captureOutput: AVCapturePhotoOutput?
    var cameraPreviewLayer: AVCaptureVideoPreviewLayer?

    func captureDevice() {
        let discoverySession = AVCaptureDevice.DiscoverySession(deviceTypes: [.builtInWideAngleCamera], mediaType: AVMediaType.video, position: .unspecified)

        for device in discoverySession.devices {
            if device.position == .front {
                frontCam = device
            } else if device.position == .back {
                backCam = device
            }

            do {
                try backCam?.lockForConfiguration()
                backCam?.focusMode = .autoFocus
                backCam?.exposureMode = .autoExpose
                backCam?.unlockForConfiguration()
            } catch let error {
                print(error)
            }
        }
    }

    func configureCaptureInput() {
        currentCam = backCam!
        do {
            captureInput = try AVCaptureDeviceInput(device: currentCam!)
            if captureSession.canAddInput(captureInput!) {
                captureSession.addInput(captureInput!)
            }
        } catch let error {
            print(error)
        }
    }

    func configureCaptureOutput() {
        captureOutput = AVCapturePhotoOutput()
        captureOutput!.setPreparedPhotoSettingsArray([AVCapturePhotoSettings(format: [AVVideoCodecKey: AVVideoCodecType.jpeg])], completionHandler: nil)
        if captureSession.canAddOutput(captureOutput!) {
            captureSession.addOutput(captureOutput!)
        }
        captureSession.startRunning()
    }

    // configurePreviewLayer(view:) is shown separately below.
}
```

Here is the PreviewLayer function:

```swift
func configurePreviewLayer(view: UIView) {
    cameraPreviewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
    cameraPreviewLayer?.videoGravity = AVLayerVideoGravity.resize
    cameraPreviewLayer?.zPosition = -1
    view.layer.insertSublayer(cameraPreviewLayer!, at: 0)
    cameraPreviewLayer?.frame = view.bounds
}
```
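One thing worth noting about this function: it assigns the layer's frame only once, and a manually added sublayer is not resized by Auto Layout when its host view changes size. That may explain why the layer keeps its portrait width after rotation. A minimal sketch of re-applying the frame after each layout pass, where `resizePreviewLayer(_:toFill:)` is a hypothetical helper and `cameraSetup` / `imagePreview` are assumed property names:

```swift
import UIKit

// Hypothetical helper: make the layer fill the given view again.
// Implicit animations are disabled so the layer snaps to the new
// size instead of animating toward it.
func resizePreviewLayer(_ previewLayer: CALayer, toFill view: UIView) {
    CATransaction.begin()
    CATransaction.setDisableActions(true)
    previewLayer.frame = view.bounds
    CATransaction.commit()
}

// Possible call site in the view controller (names are assumptions):
//
// override func viewDidLayoutSubviews() {
//     super.viewDidLayoutSubviews()
//     if let layer = cameraSetup.cameraPreviewLayer {
//         resizePreviewLayer(layer, toFill: imagePreview)
//     }
// }
```

`viewDidLayoutSubviews()` runs after the constraints have been applied, including after a rotation, so `view.bounds` should already reflect the landscape size at that point.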

EDIT:

As suggested, I moved the view.bounds line up one line:

```swift
func configurePreviewLayer(view: UIView) {
    cameraPreviewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
    cameraPreviewLayer?.videoGravity = AVLayerVideoGravity.resize
    cameraPreviewLayer?.zPosition = -1
    cameraPreviewLayer?.frame = view.bounds
    view.layer.insertSublayer(cameraPreviewLayer!, at: 0)
}
```

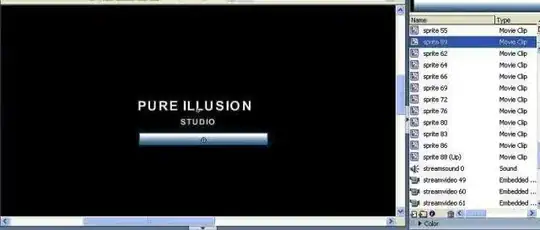

However, the problem still persists:

Here is the horizontal view: