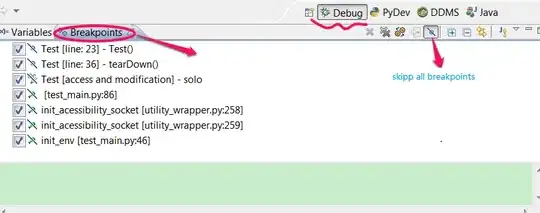

I am building a Python script to download images from a list of URLs. The script works to an extent, but I don't want it to save anything from URLs that no longer exist. Checking the status code filters out some of them, but I still end up with many bad images that I don't want, like the ones in the image above.

Here is my code:

    import os
    import requests
    import shutil
    import random
    import urllib.request

    def sendRequest(url):
        try:
            page = requests.get(url, stream = True, timeout = 1)
        except Exception:
            print('error exception')
            pass
        else:
            #HERE IS WHERE I DO THE STATUS CODE
            print(page.status_code)
            if (page.status_code == 200):
                return page
        return False

    def downloadImage(imageUrl: str, filePath: str):
        img = sendRequest(imageUrl)
        if (img == False):
            return False
        with open(filePath, "wb") as f:
            img.raw.decode_content = True
            try:
                shutil.copyfileobj(img.raw, f)
            except Exception:
                return False
        return True

    os.chdir('/Users/nikolasioannou/Desktop')
    os.mkdir('folder')

    fileURL = 'http://www.image-net.org/api/text/imagenet.synset.geturls?wnid=n04122825'
    data = urllib.request.urlopen(fileURL)
    output_directory = '/Users/nikolasioannou/Desktop/folder'

    line_count = 0
    for line in data:
        img_name = str(random.randrange(0, 10000)) + '.jpg'
        image_path = os.path.join(output_directory, img_name)
        downloadImage(line.decode('utf-8'), image_path)
        line_count = line_count + 1
        #print(line_count)
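
A status code of 200 only says the server answered, not that the body is the image that was asked for. A rough sketch of some extra checks that might help is below. It assumes the unwanted files are either HTML error pages or placeholder images served after a redirect, which may not be true for every host. The helper name `looks_like_image` and its parameters are hypothetical and not part of the script above.

```python
# Hypothetical helper: decides whether a response looks like a real image
# rather than an error page or a redirect placeholder.

# Leading "magic" bytes of a few common image formats.
IMAGE_SIGNATURES = (
    b"\xff\xd8\xff",        # JPEG
    b"\x89PNG\r\n\x1a\n",   # PNG
    b"GIF87a",              # GIF (1987 version)
    b"GIF89a",              # GIF (1989 version)
)


def looks_like_image(status_code, content_type, was_redirected, first_bytes):
    """Return True only if every check passes."""
    if status_code != 200:
        return False
    # Assumption: a redirect means the original image is gone and the host
    # is serving a placeholder instead. This can reject some valid images.
    if was_redirected:
        return False
    # The Content-Type header should announce an image, not text/html.
    if not content_type.lower().startswith("image/"):
        return False
    # Finally, the body itself should start with a known image signature.
    return first_bytes.startswith(IMAGE_SIGNATURES)
```

With `requests`, the arguments could come from `page.status_code`, `page.headers.get('Content-Type', '')`, `bool(page.history)` (the list is non-empty when redirects were followed), and the first few bytes of the body. If those bytes are read from the stream before saving, they have to be written to the file as well.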

Thanks for your time. Any ideas are appreciated.

Sincerely, Nikolas