Cloud functions are generally best-suited to perform just one (small) task. More often than not I come across people who want to do everything inside one cloud function. To be honest, this is also how I started developing cloud functions.

With this in mind, you should keep your cloud function code clean and small to perform just one task. Normally this would be a background task, a file or record that needs to be written somewhere, or a check that has to be performed. In this scenario, it doesn't really matter if there is a cold start penalty.

But nowadays, people, including myself, rely on cloud functions as a backend for API Gateway or Cloud Endpoints. In this scenario, the user goes to a website and the website sends a backend request to the cloud function to get some additional information. Now the cloud function acts as an API and a user is waiting for it.

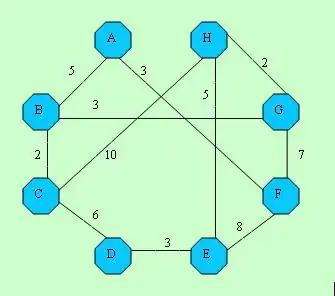

Typical cold cloud function:

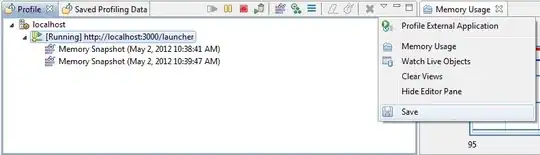

Typical warm cloud function:

There are several ways to cope with a cold-start problem:

- Reduce dependencies and the amount of code. As I said before, cloud functions are best suited to performing single tasks. A smaller function means a smaller package that has to be loaded onto a server between receiving a request and executing the code, which speeds things up significantly.

- Another, more hacky way is to create a Cloud Scheduler job that periodically sends a warmup request to your cloud function. GCP has a generous free tier, which allows for 3 scheduler jobs and 2 million cloud function invocations (depending on resource usage). So, depending on the number of cloud functions, you could easily schedule an HTTP request every few minutes (Cloud Scheduler's cron syntax has one-minute granularity). For the sake of clarity, I placed a snippet at the end of this post that deploys a cloud function and a scheduler job that sends warmup requests.
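To keep warmup requests cheap, the function can return early when it recognises one. Below is a minimal sketch in Go, assuming that warmup pings arrive as `OPTIONS` requests (as the scheduler job at the end of this post sends them) and that real traffic never uses that method. If your function has to answer CORS preflight requests, you would need a different marker, such as a custom header. The helper `isWarmupRequest` and the response body are made up for illustration. The sketch uses `package main` so it can be run locally; a deployed function would live in a non-main package.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// isWarmupRequest reports whether a request looks like a keep-warm ping.
// Assumption: warmup pings use OPTIONS and real traffic does not.
func isWarmupRequest(method string) bool {
	return method == http.MethodOptions
}

// Function is the HTTP entry point (the name matches --entry-point=Function).
func Function(w http.ResponseWriter, r *http.Request) {
	if isWarmupRequest(r.Method) {
		// Answer immediately: the only goal is to keep the instance alive.
		w.WriteHeader(http.StatusNoContent)
		return
	}
	// Placeholder for the real work of the function.
	fmt.Fprintln(w, "real response")
}

func main() {
	// Exercise the handler locally with a warmup ping and a normal request.
	for _, method := range []string{http.MethodOptions, http.MethodGet} {
		rec := httptest.NewRecorder()
		Function(rec, httptest.NewRequest(method, "/", nil))
		fmt.Println(method, "->", rec.Code)
	}
}
```

The early return means a warmup ping never touches databases or other downstream services, so it stays fast and cheap.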

Once you have the cold-start problem under control, you can also take measures to speed up the actual runtime:

- I switched from Python to Go, which gave me a double-digit percentage performance increase in terms of actual runtime. Go's speed is comparable to that of Java or C++.

- Declare variables, especially GCP clients like storage, pub/sub etc., at the global level. This way, future invocations of your cloud function that land on the same warm instance will reuse those objects.

- If you are performing multiple independent actions within a cloud function, you could run them concurrently instead of one after another.

- And again, clean code and fewer dependencies also improve the runtime.
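Two of the points above, reusing global objects and running independent actions concurrently, can be sketched in Go roughly as follows. This is an illustration under assumptions, not a definitive implementation: the `client` type is a hypothetical stand-in for an expensive GCP client (storage, Pub/Sub and so on), and `getClient`, `runConcurrently` and `initCount` are names invented for this example.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// client is a hypothetical stand-in for an expensive-to-create GCP client.
type client struct{ createdAt time.Time }

var (
	sharedClient *client   // package-level, so it survives between invocations
	clientOnce   sync.Once // guards the one-time initialisation
	initCount    int       // only here to show how often the client is built
)

// getClient builds the client on first use and returns the same one afterwards.
func getClient() *client {
	clientOnce.Do(func() {
		initCount++
		sharedClient = &client{createdAt: time.Now()}
	})
	return sharedClient
}

// runConcurrently starts every task in its own goroutine, waits for all of
// them, and returns their errors in the same order as the tasks.
func runConcurrently(tasks ...func() error) []error {
	errs := make([]error, len(tasks))
	var wg sync.WaitGroup
	for i, task := range tasks {
		wg.Add(1)
		go func(i int, task func() error) {
			defer wg.Done()
			errs[i] = task() // each goroutine writes only its own slot
		}(i, task)
	}
	wg.Wait()
	return errs
}

func main() {
	a, b := getClient(), getClient()
	fmt.Println("same client reused:", a == b, "initialisations:", initCount)

	start := time.Now()
	errs := runConcurrently(
		func() error { time.Sleep(50 * time.Millisecond); return nil }, // e.g. write a file
		func() error { time.Sleep(50 * time.Millisecond); return nil }, // e.g. publish a message
	)
	fmt.Println("errors:", errs, "elapsed:", time.Since(start).Round(10*time.Millisecond))
}
```

Because the two simulated actions run side by side, the total time should be close to the duration of the slowest one rather than the sum of both.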

Snippet:

    # Deploy the function
    # (go113 was the runtime used when this was written; substitute a
    # currently supported Go runtime if it is no longer available)
    gcloud functions deploy warm-function \
      --runtime=go113 \
      --entry-point=Function \
      --trigger-http \
      --project="${PROJECT_ID}" \
      --region=europe-west1 \
      --timeout=5s \
      --memory=128MB

    # Allow the service account used by the scheduler to invoke the function
    gcloud functions add-iam-policy-binding warm-function \
      --region=europe-west1 \
      --member="serviceAccount:${PROJECT_ID}@appspot.gserviceaccount.com" \
      --role=roles/cloudfunctions.invoker

    # Create a scheduler job that sends a warmup request every 5 minutes
    # (double quotes are needed so that ${PROJECT_ID} is expanded in the URLs)
    gcloud scheduler jobs create http warmup-job \
      --schedule='*/5 * * * *' \
      --uri="https://europe-west1-${PROJECT_ID}.cloudfunctions.net/warm-function" \
      --project="${PROJECT_ID}" \
      --http-method=OPTIONS \
      --oidc-service-account-email="${PROJECT_ID}@appspot.gserviceaccount.com" \
      --oidc-token-audience="https://europe-west1-${PROJECT_ID}.cloudfunctions.net/warm-function"