here's the modified description of my problem:

I have a situation here that I've been trying to solve but have hit a wall and would like different ideas.

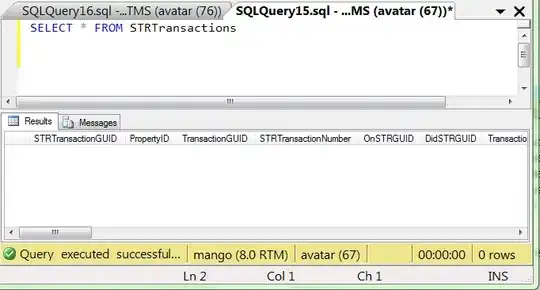

Scenario: data files are transferred from multiple stores (transactions) to the head office as they happen. for each store the table is a decent size. At the head office, that same table contains the same info from all stores which makes it huge. the data is transferred through files as there isn't any direct connections between the HO and the stores.

I'm trying to find a way to make sure that the data from each store has made it safe a sound to the head office table. To do this I have tried to do a checksum_AGG of a checksum for the fields that should match between the store and the HO... But the performance was poor. I've also tried the sum of specific numeric columns and do the same at host for a specific store to compare but the performance seems to be poor also.

I have verified the fragmentation of the indexes and all is good.

Ideally I would love to transfer all the data from the store line by line and compare it against the HO but it's unthinkable since it's way to big.

I'm looking for ideas that I can explore to see if performance is acceptable. The idea is to get some type of checksum of a table per date (store/Date) at a store and do the same operation at the HO... and then compare the 2 values... This means that I would only need to transfer a checksum value per store and date to the HO.

Any idea is appreciated.

Thank you