I am working on data preprocessing and want to compare the benefits of Data Standardization vs Normalization vs Robust Scaler practically.

In theory, the guidelines are:

Advantages:

- Standardization: scales features such that the distribution is centered around 0, with a standard deviation of 1.

- Normalization: shrinks the range such that the range is now between 0 and 1 (or -1 to 1 if there are negative values).

- Robust Scaler: similar to normalization but it instead uses the interquartile range, so that it is robust to outliers.

Disadvantages:

- Standardization: not good if the data is not normally distributed (i.e. no Gaussian Distribution).

- Normalization: get influenced heavily by outliers (i.e. extreme values).

- Robust Scaler: doesn't take the median into account and only focuses on the parts where the bulk data is.

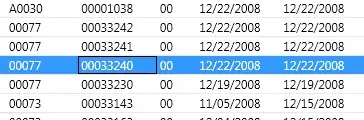

I created 20 random numerical inputs and tried the above-mentioned methods (numbers in red color represent the outliers):

I noticed that -indeed- the Normalization got affected negatively by the outliers and the change scale between the new values became tiny (all values almost identical -6 digits after the decimal point- 0.000000x) even there is noticeable differences between the original inputs!

My questions are:

- Am I right to say that also Standardization gets affected negatively by the extreme values as well? If not, why according to the result provided?

- I really can't see how the Robust Scaler improved the data because I still have extreme values in the resulted data set? Any simple complete interpretation?