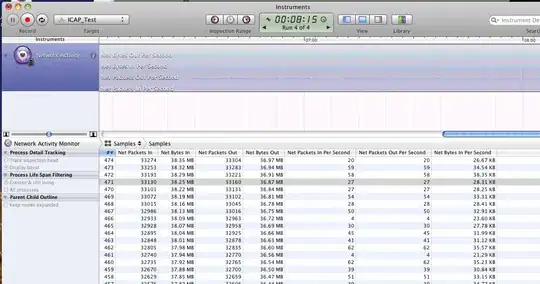

Update: I rewrote the function as a standalone program in a new C source file on macOS and printed every intermediate value:

```c
#include <stdio.h>

int main() {
    int x = 0xffffffff;

    int m2 = (((((0x55 << 8) + 0x55) << 8) + 0x55) << 8) + 0x55;
    printf("m2 : 0x%x\n", m2);

    int m4 = (((((0x33 << 8) + 0x33) << 8) + 0x33) << 8) + 0x33;
    printf("m4 : 0x%x\n", m4);

    int m8 = (((((0x0f << 8) + 0x0f) << 8) + 0x0f) << 8) + 0x0f;
    printf("m8 : 0x%x\n", m8);

    int m16 = (0xff << 16) + 0xff;
    printf("m16 : 0x%x\n", m16);

    int p1 = (x & m2) + ((x >> 1) & m2);
    printf("p1: 0x%x\n", p1);
    printf("p1 & m4 : 0x%x\n", p1 & m4);
    printf("p1 >> 2 : 0x%x\n", p1 >> 2);
    printf("(p1 >> 2) & m4 : 0x%x\n", (p1 >> 2) & m4);

    int p2 = (p1 & m4) + ((p1 >> 2) & m4);
    printf("p2 : 0x%x\n", p2);

    int p3 = (p2 & m8) + ((p2 >> 4) & m8);
    printf("p3 : 0x%x\n", p3);

    int p4 = (p3 & m16) + ((p3 >> 8) & m16);
    printf("p4 : 0x%x\n", p4);

    //int p4 = p3 + (p3 >> 8) ;

    int p5 = p4 + (p4 >> 16);
    printf("BigCount result is : 0x%x\n", p5 & 0xFF);
}
```

Every value it prints is the same as on Ubuntu, which confuses me even more.

When I run this function on macOS 10.12, it gives an unexpected answer. The input `x` is `0xffffffff` (-1).

The function is written in C:

```c
int bitCount(int x) {
    int m2 = (((((0x55 << 8) + 0x55) << 8) + 0x55) << 8) + 0x55;  /* 0x55555555 */
    int m4 = (((((0x33 << 8) + 0x33) << 8) + 0x33) << 8) + 0x33;  /* 0x33333333 */
    int m8 = (((((0x0f << 8) + 0x0f) << 8) + 0x0f) << 8) + 0x0f;  /* 0x0f0f0f0f */
    int m16 = (0xff << 16) + 0xff;                                  /* 0x00ff00ff */
    int p1 = (x & m2) + ((x >> 1) & m2);      /* per 2-bit field counts */
    int p2 = (p1 & m4) + ((p1 >> 2) & m4);    //line 7: per 4-bit field counts
    int p3 = (p2 & m8) + ((p2 >> 4) & m8);    /* per byte counts */
    int p4 = (p3 & m16) + ((p3 >> 8) & m16);  /* per 16-bit half counts */
    //int p4 = p3 + (p3 >> 8) ;
    int p5 = p4 + (p4 >> 16);                 /* total ends up in the low byte */
    return p5 & 0xFF;
}
```
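For comparison, the same mask-and-add (SWAR) bit count can be sketched with `uint32_t`, where `>>` always shifts in zeros and addition wraps modulo 2^32, so every intermediate value is well-defined. With a 32-bit `int` and `x = 0xffffffff` (assuming the usual arithmetic right shift for `x >> 1`), the first step computes 0x55555555 + 0x55555555 = 0xaaaaaaaa, which is larger than `INT_MAX`; in C, signed overflow is undefined behavior. The name `bitCountUnsigned` is hypothetical, and this is only a cross-check, not the original code.

```c
#include <stdint.h>

/* Hypothetical unsigned variant of bitCount: same masks and steps,
   but on uint32_t so shifts are logical and sums wrap modulo 2^32. */
int bitCountUnsigned(uint32_t x) {
    const uint32_t m2  = 0x55555555u;  /* 01 repeated */
    const uint32_t m4  = 0x33333333u;  /* 0011 repeated */
    const uint32_t m8  = 0x0f0f0f0fu;  /* low nibble of each byte */
    const uint32_t m16 = 0x00ff00ffu;  /* low byte of each 16-bit half */

    uint32_t p1 = (x & m2) + ((x >> 1) & m2);     /* counts per 2-bit field */
    uint32_t p2 = (p1 & m4) + ((p1 >> 2) & m4);   /* counts per 4-bit field */
    uint32_t p3 = (p2 & m8) + ((p2 >> 4) & m8);   /* counts per byte */
    uint32_t p4 = (p3 & m16) + ((p3 >> 8) & m16); /* counts per 16-bit half */
    uint32_t p5 = p4 + (p4 >> 16);                /* total in the low byte */
    return (int)(p5 & 0xFFu);
}
```

For example, `bitCountUnsigned(0xffffffffu)` returns 32, and for that input the line-7 term `(p1 >> 2) & m4` is `0x22222222`.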

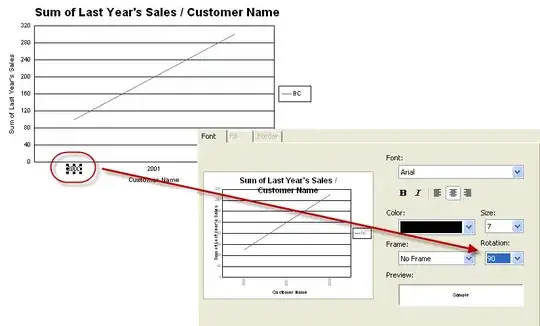

When I tracked all the local variables by printing them, I found that the expression `((p1 >> 2) & m4)` on line 7 printed `0x2222222` (seven 2s instead of eight).

This is very unexpected: `p1` prints `0x2aaaaaaa` and `m4` is `0x33333333`, so I expected `0x22222222` (eight 2s).
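The expected line-7 value can be checked with unsigned 32-bit arithmetic, where `>>` shifts in zeros. In this minimal sketch (the helper name `line7Term` is hypothetical), the result depends on which value is shifted: the full 32-bit sum 0xaaaaaaaa gives 0x22222222 (eight 2s), while 0x2aaaaaaa gives 0x02222222, which `%x` prints as `0x2222222` (seven 2s).

```c
#include <stdint.h>

/* Hypothetical helper: the line-7 term (p1 >> 2) & m4 on uint32_t,
   so the right shift is logical (zeros enter from the left). */
uint32_t line7Term(uint32_t p1) {
    const uint32_t m4 = 0x33333333u;
    return (p1 >> 2) & m4;
}
```

Here `line7Term(0xaaaaaaaau)` returns `0x22222222`, and `line7Term(0x2aaaaaaau)` returns `0x02222222`.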

However, when I run this on Ubuntu 16.04, everything is as expected: `((p1 >> 2) & m4)` prints `0x22222222`.

If you run this on your Mac, do you see the same problem? Is there anything different about macOS that could cause it?