I have a native plugin in Unity that decodes H264 frames to YUV420p using FFmpeg.

To display the output image, I rearrange the YUV values into an RGBA texture and do the YUV-to-RGB conversion in a Unity shader (to keep it fast).

The following is the rearrangement code in my native plugin:

```cpp
unsigned char* yStartLocation = (unsigned char*)m_pFrame->data[0];
unsigned char* uStartLocation = (unsigned char*)m_pFrame->data[1];
unsigned char* vStartLocation = (unsigned char*)m_pFrame->data[2];

// dst is the pointer to the target texture's RGBA data
for (int y = 0; y < height; y++)
{
    for (int x = 0; x < width; x++)
    {
        // YUV420p: full-resolution Y plane; U and V planes are half width and half height.
        // Offsets are computed from width, i.e. this assumes each plane's linesize
        // equals its visible width (no row padding).
        unsigned char* yPtr = yStartLocation + (y * width) + x;
        unsigned char* uPtr = uStartLocation + ((y / 2) * (width / 2)) + (x / 2);
        unsigned char* vPtr = vStartLocation + ((y / 2) * (width / 2)) + (x / 2);

        // REF: https://en.wikipedia.org/wiki/YUV
        // Write the texture pixel
        dst[0] = yPtr[0]; // R = Y
        dst[1] = uPtr[0]; // G = U
        dst[2] = vPtr[0]; // B = V
        dst[3] = 255;     // A

        // To next pixel
        dst += 4;
    }
}
```
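One thing worth ruling out: FFmpeg does not guarantee that `AVFrame::linesize[i]` equals the visible width of plane `i`. Rows are often padded for alignment, and the amount of padding may differ between builds and platforms. The loop above derives its row offsets from `width`, so any padding would shift every subsequent row. Below is a minimal stride-aware sketch of the same rearrangement. `PackYuv420pToRgba` is a hypothetical helper name, and the sketch assumes the decoder really outputs `AV_PIX_FMT_YUV420P`.

```cpp
#include <cstddef>

// Hypothetical helper (not from the original plugin): packs a YUV420p frame into
// the same R = Y, G = U, B = V, A = 255 layout as the loop above, but advances
// each plane by its own row stride instead of by the visible width.
// With FFmpeg, pass frame->data[0..2] and frame->linesize[0..2].
void PackYuv420pToRgba(const unsigned char* yPlane, int yStride,
                       const unsigned char* uPlane, int uStride,
                       const unsigned char* vPlane, int vStride,
                       int width, int height, unsigned char* dst)
{
    for (int row = 0; row < height; row++)
    {
        // Row starts come from the strides, so any per-row padding is skipped.
        // ptrdiff_t keeps the arithmetic signed in case a stride is negative.
        const unsigned char* yRow = yPlane + static_cast<std::ptrdiff_t>(row) * yStride;
        // 4:2:0: each chroma row is shared by two luma rows.
        const unsigned char* uRow = uPlane + static_cast<std::ptrdiff_t>(row / 2) * uStride;
        const unsigned char* vRow = vPlane + static_cast<std::ptrdiff_t>(row / 2) * vStride;

        for (int col = 0; col < width; col++)
        {
            dst[0] = yRow[col];     // R = Y
            dst[1] = uRow[col / 2]; // G = U (one chroma sample per 2 pixels)
            dst[2] = vRow[col / 2]; // B = V
            dst[3] = 255;           // A
            dst += 4;
        }
    }
}
```

In the plugin this would be called as `PackYuv420pToRgba(m_pFrame->data[0], m_pFrame->linesize[0], m_pFrame->data[1], m_pFrame->linesize[1], m_pFrame->data[2], m_pFrame->linesize[2], width, height, dst);`. If `linesize[0]` turns out to equal `width` on iOS as well, padding is not the cause and the problem lies elsewhere.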

The shader that converts YUV to RGB works perfectly and I've used it in multiple projects.

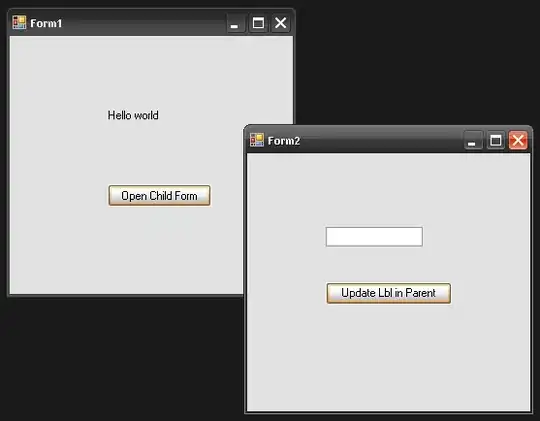
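For reference, the per-texel math that such a shader typically performs on the packed texture (R = Y, G = U, B = V) can be sketched on the CPU as follows. This is an illustration, not the actual shader: it assumes BT.601 limited-range ("video range") input and uses the commonly quoted approximate coefficients. The real shader may use BT.709 or full-range coefficients instead, and `YuvToRgbBt601` is a hypothetical name.

```cpp
#include <algorithm>

// Clamp to [0, 255] and round to the nearest integer.
static unsigned char ToByte(double v)
{
    v = std::min(255.0, std::max(0.0, v));
    return static_cast<unsigned char>(v + 0.5);
}

// Hypothetical CPU reference, assuming BT.601 limited range:
// Y in [16, 235], U and V centred on 128.
void YuvToRgbBt601(unsigned char y, unsigned char u, unsigned char v, unsigned char rgb[3])
{
    const double c = 1.164 * (y - 16); // scaled luma
    const double d = u - 128.0;        // blue-difference chroma (Cb)
    const double e = v - 128.0;        // red-difference chroma (Cr)
    rgb[0] = ToByte(c + 1.596 * e);               // R
    rgb[1] = ToByte(c - 0.392 * d - 0.813 * e);   // G
    rgb[2] = ToByte(c + 2.017 * d);               // B
}
```

With this mapping, a texel with U = V = 128 comes out grey, so if the U and V samples are misaligned with Y, the error shows up as colour fringes rather than as a change in brightness.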

Now I'm using the same code to decode on iOS, but for some reason the U and V values are now shifted:

Y Texture

U Texture

Is there anything that I'm missing for iOS or OpenGL specifically?

Any help is greatly appreciated. Thank you!

Please note that I set R = G = B = Y for the first screenshot and R = G = B = U for the second.

Edit:

Here's the output that I'm getting:

Edit 2: Based on some research, I think it may have something to do with interlacing.

ref: Link

For now, I've switched to CPU-based YUV-to-RGB conversion using `sws_scale`, and it works fine.