Possible duplicate of this question, with major parts picked from here. I've tried the solutions provided there, but they don't work for me.

Background

I'm capturing an image in YUV_420_888 format, as returned by ARCore's frame.acquireCameraImage() method. Since I've set the camera configuration to 1920x1080 resolution, I need to scale the image down to 224x224 to pass it to my TensorFlow Lite implementation. I do that using the libyuv library through the Android NDK.
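Android's YUV_420_888 is a flexible format: each Image.Plane reports its own row stride and pixel stride, and the U and V planes may use a pixel stride of 2, meaning their samples are interleaved in memory (an NV12/NV21-like layout) rather than stored as separate planes. Concatenating the plane buffers in that case does not produce the planar I420 layout the scaling code assumes. Below is a minimal sketch of repacking into tight I420, assuming the Java side passes each plane's buffer, row stride and pixel stride down to native code; PlaneView, copyPlane and packToI420 are hypothetical names used only for illustration. libyuv's Android420ToI420 also accepts a UV pixel stride and may cover the same case.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical view of one Android Image.Plane as seen from native code.
struct PlaneView {
    const std::uint8_t* data;  // first byte of the plane
    int rowStride;             // bytes between the starts of consecutive rows
    int pixelStride;           // bytes between samples in a row (1 = planar, 2 = interleaved)
};

// Hypothetical helper: copies a strided (possibly interleaved) plane into a tight buffer.
static void copyPlane(const PlaneView& src, int width, int height, std::uint8_t* dst) {
    assert(src.pixelStride >= 1);
    for (int row = 0; row < height; ++row) {
        const std::uint8_t* srcRow = src.data + static_cast<std::size_t>(row) * src.rowStride;
        for (int col = 0; col < width; ++col) {
            // Only reads sample positions, so the last row never touches bytes past the final sample.
            dst[static_cast<std::size_t>(row) * width + col] =
                srcRow[static_cast<std::size_t>(col) * src.pixelStride];
        }
    }
}

// Hypothetical packer: Y (width*height), then U, then V (each chroma plane is half size, rounded up).
std::vector<std::uint8_t> packToI420(const PlaneView& y, const PlaneView& u, const PlaneView& v,
                                     int width, int height) {
    assert(width > 0 && height > 0);
    const int cw = (width + 1) / 2;
    const int ch = (height + 1) / 2;
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    const std::size_t cSize = static_cast<std::size_t>(cw) * ch;
    std::vector<std::uint8_t> out(ySize + 2 * cSize);
    copyPlane(y, width, height, out.data());
    copyPlane(u, cw, ch, out.data() + ySize);
    copyPlane(v, cw, ch, out.data() + ySize + cSize);
    return out;
}
```

For an NV21-style buffer, the V view would start at the interleaved VU bytes with a pixel stride of 2 and the U view would start one byte later with the same strides.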

Implementation

Prepare the image frames

```cpp
// Figure out the source image dimensions
int y_size = srcWidth * srcHeight;

// Get dimensions of the desired output image
int out_size = destWidth * destHeight;

// Generate input frame
i420_input_frame.width = srcWidth;
i420_input_frame.height = srcHeight;
i420_input_frame.data = (uint8_t*) yuvArray;
i420_input_frame.y = i420_input_frame.data;
i420_input_frame.u = i420_input_frame.y + y_size;
i420_input_frame.v = i420_input_frame.u + (y_size / 4);

// Generate output frame
// The previous buffer was allocated with new[], so it must be released with delete[], not free()
delete[] i420_output_frame.data;
i420_output_frame.width = destWidth;
i420_output_frame.height = destHeight;
i420_output_frame.data = new unsigned char[out_size * 3 / 2];
i420_output_frame.y = i420_output_frame.data;
i420_output_frame.u = i420_output_frame.y + out_size;
i420_output_frame.v = i420_output_frame.u + (out_size / 4);
```
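The offsets above assume tightly packed I420: a full-resolution Y plane followed by U and V planes at half width and half height each. The sketch below computes that layout for arbitrary sizes; I420Layout and computeI420Layout are hypothetical names, and rounding the chroma dimensions up for odd widths or heights is an assumption matching how I420 is commonly defined. For even sizes such as 1920x1080 and 224x224 it agrees with y_size / 4 and width / 2.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a tightly packed I420 buffer.
struct I420Layout {
    std::size_t ySize;       // width * height
    std::size_t chromaSize;  // ((width + 1) / 2) * ((height + 1) / 2), same for U and V
    std::size_t uOffset;     // U plane starts right after Y
    std::size_t vOffset;     // V plane starts right after U
    std::size_t totalSize;   // ySize + 2 * chromaSize
    int chromaStride;        // row stride of the U and V planes in bytes
};

I420Layout computeI420Layout(int width, int height) {
    assert(width > 0 && height > 0);
    const std::size_t cw = static_cast<std::size_t>(width + 1) / 2;
    const std::size_t ch = static_cast<std::size_t>(height + 1) / 2;
    I420Layout l{};
    l.ySize = static_cast<std::size_t>(width) * height;
    l.chromaSize = cw * ch;
    l.uOffset = l.ySize;
    l.vOffset = l.uOffset + l.chromaSize;
    l.totalSize = l.ySize + 2 * l.chromaSize;
    l.chromaStride = static_cast<int>(cw);
    return l;
}
```

For 224x224 this gives a 50176-byte Y plane, 12544-byte U and V planes and a 75264-byte buffer, the same as out_size * 3 / 2.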

I scale the image using libyuv's I420Scale function:

```cpp
libyuv::FilterMode mode = libyuv::FilterModeEnum::kFilterBox;

jint result = libyuv::I420Scale(i420_input_frame.y, i420_input_frame.width,
                                i420_input_frame.u, i420_input_frame.width / 2,
                                i420_input_frame.v, i420_input_frame.width / 2,
                                i420_input_frame.width, i420_input_frame.height,
                                i420_output_frame.y, i420_output_frame.width,
                                i420_output_frame.u, i420_output_frame.width / 2,
                                i420_output_frame.v, i420_output_frame.width / 2,
                                i420_output_frame.width, i420_output_frame.height,
                                mode);
```
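I420Scale scales the three planes independently, the Y plane at full size and the U and V planes at half width and half height, so each chroma pointer and stride must describe a genuinely planar buffer. The simplified area-averaging filter below illustrates what happens to one plane; it is a reference sketch (boxDownscalePlane is a hypothetical name), not libyuv's implementation, and its rounding and edge handling may differ from kFilterBox.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical reference box (area-average) downscale of ONE 8-bit plane.
// Destination sample (dx, dy) averages source columns [dx*srcW/dstW, (dx+1)*srcW/dstW)
// and rows [dy*srcH/dstH, (dy+1)*srcH/dstH).
std::vector<std::uint8_t> boxDownscalePlane(const std::uint8_t* src, int srcStride,
                                            int srcW, int srcH, int dstW, int dstH) {
    // Downscaling only, so every box contains at least one source sample.
    assert(dstW > 0 && dstH > 0 && dstW <= srcW && dstH <= srcH);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(dstW) * dstH);
    for (int dy = 0; dy < dstH; ++dy) {
        const int y0 = static_cast<int>(static_cast<long long>(dy) * srcH / dstH);
        const int y1 = static_cast<int>(static_cast<long long>(dy + 1) * srcH / dstH);
        for (int dx = 0; dx < dstW; ++dx) {
            const int x0 = static_cast<int>(static_cast<long long>(dx) * srcW / dstW);
            const int x1 = static_cast<int>(static_cast<long long>(dx + 1) * srcW / dstW);
            std::uint32_t sum = 0;
            for (int y = y0; y < y1; ++y)
                for (int x = x0; x < x1; ++x)
                    sum += src[static_cast<std::size_t>(y) * srcStride + x];
            const std::uint32_t count = static_cast<std::uint32_t>((y1 - y0) * (x1 - x0));
            // Round to nearest when averaging.
            dst[static_cast<std::size_t>(dy) * dstW + dx] =
                static_cast<std::uint8_t>((sum + count / 2) / count);
        }
    }
    return dst;
}
```

Applying it to Y at srcWidth x srcHeight and to U and V at (srcWidth / 2) x (srcHeight / 2) mirrors the per-plane structure of the I420Scale call above.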

and return it to Java:

```cpp
// Create a new byte array to return to the caller in Java
jbyteArray outputArray = env->NewByteArray(out_size * 3 / 2);
env->SetByteArrayRegion(outputArray, 0, out_size, (jbyte*) i420_output_frame.y);
env->SetByteArrayRegion(outputArray, out_size, out_size / 4, (jbyte*) i420_output_frame.u);
env->SetByteArrayRegion(outputArray, out_size + (out_size / 4), out_size / 4, (jbyte*) i420_output_frame.v);
```
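Many TensorFlow Lite image models expect RGB input, so the I420 bytes returned here typically need converting before inference or visual inspection. A minimal sketch follows, assuming BT.601 limited-range YUV and the common 8-bit integer approximation of its coefficients; whether the ARCore camera image actually uses that matrix and range is an assumption, and i420ToRgb and clampToByte are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: clamps an intermediate value into the 0..255 byte range.
static std::uint8_t clampToByte(int v) {
    return static_cast<std::uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// Hypothetical converter: tightly packed I420 (Y, then U, then V) to interleaved RGB888.
// Assumes BT.601 limited range (Y in [16, 235]) with the usual integer coefficients.
std::vector<std::uint8_t> i420ToRgb(const std::vector<std::uint8_t>& i420, int width, int height) {
    const int cw = (width + 1) / 2;
    const int ch = (height + 1) / 2;
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    const std::size_t cSize = static_cast<std::size_t>(cw) * ch;
    assert(i420.size() >= ySize + 2 * cSize);
    const std::uint8_t* yPlane = i420.data();
    const std::uint8_t* uPlane = yPlane + ySize;
    const std::uint8_t* vPlane = uPlane + cSize;
    std::vector<std::uint8_t> rgb(ySize * 3);
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            // Each U/V sample is shared by a 2x2 block of luma samples.
            const std::size_t ci = static_cast<std::size_t>(row / 2) * cw + col / 2;
            const int c = yPlane[static_cast<std::size_t>(row) * width + col] - 16;
            const int d = uPlane[ci] - 128;
            const int e = vPlane[ci] - 128;
            std::uint8_t* px = &rgb[(static_cast<std::size_t>(row) * width + col) * 3];
            px[0] = clampToByte((298 * c + 409 * e + 128) >> 8);            // R
            px[1] = clampToByte((298 * c - 100 * d - 208 * e + 128) >> 8);  // G
            px[2] = clampToByte((298 * c + 516 * d + 128) >> 8);            // B
        }
    }
    return rgb;
}
```

Because each chroma sample covers a 2x2 block, wrong values in the U or V plane show up as color errors while the luma detail stays intact.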

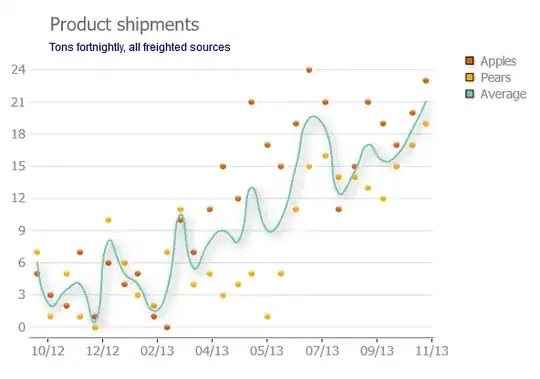

What it looks like after scaling:

What it looks like if I create an Image from the i420_input_frame without scaling:

Since the scaling badly distorts the colors, TensorFlow Lite fails to recognize objects properly (it does recognize them correctly in their sample application). What am I doing wrong that messes up the colors so badly?