Current OpenShift MongoDB version:

sh-4.2$ mongod --version

db version v3.2.10

git version: 79d9b3ab5ce20f51c272b4411202710a082d0317

OpenSSL version: OpenSSL 1.0.1e-fips 11 Feb 2013

allocator: tcmalloc

modules: none

build environment:

distarch: x86_64

target_arch: x86_64

I was told the latest version available on OpenShift was 3.6.

The steps available from OpenShift support are:

Navigate to the following:

Applications > Deployments > Actions drop down (on the right) and Edit.

From there modify the value for the version under Images / Image Stream Tag:

openshift / mongodb :3.6

Be aware that this will only upgrade the MongoDB version. You may still need to perform other tasks to upgrade your data to match, and those are outside the scope of support.

I'm a bit concerned about the possible "other tasks".

How can I upgrade MongoDB from 3.2.10 to 3.6 on OpenShift safely?

Edit

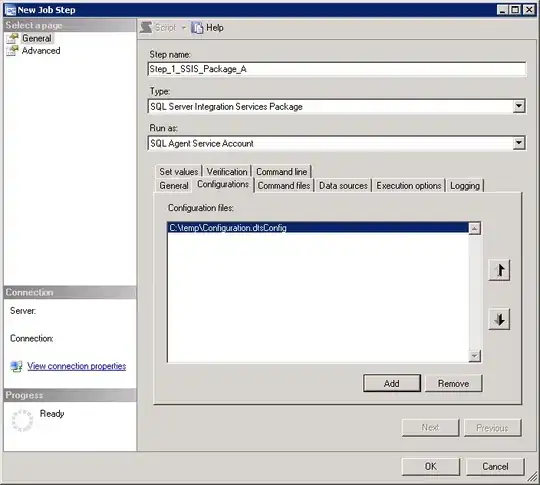

I tried just doing the steps listed and this was the result:

Frontend website displays:

{"name":"MongoError","message":"Topology was destroyed"}

And these are various shots from the console.

I googled "Topology was destroyed" and came across this answer:

https://stackoverflow.com/a/31950062

Although it is about Mongoose, which I don't use, it describes Node failing when it cannot connect to MongoDB.

So I scaled down the Node pod, and then the MongoDB pod, and then scaled up the MongoDB pod, and then the Node pod.

It seems to have reverted to the last working MongoDB deployment.

Then I clicked Deploy for the MongoDB pod and it got stuck again with the message shown in the first image above.

Edit 2

I tried scaling down both pods and then deploying. I noticed this in the MongoDB logs:

2018-09-14T14:08:40.176+0000 F CONTROL [initandlisten] ** IMPORTANT: UPGRADE PROBLEM: The data files need to be fully upgraded to version 3.4 before attempting an upgrade to 3.6; see http://dochub.mongodb.org/core/3.6-upgrade-fcv for more details.

2018-09-14T14:08:40.176+0000 I NETWORK [initandlisten] shutdown: going to close listening sockets...

2018-09-14T14:08:40.176+0000 I NETWORK [initandlisten] removing socket file: /tmp/mongodb-27017.sock

2018-09-14T14:08:40.176+0000 I REPL [initandlisten] shutdown: removing all drop-pending collections...

2018-09-14T14:08:40.176+0000 I REPL [initandlisten] shutdown: removing checkpointTimestamp collection...

2018-09-14T14:08:40.176+0000 I STORAGE [initandlisten] WiredTigerKVEngine shutting down

=> Waiting for MongoDB daemon up

2018-09-14T14:08:40.416+0000 I STORAGE [initandlisten] WiredTiger message [1536934120:416170][26:0x7f20e10adb80], txn-recover: Main recovery loop: starting at 61/3200

2018-09-14T14:08:40.529+0000 I STORAGE [initandlisten] WiredTiger message [1536934120:529090][26:0x7f20e10adb80], txn-recover: Recovering log 61 through 62

2018-09-14T14:08:40.593+0000 I STORAGE [initandlisten] WiredTiger message [1536934120:593166][26:0x7f20e10adb80], txn-recover: Recovering log 62 through 62

2018-09-14T14:08:40.684+0000 I STORAGE [initandlisten] shutdown: removing fs lock...

2018-09-14T14:08:40.684+0000 I CONTROL [initandlisten] now exiting

2018-09-14T14:08:40.684+0000 I CONTROL [initandlisten] shutting down with code:62

=> Waiting for MongoDB daemon up

Edit 3

I have made some progress. I took these steps:

- Scale down MongoDB and Node pods.

- Set MongoDB version to 3.4

- Click Deploy

The pod came up and ran on 3.4, but when I tried to do the same with 3.6, the logs again showed the error message "The data files need to be fully upgraded to version 3.4 before attempting an upgrade to 3.6".

Edit 4

Re:

https://docs.mongodb.com/manual/release-notes/3.6-upgrade-standalone/#upgrade-version-path

I ran this in the pod terminal:

env | grep MONGODB # to confirm I had the admin password correct

mongo -u admin -p ************ admin

db.adminCommand( { getParameter: 1, featureCompatibilityVersion: 1 } )

and it returned:

{ "featureCompatibilityVersion" : "3.2", "ok" : 1 }
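That value seems to explain the failure in Edit 3: the 3.4 binaries were running, but the data files were still marked as 3.2. Here is a minimal sketch of the rule as I understand it from the upgrade docs. It is a simplified model, not MongoDB's actual startup check, and `SERIES` and `canStart` are names I made up for illustration.

```javascript
// Simplified model (an assumption, not MongoDB's real startup code):
// a mongod binary of release series R starts only when the data files'
// featureCompatibilityVersion is R itself or the series just before R.
const SERIES = ["3.2", "3.4", "3.6"]; // only the series relevant to this post

function canStart(binarySeries, fcv) {
  const b = SERIES.indexOf(binarySeries);
  const f = SERIES.indexOf(fcv);
  if (b === -1 || f === -1) {
    throw new Error("unknown series: " + binarySeries + " / " + fcv);
  }
  return f === b || f === b - 1;
}

console.log(canStart("3.4", "3.2")); // 3.4 binaries on 3.2 data files
console.log(canStart("3.6", "3.2")); // 3.6 binaries on 3.2 data files
console.log(canStart("3.6", "3.4")); // 3.6 binaries on 3.4 data files
```

Under this model only the middle case is refused, which matches what I saw: the 3.4 image started, and the 3.6 image shut down with code 62.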

So I did:

> db.adminCommand( { setFeatureCompatibilityVersion: "3.4" } )

{ "ok" : 1 }

> db.adminCommand( { getParameter: 1, featureCompatibilityVersion: 1 } )

{ "featureCompatibilityVersion" : "3.4", "ok" : 1 }

Then I ensured all pods were scaled down and changed MongoDB version from 3.4 to 3.6, deployed, and tried to scale the MongoDB pod back up.

And it came back up!

Edit 5

I went back into terminal and ran:

> db.adminCommand( { setFeatureCompatibilityVersion: "3.6" } )

{ "ok" : 1 }

because I got this error in the browser dev tools console:

The featureCompatibilityVersion must be 3.6 to use arrayFilters. See http://dochub.mongodb.org/core/3.6-feature-compatibility.

And the functionality is working on the front end now!
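Putting the edits together, the order that worked for me can be sketched as a small plan generator. This is only an illustration of the sequence, under the assumption that every release series between the old and new version has to be visited in turn. The step names `deployImageTag` and `setFeatureCompatibilityVersion` are my own labels for "change the image stream tag and deploy" and "run the `setFeatureCompatibilityVersion` admin command"; the function itself does not talk to OpenShift or MongoDB.

```javascript
// Release series in order; an upgrade passes through each one in turn.
const SERIES = ["3.2", "3.4", "3.6"];

// Returns the ordered steps to get from one series to another.
// The action names are hypothetical labels, not real CLI commands.
function upgradePlan(from, to) {
  const start = SERIES.indexOf(from);
  const end = SERIES.indexOf(to);
  if (start === -1 || end === -1 || end < start) {
    throw new Error("unsupported path: " + from + " -> " + to);
  }
  const steps = [];
  for (let i = start + 1; i <= end; i++) {
    // 1. Scale down, point the image stream tag at the next series, deploy.
    steps.push({ action: "deployImageTag", series: SERIES[i] });
    // 2. Once mongod is up, raise the FCV before moving to the next series.
    steps.push({ action: "setFeatureCompatibilityVersion", series: SERIES[i] });
  }
  return steps;
}

for (const step of upgradePlan("3.2", "3.6")) {
  console.log(step.action + " " + step.series);
}
```

For 3.2 to 3.6 this lists four steps: deploy 3.4, set the FCV to 3.4, deploy 3.6, set the FCV to 3.6. That is the same order as Edits 3 to 5.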