I'm working on a space environment model that predicts tomorrow's maximum Kp index from the last three days of coronal hole information.

(The dataset covers about 4300 days in total.)

The input consists of three arrays of 136 elements each, one array per day, covering three days. For example:

inputArray_day1 = [0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

inputArray_day2 = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0]

inputArray_day3 = [0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
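For reference, here is a minimal sketch of how three daily arrays like these could be stacked into the `(samples, timesteps, features)` layout that a Keras LSTM expects. The sliding-window construction, the helper name `make_sample`, and the random placeholder data are assumptions for illustration; the post does not say how the samples are actually built.

```python
import numpy as np

N_FEATURES = 136  # elements per daily coronal hole array
N_DAYS = 3        # days of history per sample

def make_sample(day1, day2, day3):
    """Stack three daily arrays into one (N_DAYS, N_FEATURES) sequence."""
    sample = np.stack([day1, day2, day3]).astype(np.float32)
    assert sample.shape == (N_DAYS, N_FEATURES)
    return sample

# Placeholder data standing in for the real daily arrays (assumption).
rng = np.random.default_rng(0)
days = rng.integers(0, 2, size=(10, N_FEATURES))  # 10 consecutive days

# Sliding window: days i..i+2 form the input for the target on day i+3.
X = np.stack([make_sample(days[i], days[i + 1], days[i + 2])
              for i in range(len(days) - N_DAYS)])
print(X.shape)  # (7, 3, 136)
```

With this layout, `X.shape[1]` is the number of timesteps (3) and `X.shape[2]` is the number of features (136).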

The output is a single one-hot vector of length 28 that indicates the maximum Kp index on day 4. I use the dictionaries below to convert between the Kp index and the one-hot vector.

kp2idx = {0.0:0, 0.3:1, 0.7:2, 1.0:3, 1.3:4, 1.7:5, 2.0:6, 2.3:7, 2.7:8, 3.0:9, 3.3:10, 3.7:11, 4.0:12, 4.3:13,
          4.7:14, 5.0:15, 5.3:16, 5.7:17, 6.0:18, 6.3:19, 6.7:20, 7.0:21, 7.3:22, 7.7:23, 8.0:24, 8.3:25, 8.7:26, 9.0:27}

idx2kp = {0:0.0, 1:0.3, 2:0.7, 3:1.0, 4:1.3, 5:1.7, 6:2.0, 7:2.3, 8:2.7, 9:3.0, 10:3.3, 11:3.7, 12:4.0, 13:4.3,
          14:4.7, 15:5.0, 16:5.3, 17:5.7, 18:6.0, 19:6.3, 20:6.7, 21:7.0, 22:7.3, 23:7.7, 24:8.0, 25:8.3, 26:8.7, 27:9.0}

The model contains two LSTM layers with dropout.

import tensorflow as tf

def fit_lstm2(X, Y, Xv, Yv, n_batch, nb_epoch, n_neu1, n_neu2, dropout):
    # X and Xv have shape (samples, timesteps, features); Y and Yv are one-hot vectors of length 28.
    model = tf.keras.Sequential()
    model.add(tf.keras.layers.LSTM(n_neu1, batch_input_shape=(n_batch, X.shape[1], X.shape[2]), return_sequences=True))
    model.add(tf.keras.layers.Dropout(dropout))
    model.add(tf.keras.layers.LSTM(n_neu2))
    model.add(tf.keras.layers.Dropout(dropout))
    model.add(tf.keras.layers.Dense(28, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='Adam', metrics=['accuracy', 'mse'])
    # Train one epoch at a time and reset the LSTM states after each epoch.
    for i in range(nb_epoch):
        print('epochs : ' + str(i))
        # custom_hist is a Keras callback defined elsewhere (not shown here).
        model.fit(X, Y, epochs=1, batch_size=n_batch, verbose=1, shuffle=False, callbacks=[custom_hist], validation_data=(Xv, Yv))
        model.reset_states()
    return model

I tried various numbers of neurons and dropout rates, such as:

n_batch = 1
nb_epochs = 100
n_neu1 = [128, 64, 32, 16]
n_neu2 = [64, 32, 16, 8]
n_dropout = [0.2, 0.4, 0.6, 0.8]

for dropout in n_dropout:
    for i in range(len(n_neu1)):
        model = fit_lstm2(x_train, y_train, x_val, y_val, n_batch, nb_epochs, n_neu1[i], n_neu2[i], dropout)
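To make the size of this sweep explicit, the sketch below enumerates the same configurations without training anything: each dropout rate is combined with each paired layer size, in the same order as the nested loops. The `configs` list and its dictionary keys are illustrative names only.

```python
from itertools import product

n_neu1 = [128, 64, 32, 16]
n_neu2 = [64, 32, 16, 8]
n_dropout = [0.2, 0.4, 0.6, 0.8]

# Layer sizes are paired by index (128 with 64, 64 with 32, ...),
# and every pair is combined with every dropout rate.
configs = [
    {"n_neu1": n1, "n_neu2": n2, "dropout": d}
    for d, (n1, n2) in product(n_dropout, zip(n_neu1, n_neu2))
]

print(len(configs))  # 16
print(configs[0])    # {'n_neu1': 128, 'n_neu2': 64, 'dropout': 0.2}
```

So the sweep trains 16 models, each for 100 epochs with a batch size of 1.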

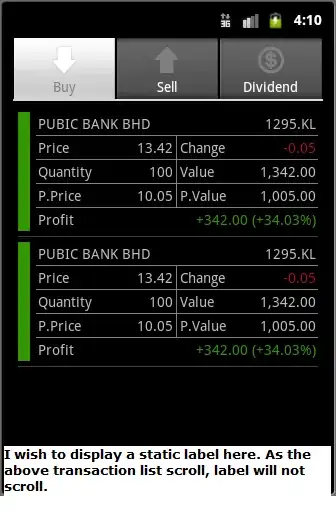

The problem is that the prediction accuracy never rises above 10%, and over-fitting starts very soon after training begins.

Here are some images of the training histories. (Sorry about the placement of the legends.)

Honestly, I have no idea why the validation accuracy never goes up and why the over-fitting starts so quickly. Is there a better way to use the input data? For instance, should I normalize or standardize the input?
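To make the standardization question concrete, this is a minimal sketch of what per-feature standardization of the inputs would look like, using statistics from the training set only. The helper name `standardize` and the random placeholder arrays are assumptions. Since the example inputs above are already binary (0 or 1), it is an open question whether this step would change anything for this data.

```python
import numpy as np

def standardize(train, other, eps=1e-8):
    """Standardize both arrays with per-feature statistics from `train` only."""
    # Average over samples and timesteps, keeping one value per feature.
    mean = train.mean(axis=(0, 1), keepdims=True)  # shape (1, 1, n_features)
    std = train.std(axis=(0, 1), keepdims=True)
    return (train - mean) / (std + eps), (other - mean) / (std + eps)

# Placeholder arrays with the same layout as x_train / x_val (assumption).
rng = np.random.default_rng(0)
x_train = rng.integers(0, 2, size=(100, 3, 136)).astype(np.float32)
x_val = rng.integers(0, 2, size=(20, 3, 136)).astype(np.float32)

x_train_std, x_val_std = standardize(x_train, x_val)
print(x_train_std.shape, x_val_std.shape)  # (100, 3, 136) (20, 3, 136)
```

The validation set reuses the training mean and standard deviation so that no information from the validation data leaks into preprocessing.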

Please help. Any comments and suggestions would be greatly appreciated.