How do I invert the stationarity and reapply the dates to the data for plotting?

Sources:

- https://nbviewer.jupyter.org/github/robbiemu/location-metric-data/blob/master/appData%20and%20locationData.ipynb

- https://github.com/robbiemu/location-metric-data

I am trying to invert the stationarity transform (differencing) and plot the predictions, particularly for two columns called ' app_1' and ' app_2' (the orange and red lines below).

The data I am drawing from looks like this:

    print(u1.info())
    u1.head()

    <class 'pandas.core.frame.DataFrame'>
    DatetimeIndex: 15011 entries, 2017-08-28 11:00:00 to 2018-01-31 19:30:00
    Freq: 15T
    Data columns (total 10 columns):
    app_1        15011 non-null float64
    app_2        15011 non-null float64
    user         15011 non-null object
    bar          15011 non-null float64
    grocers      15011 non-null float64
    home         15011 non-null float64
    lunch        15011 non-null float64
    park         15011 non-null float64
    relatives    15011 non-null float64
    work         15011 non-null float64
    dtypes: float64(9), object(1)
    memory usage: 1.3+ MB

                            app_1  app_2    user  bar  grocers  home  lunch  park  relatives  work
    date
    2017-08-28 11:00:00  0.010000    0.0  user_1  0.0      0.0   0.0    0.0   0.0        0.0   0.0
    2017-08-28 11:15:00  0.010125    0.0  user_1  0.0      0.0   0.0    0.0   0.0        0.0   0.0
    2017-08-28 11:30:00  0.010250    0.0  user_1  0.0      0.0   0.0    0.0   0.0        0.0   0.0
    2017-08-28 11:45:00  0.010375    0.0  user_1  0.0      0.0   0.0    0.0   0.0        0.0   0.0
    2017-08-28 12:00:00  0.010500    0.0  user_1  0.0      0.0   0.0    0.0   0.0        0.0   0.0

The location columns (bar, grocers, home, lunch, park, relatives, work) each represent a place the user can be at a given time. After the first "significant location change" event, one and only one of these columns will be 1 at any time.
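To make that property concrete, here is a minimal sketch of how it could be checked. The toy frame and its values are made up for illustration; only the column names and the 15-minute spacing come from the data above.

```python
import pandas as pd

# Hypothetical toy frame mimicking three of the location columns.
toy = pd.DataFrame(
    {
        "home": [0.0, 1.0, 1.0, 0.0],
        "work": [0.0, 0.0, 0.0, 1.0],
        "park": [0.0, 0.0, 0.0, 0.0],
    },
    index=pd.date_range("2017-08-28 11:00", periods=4, freq="15min"),
)

# Number of active locations at each timestamp.
active = toy.sum(axis=1)

# Rows before the first location event are all zeros; from the first event
# onward, exactly one column should be 1.
started = active.cumsum() > 0
one_hot_ok = bool((active[started] == 1).all())
print(one_hot_ok)
```

On the real data the same check would run over `u1` restricted to the location columns.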

I am analyzing this with a vector autoregression, using the VARMAX class from statsmodels with its default order:

    from statsmodels.tsa.statespace.varmax import VARMAX
    from sklearn.metrics import mean_squared_error  # needed for the score printed at the end
    import pandas as pd
    import numpy as np
    %matplotlib inline
    import matplotlib
    import matplotlib.pyplot as plt
    from random import random

    #...

    columns = [ ' app_1', ' app_2', ' bar', ' grocers', ' home', ' lunch', ' work', ' park', ' relatives' ]
    series = u1[columns]

    # from: https://machinelearningmastery.com/make-predictions-time-series-forecasting-python/
    # create a difference transform of the dataset
    def difference(dataset):
        diff = list()
        for i in range(1, len(dataset)):
            value = dataset[i] - dataset[i - 1]
            diff.append(value)
        return np.array(diff)

    # Make a prediction given regression coefficients and lagged observations
    def predict(coef, history):
        yhat = coef[0]
        for i in range(1, len(coef)):
            yhat += coef[i] * history[-i]
        return yhat

    # difference every column (note: this drops the DatetimeIndex)
    X = pd.DataFrame()
    for column in columns:
        X[column] = difference(series[column].values)

    size = (4*24)*54 # hoping
    train, test = X[0:size], X[size:size+(14*4*24)]

    # drop constant columns
    train = train.loc[:, (train != train.iloc[0]).any()] # https://stackoverflow.com/questions/20209600/panda-dataframe-remove-constant-column
    test = test.loc[:, (test != test.iloc[0]).any()] # https://stackoverflow.com/questions/20209600/panda-dataframe-remove-constant-column
    #print(train.var(), X.info())

    # train autoregression
    model = VARMAX(train)
    model_fit = model.fit(method='powell', disp=False)
    #print(model_fit.mle_retvals)
    ##window = model_fit.k_ar
    coef = model_fit.params

    # walk forward over time steps in test
    history = [train.iloc[i] for i in range(len(train))]
    predictions = list()
    for t in range(len(test)):
        yhat = predict(coef, history)
        obs = test.iloc[t]
        predictions.append(yhat)
        history.append(obs)

    print(mean_squared_error(test, predictions))

    0.5594208989876831

That mean_squared_error from scikit-learn is not horrifying (it is roughly in the middle of the three example values shown in the documentation, in fact). That _could_ mean that the data is predictive. I'd like to see that in a plot.
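One caveat on reading that number: mean squared error is expressed in the squared units of the data, so it is only meaningful relative to something on the same scale. A rough sketch of one common sanity check, comparing against a "no change" forecast of zero on the differenced series, is below. The arrays are randomly generated stand-ins, not my data, and `mse` is a small helper written for this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical differenced test values and model predictions (made-up numbers).
actual = rng.normal(0.0, 0.01, size=200)
predicted = actual + rng.normal(0.0, 0.005, size=200)

def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

model_mse = mse(actual, predicted)
# Baseline: predict zero change at every step of the differenced series.
baseline_mse = mse(actual, np.zeros_like(actual))

# A ratio well below 1 would suggest the model beats the naive baseline.
print(model_mse, baseline_mse, model_mse / baseline_mse)
```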

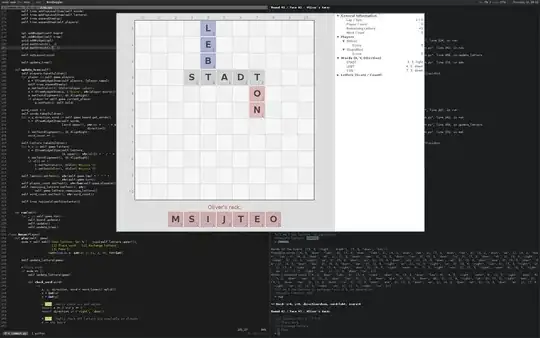

    # plot
    plt.plot(test)
    plt.plot(predictions, color='red')
    plt.show()

So, part of what is going on here is that the data is seasonal, so it had to be made stationary by differencing. Now the lines are all vertical instead of running along the time axis.
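For reference, this is a small sketch of what differencing looks like when the dates are kept. It assumes pandas' built-in `DataFrame.diff` is an acceptable replacement for a hand-written difference function; the toy values are copied from the head of the table above.

```python
import pandas as pd

# Toy frame with a 15-minute DatetimeIndex, like the real data.
idx = pd.date_range("2017-08-28 11:00", periods=5, freq="15min")
toy = pd.DataFrame(
    {"app_1": [0.010000, 0.010125, 0.010250, 0.010375, 0.010500]},
    index=idx,
)

# First-order difference. The first row has no predecessor, so it becomes
# NaN and is dropped; every remaining row keeps its original timestamp.
diffed = toy.diff().dropna()

print(diffed.index[0])  # timestamp of the first differenced row
print(len(toy), len(diffed))
```

Because `diffed` still carries a DatetimeIndex, plotting it puts time on the x axis without any extra work.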

But another thing that concerns me is the scale of the red data. That's a lot of red. Anyway, how do I invert the stationarity and reapply the dates to the data for plotting? It obviously should not look like that. :)
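To pin down what I mean by inverting, here is a hedged sketch on made-up numbers. It assumes first-order differencing only, and `predicted_diffs` is a hypothetical stand-in for model output rather than anything VARMAX returned.

```python
import numpy as np
import pandas as pd

# Toy level series on a 15-minute DatetimeIndex (values are made up).
idx = pd.date_range("2017-08-28 11:00", periods=8, freq="15min")
levels = pd.Series([1.0, 1.5, 1.2, 2.0, 2.5, 2.4, 3.0, 3.1], index=idx, name="app_1")

# Forward transform: first-order difference, keeping the dates.
diffed = levels.diff().dropna()

# Round trip: first level plus the cumulative sum of differences
# recovers the original levels from the second timestamp onward.
restored = levels.iloc[0] + diffed.cumsum()

# Hypothetical predicted differences for the last three timestamps.
test_index = diffed.index[-3:]
predicted_diffs = pd.Series([0.4, -0.2, 0.3], index=test_index)

# Multi-step inversion: start from the last level observed before the
# test window and accumulate the predicted differences.
anchor = levels.loc[levels.index < test_index[0]].iloc[-1]
predicted_levels = anchor + predicted_diffs.cumsum()

# One-step (walk-forward) inversion: add each predicted difference to the
# previous *observed* level instead of the previous prediction.
one_step_levels = levels.shift(1).loc[test_index] + predicted_diffs

print(predicted_levels)
print(one_step_levels)
```

Both results are indexed by timestamp, so something like `predicted_levels.plot()` would draw them against dates. Which of the two inversions matches the walk-forward loop above is part of what I am unsure about.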