I've created a database with the requirements and I've tested it.
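
For anyone who wants to reproduce this, here is a minimal sketch of a comparable setup. Only the `id` columns and the table names come from the queries; the `name` column and the row counts (roughly 30,000 users and 3,000 lookup ids) are assumptions for illustration, not the exact schema used for the test.

```sql
-- Hypothetical schema: only the id columns are known from the queries;
-- the name column and the row counts are assumptions for illustration.
CREATE TABLE users (
    id   integer PRIMARY KEY,   -- creates the index users_pkey
    name text
);

CREATE TABLE lookuptable (
    id integer PRIMARY KEY      -- creates the index lookuptable_pkey
);

-- About 30,000 users and 3,000 lookup ids.
INSERT INTO users (id, name)
SELECT g, 'user' || g
FROM generate_series(1, 30000) AS g;

INSERT INTO lookuptable (id)
SELECT g
FROM generate_series(1, 3000) AS g;

-- Refresh planner statistics so the row estimates are meaningful.
ANALYZE users;
ANALYZE lookuptable;
```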

In terms of timing there is really no difference, but that may just be down to my sandbox test environment.
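
To check measured run times rather than planner estimates, something like the following could be used. `EXPLAIN (ANALYZE, BUFFERS)` actually executes the statement and reports real timings and buffer usage; this is just a sketch against the `users` and `lookuptable` tables, and the numbers will depend heavily on the environment.

```sql
-- EXPLAIN ANALYZE runs the query for real and reports measured timings,
-- so repeat it a few times to smooth out caching effects.
EXPLAIN (ANALYZE, BUFFERS)
SELECT *
FROM users
WHERE id IN (SELECT id FROM lookuptable);
```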

Anyway, I've run EXPLAIN on these three queries:

1- select * from users where id in (1,2,3,4,5,6,7,8,9,10,..3000)

cost: "Index Scan using users_pkey on users (cost=4.04..1274.75 rows=3000 width=11)"

" Index Cond: (id = ANY ('{1,2,3,4,5,6,7,8,9,10 (...)"

2- SELECT * FROM users AS u WHERE EXISTS (SELECT 1 FROM lookuptable AS l WHERE u.id = l.id); <- Note that I've removed the 'distinct'; it's useless here.

cost: "Merge Semi Join (cost=103.22..364.35 rows=3000 width=11)"

" Merge Cond: (u.id = l.id)"

" -> Index Scan using users_pkey on users u (cost=0.29..952.68 rows=30026 width=11)"

" -> Index Only Scan using lookuptable_pkey on lookuptable l (cost=0.28..121.28 rows=3000 width=4)"

3- Select * from users where id IN (select id from lookuptable)

cost: "Merge Semi Join (cost=103.22..364.35 rows=3000 width=11)"

" Merge Cond: (users.id = lookuptable.id)"

" -> Index Scan using users_pkey on users (cost=0.29..952.68 rows=30026 width=11)"

" -> Index Only Scan using lookuptable_pkey on lookuptable (cost=0.28..121.28 rows=3000 width=4)"

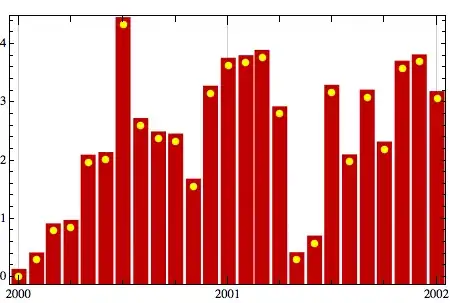

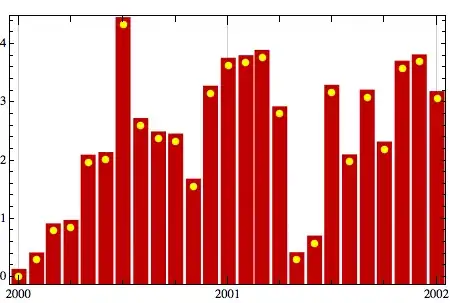

The explain graphic of the last two queries:

Anyway, as some of the comments above point out, you also have to add the cost of populating the lookuptable to the cost of these queries, plus the fact that you have to split the "querying" into several separate executions, which may cause "transactional problems".
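
To make that extra work concrete, the lookup-table variants need something like the following on every call. This is only an untested sketch: the temporary table name `lookup_ids` and the sample ids are made up, and wrapping the steps in a single transaction is just one possible way to limit the transactional issues.

```sql
BEGIN;

-- Hypothetical temporary table: private to this session and
-- dropped automatically when the transaction commits.
CREATE TEMPORARY TABLE lookup_ids (
    id integer PRIMARY KEY
) ON COMMIT DROP;

-- Placeholder ids; in practice these would come from the application.
INSERT INTO lookup_ids (id)
VALUES (1), (2), (3);

-- Same shape as the EXISTS query, but against the temporary table.
SELECT u.*
FROM users AS u
WHERE EXISTS (SELECT 1 FROM lookup_ids AS l WHERE l.id = u.id);

COMMIT;
```

That is three extra statements plus a transaction around a query that the plain `IN (...)` list handles in a single statement.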

I'll use the first query.