Problem: I have records with a start and end date for an intervention, and I want to merge rows according to the following rule:

For each ID, if an intervention begins within one year of the end of the previous intervention, merge the two rows so that start_date is the earlier of the two start dates and end_date is the later of the two end dates. I also want to keep track of the intervention IDs of any rows that are merged.

There can be five scenarios:

1. Two rows have the same start date, but different end dates.

       Start date....End date
       Start date.........End date

2. The period between row 2's start and end date lies within the period of row 1's start and end date.

       Start date...................End date
       .......Start date...End date

3. Row 2's intervention starts within Row 1's intervention period but ends later.

       Start date.....End date
       .....Start date.............End date

4. Row 2 starts within one year of the end of Row 1.

       Start date....End date
       ......................|....<= 1 year....|Start date...End date

5. Row 2 starts over one year after the end of Row 1.

       Start date...End date
       .....................|........ > 1 year..........|Start date...End date

I want to merge rows in cases 1 to 4 but not 5.
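To make the rule concrete, here is a minimal sketch of the pairwise check, assuming rows are sorted by start date within an ID and that "within one year" means at most 365 days after the latest end date seen so far (inclusive). `should_merge` and `gap_days` are hypothetical names used only for illustration.

```r
# Hypothetical helper: TRUE when the next row belongs to the current merged period.
# Assumption: "within one year" = at most 365 days after the latest end date so far.
should_merge <- function(current_end, next_start, gap_days = 365) {
  next_start <= current_end + gap_days
}

# Cases 1 to 3: the next row starts before the current period has ended.
should_merge(as.Date("2013-06-01"), as.Date("2013-01-01"))  # TRUE

# Case 4: the next row starts within one year of the current end.
should_merge(as.Date("2013-09-01"), as.Date("2013-12-01"))  # TRUE

# Case 5: the next row starts more than one year after the current end.
should_merge(as.Date("2014-01-01"), as.Date("2015-06-01"))  # FALSE
```

Cases 1 to 3 all reduce to the same comparison as case 4, because a start date on or before the current end is trivially within the one-year window.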

Data:

    library(data.table)

    sample_data <- data.table(id = c(rep(11, 3), rep(21, 4)),
                              start_date = as.Date(c("2013-01-01", "2013-01-01", "2013-02-01", "2013-01-01", "2013-02-01", "2013-12-01", "2015-06-01")),
                              end_date = as.Date(c("2013-06-01", "2013-07-01", "2013-05-01", "2013-07-01", "2013-09-01", "2014-01-01", "2015-12-01")),
                              intervention_id = as.character(1:7),
                              all_ids = as.character(1:7))

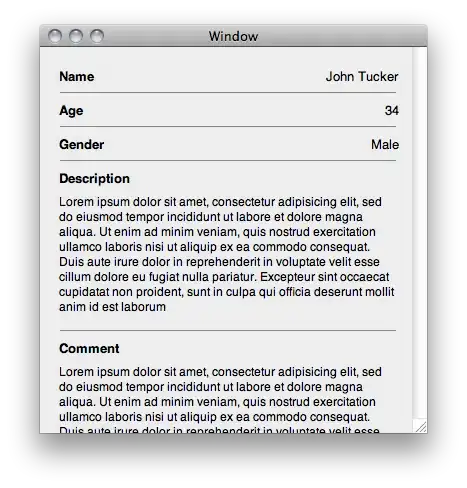

    > sample_data
       id start_date   end_date intervention_id all_ids
    1: 11 2013-01-01 2013-06-01               1       1
    2: 11 2013-01-01 2013-07-01               2       2
    3: 11 2013-02-01 2013-05-01               3       3
    4: 21 2013-01-01 2013-07-01               4       4
    5: 21 2013-02-01 2013-09-01               5       5
    6: 21 2013-12-01 2014-01-01               6       6
    7: 21 2015-06-01 2015-12-01               7       7

The final result should look like:

    > merged_data
       id start_date   end_date intervention_id all_ids
    1: 11 2013-01-01 2013-07-01               1 1, 2, 3
    2: 21 2013-01-01 2014-01-01               4 4, 5, 6
    3: 21 2015-06-01 2015-12-01               7       7

I'm not sure whether the all_ids column is the best way to keep track of the intervention IDs, so I'm open to other ideas. (The intervention IDs don't need to be in order in the all_ids column.)
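One alternative to a comma-separated string would be a data.table list column, sketched below on toy data. The `period` column is a hypothetical marker for rows that belong to the same merged period; it is an assumption for illustration and not part of my data.

```r
library(data.table)

# Toy data; `period` is a hypothetical column marking rows that were merged together.
dt <- data.table(
  id = c(11, 11, 11, 21),
  period = c(1, 1, 1, 1),
  intervention_id = c("1", "2", "3", "7")
)

# Wrapping the vector in list() stores one character vector per group.
ids_by_period <- dt[, .(all_ids = list(intervention_id)), by = .(id, period)]

# Each element is a character vector, so no string parsing is needed later.
ids_by_period[, n_merged := lengths(all_ids)]
ids_by_period[, has_id_2 := sapply(all_ids, function(x) "2" %in% x)]
```

The trade-off is that a list column is easier to query but harder to write out to a flat file than a pasted string.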

It doesn't matter what the value of the intervention_id column is where rows have been merged.
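For reference, the expected result above can be reproduced with a running-maximum grouping. This is only a sketch of one possible approach. It assumes that "one year" means 365 days, and the names `gap_days`, `running_end`, `prev_end` and `period` are hypothetical helpers introduced here.

```r
library(data.table)

sample_data <- data.table(
  id = c(rep(11, 3), rep(21, 4)),
  start_date = as.Date(c("2013-01-01", "2013-01-01", "2013-02-01", "2013-01-01",
                         "2013-02-01", "2013-12-01", "2015-06-01")),
  end_date = as.Date(c("2013-06-01", "2013-07-01", "2013-05-01", "2013-07-01",
                       "2013-09-01", "2014-01-01", "2015-12-01")),
  intervention_id = as.character(1:7)
)

gap_days <- 365  # assumption: "within one year" means at most 365 days

# Sort so that each row only needs to be compared with the rows before it.
setorder(sample_data, id, start_date, end_date)

# Latest end date seen so far within each id (kept numeric because cummax
# is not defined for Date objects).
sample_data[, running_end := cummax(as.numeric(end_date)), by = id]
sample_data[, prev_end := shift(running_end), by = id]

# A new period starts at the first row of an id, or when the gap exceeds the window.
sample_data[, period := cumsum(is.na(prev_end) |
                                 as.numeric(start_date) > prev_end + gap_days),
            by = id]

merged_data <- sample_data[, .(
  start_date = min(start_date),
  end_date = max(end_date),
  intervention_id = intervention_id[1],
  all_ids = paste(intervention_id, collapse = ", ")
), by = .(id, period)]
merged_data[, period := NULL]
```

Comparing against the running maximum rather than the previous row's end date is what covers case 2, where a later row ends before an earlier one.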

What I tried:

I started off by writing a function to deal with only those cases where the start date is the same. It's a very non-R, non-data.table way of doing it and therefore very inefficient.

    mergestart <- function(unmerged) {
      n <- nrow(unmerged)
      mini_merged <- data.table(id = double(n),
                                start_date = as.Date(NA),
                                end_date = as.Date(NA),
                                intervention_id = character(n),
                                all_ids = character(n))

      merge_a <- function(unmerged, un_i, merged, m_i, no_of_records) {
        merged[m_i] <- unmerged[un_i]
        un_i <- un_i + 1
        while (un_i <= no_of_records) {
          if (merged[m_i]$start_date == unmerged[un_i]$start_date) {
            merged[m_i]$end_date <- max(merged[m_i]$end_date, unmerged[un_i]$end_date)
            merged[m_i]$all_ids <- paste0(merged[m_i]$all_ids, ",", unmerged[un_i]$intervention_id)
            un_i <- un_i + 1
          } else {
            m_i <- m_i + 1
            merged[m_i] <- unmerged[un_i]
            un_i <- un_i + 1
            merge_a(unmerged, un_i, merged, m_i, (no_of_records - un_i))
          }
        }
        return(merged)
      }

      mini_merged <- merge_a(unmerged, 1, mini_merged, 1, n)
      return(copy(mini_merged[id != 0]))
    }

Using this function on just one id gives:

    > mergestart(sample_data[id == 11])
       id start_date   end_date intervention_id all_ids
    1: 11 2013-01-01 2013-07-01               1     1,2
    2: 11 2013-02-01 2013-05-01               3       3

To use the function on the whole dataset:

    n <- nrow(sample_data)
    all_merged <- data.table(id = double(n),
                             start_date = as.Date(NA),
                             end_date = as.Date(NA),
                             intervention_id = character(n),
                             all_ids = character(n))

    start_i <- 1
    for (i in unique(sample_data$id)) {
      id_merged <- mergestart(sample_data[id == i])
      end_i <- start_i + nrow(id_merged) - 1
      all_merged[start_i:end_i] <- copy(id_merged)
      # Continue after the last row just written, so it is not overwritten
      start_i <- end_i + 1
    }
    all_merged <- all_merged[id != 0]

    > all_merged
       id start_date   end_date intervention_id all_ids
    1: 11 2013-01-01 2013-07-01               1     1,2
    2: 11 2013-02-01 2013-05-01               3       3
    3: 21 2013-01-01 2013-07-01               4       4
    4: 21 2013-02-01 2013-09-01               5       5
    5: 21 2013-12-01 2014-01-01               6       6
    6: 21 2015-06-01 2015-12-01               7       7

I also looked at rolling joins, but I still can't see how to use them in this situation.

This answer https://stackoverflow.com/a/48747399/6170115 looked promising, but I don't know how to integrate all the other conditions and track the intervention IDs with this method.

Can anyone point me in the right direction?