I know how to calculate the Euclidean distance between points in an array using scipy.spatial.distance.cdist, similar to the answers to this question: Calculate Distances Between One Point in Matrix From All Other Points

However, I would like to make the calculation assuming cyclic (periodic) boundary conditions, so that, for example, point [0,0] is a distance of 1 from point [0,n-1] rather than n-1. (I will then make a mask of all points within a threshold distance of my target cells, but that is not central to the question.)
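To pin down the metric I have in mind, here is a minimal sketch for a single pair of points, assuming integer indices on an n x n grid. The name periodic_distance is made up for this illustration, and it only shows the definition for one pair rather than the full all-points search.

```python
import numpy as np

def periodic_distance(p, q, n):
    """Euclidean distance between grid points p and q on an n x n periodic domain."""
    d = np.abs(np.asarray(p) - np.asarray(q))  # plain per-axis separation
    d = np.minimum(d, n - d)                   # going round the other way may be shorter
    return np.sqrt((d ** 2).sum(axis=-1))

n = 5
print(periodic_distance([0, 0], [0, n - 1], n))  # 1.0, not n-1
```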

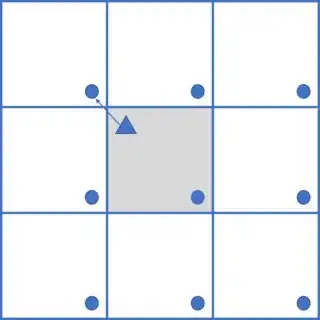

The only way I can think of is to repeat the calculation 9 times, with the domain indices having n added/subtracted in the x, the y, and then both the x and y directions, then stack the results and find the minimum across the 9 slices. To illustrate the need for 9 repetitions, I put together a simple schematic with just one J-point, marked with a circle. It shows an example where the cell marked by the triangle has its nearest neighbour in the copy of the domain shifted to the top-left.

This is the code I developed for this using cdist:

    import numpy as np
    from scipy import spatial

    n=5 # size of 2D box (n X n points)
    np.random.seed(1) # to make reproducible
    a=np.random.uniform(size=(n,n))
    i=np.argwhere(a>-1) # all points, for each loc we want distance to nearest J
    j=np.argwhere(a>0.85) # set of J locations to find distance to.

    # threshold distance; this will be used in the KDtree soln
    maxdist=2.0

    def dist_v1(i,j):
        dist=[]
        # 3x3 search required for periodic boundaries.
        for xoff in [-n,0,n]:
            for yoff in [-n,0,n]:
                jo=j.copy()
                jo[:,0]-=xoff
                jo[:,1]-=yoff
                # distance to the nearest J point in this shifted copy
                dist.append(np.amin(spatial.distance.cdist(i,jo,metric='euclidean'),1))
        # minimum over the 9 shifted copies
        dist=np.amin(np.stack(dist),0).reshape([n,n])
        return(dist)

This works, and produces, for example:

    print(dist_v1(i,j))

    [[1.41421356 1.         1.41421356 1.41421356 1.        ]
     [2.23606798 2.         1.41421356 1.         1.41421356]
     [2.         2.         1.         0.         1.        ]
     [1.41421356 1.         1.41421356 1.         1.        ]
     [1.         0.         1.         1.         0.        ]]

The zeros obviously mark the J points, and the distances are correct (this edit corrects my earlier attempt, which was incorrect).

Note that if you change the last two lines to stack the raw distances and then take a single minimum, like this:

    def dist_v2(i,j):
        dist=[]
        # 3x3 search required for periodic boundaries.
        for xoff in [-n,0,n]:
            for yoff in [-n,0,n]:
                jo=j.copy()
                jo[:,0]-=xoff
                jo[:,1]-=yoff
                dist.append(spatial.distance.cdist(i,jo,metric='euclidean'))
        dist=np.amin(np.dstack(dist),(1,2)).reshape([n,n])
        return(dist)

it is faster for small n (<10) but considerably slower for larger arrays (n>10).

Either way, it is slow for my large arrays (n=500, with around 70 J points): this search takes up about 99% of the calculation time, and the loops make it a bit ugly too. Is there a better/faster way?

The other options I thought of were:

- scipy.spatial.KDTree.query_ball_point

With further searching I found the function scipy.spatial.KDTree.query_ball_point, which directly calculates the coordinates within a radius of my J-points. It doesn't seem to have any facility to use periodic boundaries, though, so I presume one would still need a 3x3 loop, stack the results and then use amin as I do above. I'm therefore not sure this will be any faster.

I coded up a solution using this function WITHOUT worrying about the periodic boundary conditions (i.e. this doesn't answer my question):

    def dist_v3(n,j):
        x, y = np.mgrid[0:n, 0:n]
        points = np.c_[x.ravel(), y.ravel()]
        tree=spatial.KDTree(points)
        mask=np.zeros([n,n])
        for results in tree.query_ball_point((j), maxdist):
            mask[points[results][:,0],points[results][:,1]]=1
        return(mask)

Maybe I'm not using it in the most efficient way, but this is already as slow as my cdist-based solutions, even without the periodic boundaries. Adding the mask step to the two cdist solutions (i.e. replacing return(dist) with return(np.where(dist<=maxdist,1,0)) in those functions) and then using timeit, I get the following timings for n=100:

    from timeit import timeit

    print("cdist v1:",timeit(lambda: dist_v1(i,j), number=3)*100)
    print("cdist v2:",timeit(lambda: dist_v2(i,j), number=3)*100)
    print("KDtree:", timeit(lambda: dist_v3(n,j), number=3)*100)

    cdist v1: 181.80927299981704
    cdist v2: 554.8205785999016
    KDtree: 605.119637199823

- Make an array of relative coordinates for points within a set distance of [0,0], then manually loop over the J points, setting up a mask with this list of relative points. This has the advantage that the "relative distance" calculation is only performed once (my J points change each timestep), but I suspect the looping will be very slow.

- Precalculate a set of masks for EVERY point in the 2D domain, so that in each timestep of the model integration I just pick out the masks for the J points and apply them. This would use a LOT of memory (proportional to n^4) and is perhaps still slow, as you need to loop over the J points to combine the masks.
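On the KD-tree option: as far as I can tell, scipy.spatial.cKDTree (and KDTree in recent SciPy releases) accepts a boxsize argument that makes the tree periodic, provided all coordinates lie in [0, boxsize). The sketch below assumes that behaviour and builds the tree on the J points instead of the grid. The function name dist_kdtree_periodic is hypothetical, and the example J points are the ones implied by the zeros in the n=5 output above.

```python
import numpy as np
from scipy import spatial

def dist_kdtree_periodic(n, j):
    """Distance from every cell of an n x n periodic grid to its nearest J point."""
    x, y = np.mgrid[0:n, 0:n]
    points = np.c_[x.ravel(), y.ravel()]
    # Assumption: boxsize=n makes both axes periodic with period n;
    # all coordinates must lie in [0, n).
    tree = spatial.cKDTree(j, boxsize=n)
    dist, _ = tree.query(points, k=1)  # nearest J point for each grid cell
    return dist.reshape(n, n)

j_example = np.array([[2, 3], [4, 1], [4, 4]])  # J points for illustration
print(dist_kdtree_periodic(5, j_example))
```

The mask would then follow from np.where(dist<=maxdist,1,0), as with the cdist versions. I have not timed this against them.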
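The relative-coordinate option could be sketched roughly as follows, assuming integer grid indices and using modular arithmetic for the wrap-around. The name mask_from_stencil is made up for this illustration. Broadcasting every J point against every offset avoids the explicit loop over J points.

```python
import numpy as np

def mask_from_stencil(n, j, maxdist):
    """Mark every cell within maxdist of any J point on an n x n periodic grid."""
    r = int(np.floor(maxdist))
    # Relative offsets within maxdist of [0,0]; these depend only on maxdist,
    # so they could be computed once and reused each timestep.
    dx, dy = np.mgrid[-r:r + 1, -r:r + 1]
    keep = dx**2 + dy**2 <= maxdist**2
    offsets = np.c_[dx[keep], dy[keep]]  # shape (n_offsets, 2)
    # Every J point plus every offset, wrapped back into the domain.
    cells = (j[:, None, :] + offsets[None, :, :]) % n
    mask = np.zeros((n, n), dtype=int)
    mask[cells[..., 0], cells[..., 1]] = 1
    return mask

j_example = np.array([[2, 3], [4, 1], [4, 4]])  # J points for illustration
print(mask_from_stencil(5, j_example, 2.0))
```

This yields only the mask, not the distances themselves, which is enough if the threshold test is the end goal.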