In my project, I'm using Unity3D C# scripts together with C++ via DllImport. The scene has two cubes (Cube and Cube2): the texture of one cube shows the live video from my laptop camera via Unity's webCamTexture.Play(), and the texture of the other should show the video after it has been processed by an external C++ function, ProcessImage().

Code Context:

In C++, I define:

    struct Color32
    {
        unsigned char r;
        unsigned char g;
        unsigned char b;
        unsigned char a;
    };

and the function is

    extern "C"
    {
        Color32* ProcessImage(Color32* raw, int width, int height);
    }

    ...

    Color32* ProcessImage(Color32* raw, int width, int height)
    {
        for (int i = 0; i < width * height; i++)
        {
            raw[i].r = raw[i].r - 2;
            raw[i].g = raw[i].g - 2;
            raw[i].b = raw[i].b - 2;
            raw[i].a = raw[i].a - 2;
        }
        return raw;
    }
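A side note on the loop above: the channels are unsigned char, so subtracting 2 from a value below 2 wraps around to 254 or 255 instead of stopping at 0. The function also edits raw in place, so the returned pointer carries no extra information. A minimal sketch of a variant built on those two observations follows; ProcessImageInPlace and DarkenChannel are hypothetical names that are not part of the original plugin, and any platform-specific export decoration is left out.

```cpp
#include <cstdint>

// Same layout as in the question: four 8-bit channels, 4 bytes per pixel.
struct Color32
{
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
    std::uint8_t a;
};

// Subtract `amount` from a channel, stopping at 0 instead of wrapping around.
static inline std::uint8_t DarkenChannel(std::uint8_t value, std::uint8_t amount)
{
    return value > amount ? static_cast<std::uint8_t>(value - amount)
                          : static_cast<std::uint8_t>(0);
}

extern "C"
{
    // Hypothetical variant of ProcessImage: modifies the caller's buffer in
    // place and returns nothing, so no array has to be handed back to C#.
    void ProcessImageInPlace(Color32* raw, int width, int height)
    {
        if (raw == nullptr || width <= 0 || height <= 0)
            return;

        const long long count = static_cast<long long>(width) * height;
        for (long long i = 0; i < count; ++i)
        {
            raw[i].r = DarkenChannel(raw[i].r, 2);
            raw[i].g = DarkenChannel(raw[i].g, 2);
            raw[i].b = DarkenChannel(raw[i].b, 2);
            raw[i].a = DarkenChannel(raw[i].a, 2);
        }
    }
}
```

With a void return, the caller keeps ownership of the buffer and reads the processed pixels from the same array it passed in.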

C#:

Declaring the fields and importing the function:

    public GameObject cube;
    public GameObject cube2;
    private Texture2D tx2D;
    private WebCamTexture webCamTexture;

    [DllImport("test22")] /* the name of the plugin is test22 */
    private static extern Color32[] ProcessImage(Color32[] rawImg, int width, int height);

Find the camera and set the textures of Cube and Cube2:

    void Start()
    {
        WebCamDevice[] wcd = WebCamTexture.devices;
        if (wcd.Length == 0)
        {
            print("Cannot find a camera");
            Application.Quit();
        }
        else
        {
            webCamTexture = new WebCamTexture(wcd[0].name);
            cube.GetComponent<Renderer>().material.mainTexture = webCamTexture;
            tx2D = new Texture2D(webCamTexture.width, webCamTexture.height);
            cube2.GetComponent<Renderer>().material.mainTexture = tx2D;
            webCamTexture.Play();
        }
    }

Send the data to the external C++ function through DllImport and receive the processed data in Color32[] a. Finally, I use Unity's SetPixels32 to update the tx2D (Cube2) texture:

    void Update()
    {
        Color32[] rawImg = webCamTexture.GetPixels32();
        System.Array.Reverse(rawImg);
        Debug.Log("Test1");
        Color32[] a = ProcessImage(rawImg, webCamTexture.width, webCamTexture.height);
        Debug.Log("Test2");
        tx2D.SetPixels32(a);
        tx2D.Apply();
    }

Results:

The result is that only the texture of Cube shows the live video; the texture of Cube2 fails to show the processed data.

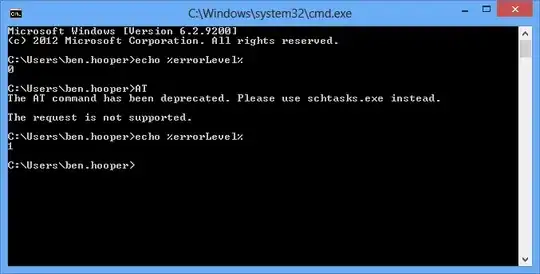

Error:

    SetPixels32 called with invalid number of pixels in the array
    UnityEngine.Texture2D:SetPixels32(Color32[])
    Webcam:Update() (at Assets/Scripts/Webcam.cs:45)

I don't understand why the number of pixels in the array is invalid when I pass array a to SetPixels32.

Any ideas?

UPDATE (10 Oct. 2018)

Thanks to @Programmer, it now works by pinning the memory.

By the way, I found a small problem related to the Unity engine. During roughly the first second after the camera starts (possibly several frames), webCamTexture.width and webCamTexture.height always report 16x16, even when a bigger image such as 1280x720 was requested; after that they return the correct size. So, following this post, I delay the call to Process() in Update() by 2 seconds and reset the Texture2D size inside Process(). This works fine:

    float delaytime = 0f;

    void Update()
    {
        delaytime = delaytime + Time.deltaTime;
        Debug.Log(webCamTexture.width);
        Debug.Log(webCamTexture.height);
        // Wait 2 seconds so the camera reports its real size before processing.
        if (delaytime >= 2f)
            Process();
    }

    unsafe void Process()
    {
        ...
        // Recreate the texture if its size no longer matches the camera's.
        if (Test.width != webCamTexture.width || Test.height != webCamTexture.height)
        {
            Test = new Texture2D(webCamTexture.width, webCamTexture.height, TextureFormat.ARGB32, false, false);
            cube2.GetComponent<Renderer>().material.mainTexture = Test;
            Debug.Log("Fixed Texture dimension");
        }
        ...
    }
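A size mismatch of this kind can also be caught on the native side. The following is only a sketch, assuming the C# caller passes the array length as an extra argument (for example rawImg.Length); ProcessImageChecked and its length parameter are hypothetical and not part of the original plugin. It leaves the buffer untouched unless width * height equals the number of pixels actually supplied.

```cpp
#include <cstdint>

// Same layout as in the question: four 8-bit channels, 4 bytes per pixel.
struct Color32
{
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
    std::uint8_t a;
};

extern "C"
{
    // Hypothetical guarded entry point. `length` is the element count of the
    // array the caller really passed. Returns 1 if the image was processed,
    // 0 if the arguments were rejected and the buffer was left unchanged.
    int ProcessImageChecked(Color32* raw, int length, int width, int height)
    {
        if (raw == nullptr || length <= 0 || width <= 0 || height <= 0)
            return 0;

        // Reject a width/height pair that does not describe this buffer.
        if (static_cast<long long>(width) * height != length)
            return 0;

        for (int i = 0; i < length; ++i)
        {
            // Same darkening as in the question, clamped at 0 (red channel
            // only, to keep the sketch short).
            raw[i].r = raw[i].r > 2 ? static_cast<std::uint8_t>(raw[i].r - 2)
                                    : static_cast<std::uint8_t>(0);
        }
        return 1;
    }
}
```

The return code gives the C# side a cheap way to skip SetPixels32 for a frame whose dimensions are not yet reliable.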

The code has already been uploaded, and you can view it on this website [link: https://codeshare.io/G6gkjz] – Shing Ming Oct 07 '18 at 09:38