I have the following code. `df3` is created using the following code. I want to get the minimum value of `distance_n`, and also the entire row that contains that minimum value.

// This gives just the minimum value, but I want the entire row containing that minimum value
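One way to keep the whole row with the DataFrame API, without going through SQL, is sketched below. It is a minimal sketch that assumes Spark 2.1 or later (for `asc_nulls_last`) and that `df3` has the columns `latitude`, `longitude`, `speed` and `distance_n`. The object and method names and the sample rows are hypothetical stand-ins, because the code that builds the real `df3` is not shown. `rowsWithMinDistance` computes the minimum and then filters on it, and `oneRowWithMinDistance` sorts and keeps a single row.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, min}

// Hypothetical helper object; names are illustrative, not from the original code.
object MinRowDataFrameApi {
  // All rows whose distance_n equals the column minimum (tied rows are all kept).
  def rowsWithMinDistance(df: DataFrame): DataFrame = {
    // A global aggregate always yields one row; get(0) is null if df is empty
    // or distance_n is all null, and comparing against null matches nothing.
    val minValue = df.agg(min(col("distance_n"))).first().get(0)
    df.filter(col("distance_n") === minValue)
  }

  // At most one row with the smallest distance_n; with ties, which row is kept is not defined.
  // asc_nulls_last keeps a null distance_n from sorting to the top.
  def oneRowWithMinDistance(df: DataFrame): DataFrame =
    df.orderBy(col("distance_n").asc_nulls_last).limit(1)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("min-row-df").getOrCreate()
    import spark.implicits._

    // Hypothetical sample data standing in for df3.
    val df3 = Seq(
      (12.97, 77.59, 40.0, 3.2),
      (12.98, 77.60, 35.0, 1.1),
      (12.99, 77.61, 50.0, 2.7)
    ).toDF("latitude", "longitude", "speed", "distance_n")

    rowsWithMinDistance(df3).show()
    oneRowWithMinDistance(df3).show()
    spark.stop()
  }
}
```

Filtering on the computed minimum returns every row that ties for the smallest distance, while `orderBy(...).limit(1)` never returns more than one row.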

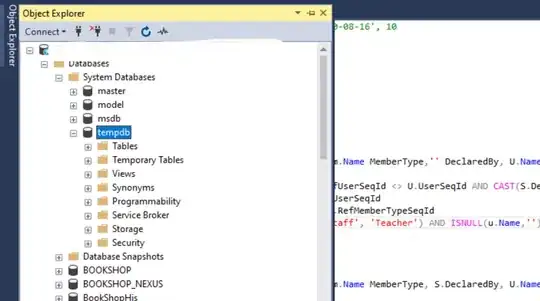

To get the entire row, I registered `df3` as a table so that I could query it with `spark.sql`.
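Querying `df3` as a table can keep the whole row if the minimum is computed in a scalar subquery and used as a filter, instead of mixing `min(...)` with plain columns in one `SELECT`. This is a minimal sketch that assumes Spark 2.0 or later (for `createOrReplaceTempView` and uncorrelated scalar subqueries in `WHERE`). The view name `table1` comes from the question; the object name and the sample rows are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical helper object; the view name is passed in (the question uses "table1").
object MinRowSql {
  // Registers df as a temporary view, then keeps every row whose distance_n
  // equals the minimum computed by an uncorrelated scalar subquery (ties included).
  def rowsWithMinDistance(spark: SparkSession, df: DataFrame, view: String): DataFrame = {
    df.createOrReplaceTempView(view)
    spark.sql(
      s"""SELECT latitude, longitude, speed, distance_n
         |FROM $view
         |WHERE distance_n = (SELECT MIN(distance_n) FROM $view)""".stripMargin)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("min-row-sql").getOrCreate()
    import spark.implicits._

    // Hypothetical sample data standing in for df3.
    val df3 = Seq(
      (12.97, 77.59, 40.0, 3.2),
      (12.98, 77.60, 35.0, 1.1),
      (12.99, 77.61, 50.0, 2.7)
    ).toDF("latitude", "longitude", "speed", "distance_n")

    rowsWithMinDistance(spark, df3, "table1").show()
    spark.stop()
  }
}
```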

If I do it like this: `spark.sql("select latitude,longitude,speed,min(distance_n) from table1").show()`

and if I run `spark.sql("select latitude,longitude,speed,min(distance_nd) from table180").show()`

// with distance_nd in place of distance_n, it throws an error
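The exact error message is not shown, so this is a hedged guess. Spark SQL normally rejects a query such as `select latitude,longitude,speed,min(distance_n) from table1`, because `latitude`, `longitude` and `speed` are neither in a `GROUP BY` nor inside an aggregate, so there is no single value to show for them next to the minimum. A "cannot resolve" error would instead suggest that `distance_nd` does not exist in `table180`. If the goal is one row from a single `SELECT`, a common idiom is to take the minimum of a struct whose first field is the distance. Spark compares structs field by field, so the smallest struct carries the rest of its row with it. The sketch assumes Spark 2.x or later. The object name, the sample rows and the field order are illustrative choices, not part of the original code.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical helper object illustrating the min(struct) idiom.
object MinRowStruct {
  // Returns one row. The struct minimum is decided by distance_n first; latitude,
  // longitude and speed then break ties, so the chosen row is deterministic.
  // Null distances are filtered out because a null field would sort first;
  // if no row qualifies, the single result row is all nulls.
  def rowWithMinDistance(spark: SparkSession, df: DataFrame, view: String): DataFrame = {
    df.createOrReplaceTempView(view)
    spark.sql(
      s"""SELECT m.latitude AS latitude, m.longitude AS longitude,
         |       m.speed AS speed, m.distance_n AS distance_n
         |FROM (
         |  SELECT min(named_struct('distance_n', distance_n, 'latitude', latitude,
         |                          'longitude', longitude, 'speed', speed)) AS m
         |  FROM $view
         |  WHERE distance_n IS NOT NULL
         |) t""".stripMargin)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("min-row-struct").getOrCreate()
    import spark.implicits._

    // Hypothetical sample data standing in for the table.
    val df = Seq(
      (12.97, 77.59, 40.0, 3.2),
      (12.98, 77.60, 35.0, 1.1),
      (12.99, 77.61, 50.0, 2.7)
    ).toDF("latitude", "longitude", "speed", "distance_n")

    rowWithMinDistance(spark, df, "table1").show()
    spark.stop()
  }
}
```

This returns exactly one row even when several rows share the smallest `distance_n`. The order of fields in the struct decides which of them wins.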

How can I resolve this and get the entire row corresponding to the minimum value?