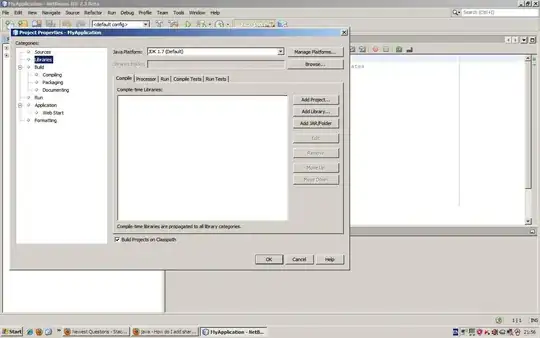

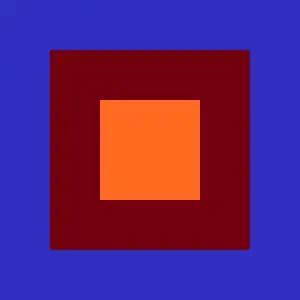

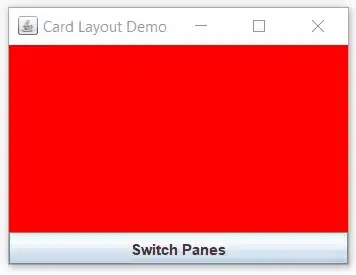

I am developing a project whose starting point is identifying the colors of certain spots. To do this, I am plotting 3D graphs of the RGB colors in these images. From these plots I have identified some prominent colors in the spots, as seen below.

Color is a matter of perception and subjective interpretation. The goal of this step is to identify the colors objectively, so that a color pattern can be found without differences in interpretation. From what I have found searching online, the L\*a\*b\* color space is recommended for this.

Can someone help me produce this graph in the L\*a\*b\* color space, or suggest a better way to classify the colors of these spots?

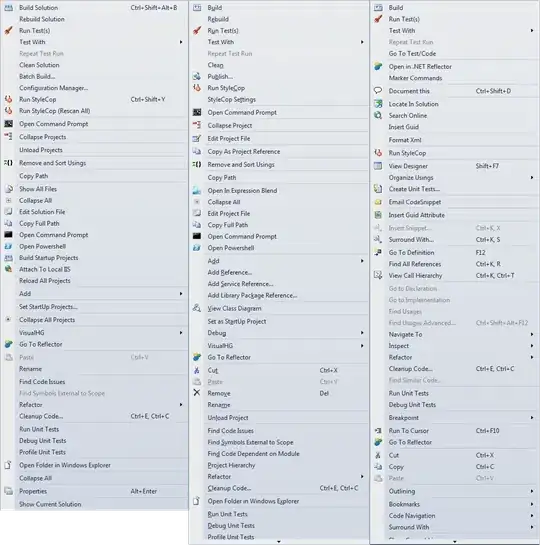

Code used to plot the 3D graph:

    import colorsys

    import matplotlib.pyplot as plt
    from PIL import Image

    # (1) Import the file to be analyzed!
    # convert("RGB") guarantees three channels, so RGBA or palette PNGs also work
    img_file = Image.open("IMD405.png").convert("RGB")
    img = img_file.load()

    # (2) Get image width & height in pixels
    xs, ys = img_file.size
    max_intensity = 100
    hues = {}

    # (3) Examine each pixel in the image file
    for x in range(xs):  # range replaces Python 2's xrange
        for y in range(ys):
            # (4) Get the RGB color of the pixel
            r, g, b = img[x, y]

            # (5) Normalize pixel color values to [0, 1]
            r /= 255.0
            g /= 255.0
            b /= 255.0

            # (6) Convert RGB color to HSV
            h, s, v = colorsys.rgb_to_hsv(r, g, b)

            # (7) Marginalize s; count how many pixels have matching (h, v),
            #     capping the count at max_intensity
            if h not in hues:
                hues[h] = {}
            if v not in hues[h]:
                hues[h][v] = 1
            elif hues[h][v] < max_intensity:
                hues[h][v] += 1

    # (8) Decompose the hues object into a set of one dimensional arrays we can use with matplotlib
    h_ = []
    v_ = []
    i = []
    colours = []
    for h in hues:
        for v in hues[h]:
            h_.append(h)
            v_.append(v)
            i.append(hues[h][v])
            # Colour each point with its own hue/value at full saturation
            r, g, b = colorsys.hsv_to_rgb(h, 1, v)
            colours.append([r, g, b])

    # (9) Plot the graph!
    fig = plt.figure()
    ax = fig.add_subplot(projection="3d")
    ax.scatter(h_, v_, i, s=5, c=colours, lw=0)
    ax.set_xlabel('Hue')
    ax.set_ylabel('Value')
    ax.set_zlabel('Intensity')
    plt.savefig('plot-IMD405.png')
    plt.show()
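
As a possible starting point for the L\*a\*b\* version, the per-pixel `colorsys.rgb_to_hsv` call in step (6) could be swapped for an sRGB to L\*a\*b\* conversion. The sketch below is only an assumption of how that might look: `srgb_to_lab` is a hypothetical helper (it is not part of `colorsys` or PIL), and it assumes the image is 8-bit sRGB with a D65 reference white.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE L*a*b* (assumes D65 white point).

    Hypothetical helper for illustration; not part of colorsys or PIL.
    """

    def linearize(c):
        # Scale to [0, 1] and undo the sRGB gamma curve
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear RGB -> CIE XYZ using the sRGB primaries (D65)
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # D65 reference white used to normalize XYZ
    xn, yn, zn = 0.95047, 1.0, 1.08883

    def f(t):
        # Cube root with a linear segment near zero, as in the CIELAB definition
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)

    lightness = 116.0 * fy - 16.0   # L*: 0 (black) to 100 (white)
    a_star = 500.0 * (fx - fy)      # a*: green (-) to red (+)
    b_star = 200.0 * (fy - fz)      # b*: blue (-) to yellow (+)
    return lightness, a_star, b_star


# Example: pure red comes out at roughly L*=53.2, a*=80.1, b*=67.2
print(srgb_to_lab(255, 0, 0))
```

The returned `L*`, `a*` and `b*` values could then serve as the three scatter axes in place of hue, value and count. If scikit-image is available, `skimage.color.rgb2lab` offers a vectorized conversion of the whole image at once.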