I want one DAG to start only after another DAG has completed. One solution is to use `ExternalTaskSensor`, and my attempt is below. The problem is that the dependent DAG gets stuck poking. I checked this answer and made sure that both DAGs run on the same schedule. My simplified code is as follows; any help would be appreciated.

Leader DAG:

```python
from airflow import DAG
from airflow.operators.bash_operator import BashOperator
from datetime import datetime, timedelta

default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'start_date': datetime(2015, 6, 1),
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

schedule = '* * * * *'  # run every minute

dag = DAG('leader_dag', default_args=default_args, catchup=False,
          schedule_interval=schedule)

# The Python variable is t1, but the task's ID inside Airflow is 'print_date'
t1 = BashOperator(
    task_id='print_date',
    bash_command='date',
    dag=dag)
```
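`ExternalTaskSensor` looks for the external task at the sensing run's own `execution_date` (unless `execution_delta` or `execution_date_fn` is given), which is why the two DAGs need matching schedules. The snippet below is a simplified, hypothetical model (not Airflow code; `latest_minutely_execution_date` is an illustrative name) of which `execution_date` the newest run of an every-minute (`'* * * * *'`) DAG with `catchup=False` receives: a run is stamped with the start of its one-minute interval and is only launched after that interval has ended.

```python
from datetime import datetime, timedelta


def latest_minutely_execution_date(now: datetime) -> datetime:
    """Hypothetical model: execution_date of the newest *finished* 1-minute interval."""
    # Truncate to the start of the current, still-open minute.
    current_interval_start = now.replace(second=0, microsecond=0)
    # The newest completed interval started one minute earlier.
    return current_interval_start - timedelta(minutes=1)


# Even if the scheduler handles the two DAGs a few seconds apart,
# both runs are stamped with the same execution_date.
leader = latest_minutely_execution_date(datetime(2018, 10, 8, 12, 30, 2))
dependent = latest_minutely_execution_date(datetime(2018, 10, 8, 12, 30, 45))
print(leader, dependent)  # 2018-10-08 12:29:00 2018-10-08 12:29:00
```

Under this model, because both `start_date` values fall on a minute boundary, the two every-minute schedules produce identical execution dates, so a schedule mismatch is unlikely to be what keeps the sensor poking here.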

The dependent DAG:

```python
from airflow import DAG
from airflow.operators.bash_operator import BashOperator
from datetime import datetime, timedelta
from airflow.operators.sensors import ExternalTaskSensor

default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'start_date': datetime(2018, 10, 8),
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

schedule = '* * * * *'  # same every-minute schedule as leader_dag

dag = DAG('dependent_dag', default_args=default_args, catchup=False,
          schedule_interval=schedule)

# Pokes leader_dag for the given external task at this run's execution_date
wait_for_task = ExternalTaskSensor(task_id='wait_for_task',
                                   external_dag_id='leader_dag',
                                   external_task_id='t1',
                                   dag=dag)

t1 = BashOperator(
    task_id='print_date',
    bash_command='date',
    dag=dag)

# print_date runs only after the sensor succeeds
t1.set_upstream(wait_for_task)
```
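`ExternalTaskSensor` compares `external_task_id` with the task's `task_id` string, not with the Python variable name. In the leader DAG the task is created as `t1 = BashOperator(task_id='print_date', ...)`, so a sensor asking for `external_task_id='t1'` is looking for a task instance that never exists. The sketch below is a simplified, hypothetical model of a single poke (the `task_instances` dict stands in for Airflow's metadata database, and `sensor_poke` is an illustrative name, not an Airflow function): the poke succeeds only if the task instance for `(dag_id, task_id, execution_date)` is in an allowed state.

```python
from datetime import datetime

# Hypothetical stand-in for Airflow's metadata DB: task instance states keyed by
# (dag_id, task_id, execution_date). leader_dag's only task has task_id 'print_date'.
task_instances = {
    ("leader_dag", "print_date", datetime(2018, 10, 8, 12, 29)): "success",
}


def sensor_poke(external_dag_id, external_task_id, execution_date,
                allowed_states=("success",)):
    """Simplified model of one ExternalTaskSensor poke: succeed only if the
    external task instance at the same execution_date is in an allowed state."""
    state = task_instances.get((external_dag_id, external_task_id, execution_date))
    # A missing task instance yields None, which is never an allowed state.
    return state in allowed_states


run_date = datetime(2018, 10, 8, 12, 29)
print(sensor_poke("leader_dag", "t1", run_date))          # False: no task has task_id 't1'
print(sensor_poke("leader_dag", "print_date", run_date))  # True
```

Under this model, the change that would let the poke succeed is `external_task_id='print_date'` in the sensor, leaving the schedules as they are.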

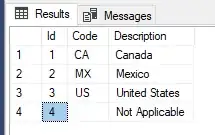

The log for the dependent DAG: