I am following the solutions from two other questions: "How can I return a JavaScript string from a WebAssembly function" and "How to return a string (or similar) from Rust in WebAssembly?"

However, when reading from memory I am not getting the desired results.

AssemblyScript file, helloWorldModule.ts:

    export function getMessageLocation(): string {
      return "Hello World";
    }

index.html:

    <script>
      fetch("helloWorldModule.wasm").then(response =>
        response.arrayBuffer()
      ).then(bytes =>
        WebAssembly.instantiate(bytes, {imports: {}})
      ).then(results => {
        var linearMemory = results.instance.exports.memory;
        var offset = results.instance.exports.getMessageLocation();
        var stringBuffer = new Uint8Array(linearMemory.buffer, offset, 11);
        let str = '';
        for (let i=0; i<stringBuffer.length; i++) {
          str += String.fromCharCode(stringBuffer[i]);
        }
        debugger;
      });
    </script>

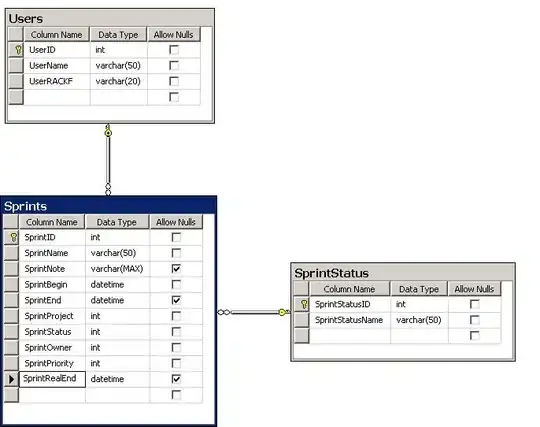

This returns an offset of 32. And finally yields a string that starts too early and has spaces between each letter of "Hello World":

However, suppose I change the array to an Int16Array and add 8 to the offset (which was 32), giving an offset of 40, like so:

    <script>
      fetch("helloWorldModule.wasm").then(response =>
        response.arrayBuffer()
      ).then(bytes =>
        WebAssembly.instantiate(bytes, {imports: {}})
      ).then(results => {
        var linearMemory = results.instance.exports.memory;
        var offset = results.instance.exports.getMessageLocation();
        var stringBuffer = new Int16Array(linearMemory.buffer, offset+8, 11);
        let str = '';
        for (let i=0; i<stringBuffer.length; i++) {
          str += String.fromCharCode(stringBuffer[i]);
        }
        debugger;
      });
    </script>

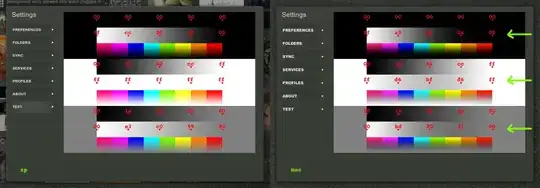

Then we get the correct result:

Why does the first version not work the way it's supposed to according to the links I provided? Why do I need to switch to an Int16Array to get rid of the space between, for example, "H" and "e"? Why do I need to add 8 bytes to the offset?

In summary, what on earth is going on here?

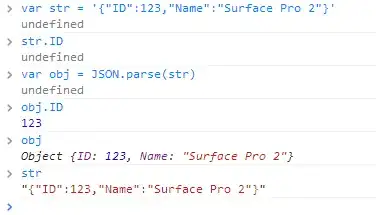

Edit: Another clue, is if I use a TextDecoder on the UInt8 array, decoding as UTF-16 looks more correct than decoding as UTF-8:
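To make that clue reproducible without the .wasm file, here is a minimal sketch. It assumes (this is my guess from the observations, not something I have confirmed) that the string sits in memory as 16-bit little-endian code units. The `buffer` below is a hypothetical stand-in for the module's memory and does not model the extra 8 bytes in front of the text.

```javascript
// Hypothetical stand-in for the module's linear memory: "Hello World"
// written as 16-bit little-endian code units (an assumption, see above).
const text = "Hello World";
const buffer = new ArrayBuffer(text.length * 2);
const view = new DataView(buffer);
for (let i = 0; i < text.length; i++) {
  // true = little-endian, so each ASCII letter is followed by a 0 byte
  view.setUint16(i * 2, text.charCodeAt(i), true);
}

// View the same memory one byte at a time, as in my first attempt.
const bytes = new Uint8Array(buffer);

// Decode the identical bytes two ways.
const asUtf8 = new TextDecoder("utf-8").decode(bytes);
const asUtf16 = new TextDecoder("utf-16le").decode(bytes);

// JSON.stringify makes the NUL characters between the letters visible.
console.log(JSON.stringify(asUtf8));
console.log(JSON.stringify(asUtf16));
```

With this stand-in buffer, the UTF-8 decode has a NUL character after every letter, which looks like the "spaces" in my first screenshot, while the UTF-16 decode is clean.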