I stumbled upon this question and am trying to perform a perspective transformation with Python Pillow.

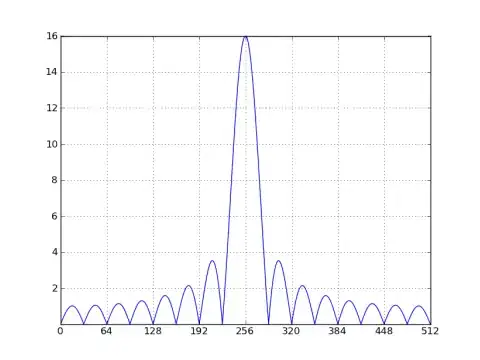

This is specifically what I am trying to do and what the result looks like:

And this is the code that I used to try it:

    from PIL import Image
    import numpy

    # function copy-pasted from https://stackoverflow.com/a/14178717/744230
    def find_coeffs(pa, pb):
        # build two rows of the 8x8 linear system per point pair
        matrix = []
        for p1, p2 in zip(pa, pb):
            matrix.append([p1[0], p1[1], 1, 0, 0, 0, -p2[0]*p1[0], -p2[0]*p1[1]])
            matrix.append([0, 0, 0, p1[0], p1[1], 1, -p2[1]*p1[0], -p2[1]*p1[1]])

        A = numpy.matrix(matrix, dtype=float)
        B = numpy.array(pb).reshape(8)

        # least-squares solution for the 8 perspective coefficients
        res = numpy.dot(numpy.linalg.inv(A.T * A) * A.T, B)
        return numpy.array(res).reshape(8)

    # test.png is a 256x256 white square
    img = Image.open("./images/test.png")

    coeffs = find_coeffs(
        [(0, 0), (256, 0), (256, 256), (0, 256)],
        [(15, 115), (140, 20), (140, 340), (15, 250)])

    img.transform((300, 400), Image.PERSPECTIVE, coeffs,
                  Image.BICUBIC).show()

I am not exactly sure how the transformation works, but the points seem to move in roughly the opposite direction. For example, I need to use (-15, 115) to make point A move to the right, and even then it moves by only 5 pixels rather than 15.

How can I determine the exact coordinates of the target points to skew the image properly?
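
One way to probe this without opening an image is to apply the eight coefficients to a point by hand. The sketch below assumes the Pillow documentation's description of `Image.PERSPECTIVE`: for each output pixel `(x, y)`, the value is sampled from the input at `((a*x + b*y + c) / (g*x + h*y + 1), (d*x + e*y + f) / (g*x + h*y + 1))`. The helper `apply_coeffs` and the names `source_corners`, `target_corners`, `coeffs_as_posted` and `coeffs_swapped` are made up for illustration, and `find_coeffs` is rewritten here with `numpy.linalg.solve` rather than the copy-pasted version.

```python
import numpy as np

def find_coeffs(pa, pb):
    """Solve for the 8 coefficients that send each point in pa to its partner in pb."""
    rows = []
    for (x, y), (u, v) in zip(pa, pb):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
    A = np.array(rows, dtype=float)
    b = np.array(pb, dtype=float).reshape(8)
    return np.linalg.solve(A, b)

def apply_coeffs(coeffs, point):
    """Hypothetical helper: evaluate the perspective formula at one point."""
    a, b, c, d, e, f, g, h = coeffs
    x, y = point
    w = g * x + h * y + 1
    return ((a * x + b * y + c) / w, (d * x + e * y + f) / w)

source_corners = [(0, 0), (256, 0), (256, 256), (0, 256)]
target_corners = [(15, 115), (140, 20), (140, 340), (15, 250)]

# Same argument order as in the posted code: these coefficients send
# source corners to target corners.
coeffs_as_posted = find_coeffs(source_corners, target_corners)
print(apply_coeffs(coeffs_as_posted, (0, 0)))   # approximately (15, 115)

# Swapped order: these coefficients send target (output) corners back
# to source (input) corners.
coeffs_swapped = find_coeffs(target_corners, source_corners)
print(apply_coeffs(coeffs_swapped, (15, 115)))  # approximately (0, 0)
```

If the documented output-to-input reading is right, the coefficients passed to `img.transform` should take output coordinates to source coordinates, so `coeffs_swapped` would be the set to try. That would also be consistent with the points appearing to move the wrong way with the original argument order.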