I am building an OCR application and using OpenCV in Python for part of it. To prepare the training set, I have an image strip containing a word, and I want to pass that strip to an OpenCV routine together with its label (the actual word in the strip) so that the routine finds the contour corresponding to each character in the word.

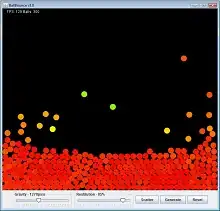

The problem is that the wrong contours are identified, as the pictures here show. The text on the left (AQR2035012) is the label, which matches the text in the image. The extracted character images that get mapped to the label characters come from incorrect contours.

Could someone with OpenCV experience point out what I am doing wrong? Thanks.

The code follows:

    import os

    import cv2
    import imutils

    # training_images_folder, imagefolder, filename and extracted_label
    # are defined elsewhere (not shown).
    image = cv2.imread(os.path.join(training_images_folder, imagefolder, filename))
    image = cv2.GaussianBlur(image, (5, 5), 0)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    thresh = cv2.threshold(gray, 0, 150, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

    contours = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    contours = contours[0] if imutils.is_cv2() else contours[1]

    letter_image_regions = []
    for contour in contours:
        (x, y, w, h) = cv2.boundingRect(contour)
        if w / h > 0.5:
            # Wide box: split it into two halves of equal width
            trim_width = int(w / 2)
            letter_image_regions.append((x, y, trim_width, h))
            letter_image_regions.append((x + trim_width, y, trim_width, h))
        else:
            letter_image_regions.append((x, y, w, h))

    # Pair each region with one character of the label and show it
    for letter_bounding_box, letter_text in zip(letter_image_regions, extracted_label):
        x, y, w, h = letter_bounding_box
        letter_image = gray[y-2:y+h+2, x-2:x+w+2]
        print(extracted_label, letter_text)
        cv2.imshow('img', letter_image)
        cv2.waitKey()
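
Two things in the code above may be worth checking, and the sketch below illustrates them on plain `(x, y, w, h)` tuples without needing OpenCV. First, `cv2.findContours` does not promise to return contours in left-to-right order, so pairing boxes with label characters through `zip` only lines up if the boxes are sorted by `x` first. Second, slicing with `y-2` or `x-2` can produce a negative index near the image border, which NumPy interprets as counting from the end. The helper names (`sort_boxes_left_to_right`, `pair_boxes_with_label`, `clamp_crop`) are hypothetical and only for illustration; this is not a confirmed fix for the problem.

```python
def sort_boxes_left_to_right(boxes):
    """Return (x, y, w, h) boxes ordered by their left edge."""
    return sorted(boxes, key=lambda box: box[0])


def pair_boxes_with_label(boxes, label):
    """Pair each box with one character of the label, in reading order.

    Returns None when the number of boxes differs from the number of
    characters, since zip() would otherwise silently drop the extras.
    """
    ordered = sort_boxes_left_to_right(boxes)
    if len(ordered) != len(label):
        return None
    return list(zip(ordered, label))


def clamp_crop(box, pad, image_width, image_height):
    """Return (x0, y0, x1, y1) for a padded crop kept inside the image."""
    x, y, w, h = box
    x0 = max(x - pad, 0)
    y0 = max(y - pad, 0)
    x1 = min(x + w + pad, image_width)
    y1 = min(y + h + pad, image_height)
    return x0, y0, x1, y1
```

With these helpers, the crop would become `gray[y0:y1, x0:x1]`, and a `None` result from `pair_boxes_with_label` would signal that the number of detected regions does not match the label length.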

And here is the original image