I have the below image of a single drivers license, I want to extract information about the drivers license, name, DOB etc. My thought process is to find a way to group them line by line, and crop out the single rectangle that contains name, license, etc for eng and ara. But I have failed woefully.

import cv2

import os

import numpy as np

scan_dir = os.path.dirname(__file__)

image_dir = os.path.join(scan_dir, '../../images')

class Loader(object):

def __init__(self, filename, gray=True):

self.filename = filename

self.gray = gray

self.image = None

def _read(self, filename):

rgba = cv2.imread(os.path.join(image_dir, filename))

if rgba is None:

raise Exception("Image not found")

if self.gray:

gray = cv2.cvtColor(rgba, cv2.COLOR_BGR2GRAY)

return gray, rgba

def __call__(self):

return self._read(self.filename)

class ImageScaler(object):

def __call__(self, gray, rgba, scale_factor = 2):

img_small_gray = cv2.resize(gray, None, fx=scale_factor, fy=scale_factor, interpolation=cv2.INTER_AREA)

img_small_rgba = cv2.resize(rgba, None, fx=scale_factor, fy=scale_factor, interpolation=cv2.INTER_AREA)

return img_small_gray, img_small_rgba

class BoxLocator(object):

def __call__(self, gray, rgba):

# image_blur = cv2.medianBlur(gray, 1)

ret, image_binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

image_not = cv2.bitwise_not(image_binary)

erode_kernel = np.ones((3, 1), np.uint8)

image_erode = cv2.erode(image_not, erode_kernel, iterations = 5)

dilate_kernel = np.ones((5,5), np.uint8)

image_dilate = cv2.dilate(image_erode, dilate_kernel, iterations=5)

kernel = np.ones((3, 3), np.uint8)

image_closed = cv2.morphologyEx(image_dilate, cv2.MORPH_CLOSE, kernel)

image_open = cv2.morphologyEx(image_closed, cv2.MORPH_OPEN, kernel)

image_not = cv2.bitwise_not(image_open)

image_not = cv2.adaptiveThreshold(image_not, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 15, -2)

image_dilate = cv2.dilate(image_not, np.ones((2, 1)), iterations=1)

image_dilate = cv2.dilate(image_dilate, np.ones((2, 10)), iterations=1)

image, contours, heirarchy = cv2.findContours(image_dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for contour in contours:

x, y, w, h = cv2.boundingRect(contour)

# if w > 30 and h > 10:

cv2.rectangle(rgba, (x, y), (x + w, y + h), (0, 0, 255), 2)

return image_dilate, rgba

def entry():

loader = Loader('sample-004.jpg')

# loader = Loader('sample-004.jpg')

gray, rgba = loader()

imageScaler = ImageScaler()

image_scaled_gray, image_scaled_rgba = imageScaler(gray, rgba, 1)

box_locator = BoxLocator()

gray, rgba = box_locator(image_scaled_gray, image_scaled_rgba)

cv2.namedWindow('Image', cv2.WINDOW_NORMAL)

cv2.namedWindow('Image2', cv2.WINDOW_NORMAL)

cv2.resizeWindow('Image', 600, 600)

cv2.resizeWindow('Image2', 600, 600)

cv2.imshow("Image2", rgba)

cv2.imshow("Image", gray)

cv2.moveWindow('Image', 0, 0)

cv2.moveWindow('Image2', 600, 0)

cv2.waitKey()

cv2.destroyAllWindows()

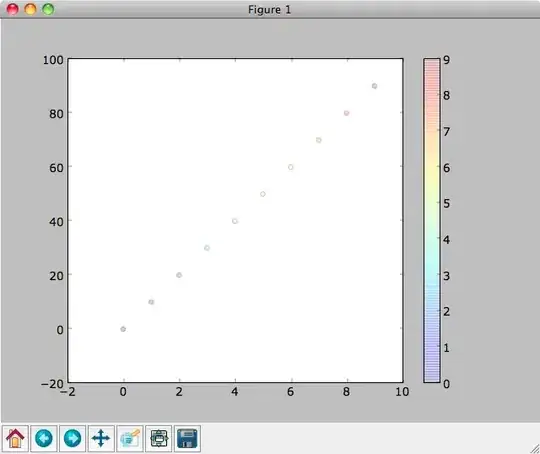

When I run the above code I get the below segmentation. Which is not close to what I want