setup

library(lme4)

library(MuMIn)

database <- mtcars

database$wt <- scale(mtcars$wt)

database$am <- factor(mtcars$am) ## <== note the difference here. It is a factor not numeric

model.1 <- glmer(vs ~ wt + am + (1|carb), database, family = binomial, na.action = "na.fail")

model.1.set <- dredge(model.1, rank = "AICc")

top.models.1 <- get.models(model.1.set,subset = delta<10)

model.1.avg <- model.avg(top.models.1)

nPoints <- 100

wt_pred_data <- data.frame(wt = seq(min(database$wt), max(database$wt), length = nPoints),

am = database$am[which.min(database$am)], #Base level for the factor

var = 'wt')

am_pred_data <- data.frame(wt = mean(database$wt),

am = unique(database$am),

var = 'am')

pred_data <- rbind(wt_pred_data, am_pred_data)

rm(wt_pred_data, am_pred_data)

pred_data$vs <- predict(model.1.avg, newdata = pred_data, re.form = NA, type = 'response')

actual answer

Adding to my previous answer, as Thomas seems interested in how one would deal with factors and also how one obtains confidence intervals using bootstraps.

Dealing with factors

First dealing with factors is not much harder than dealing with the numeric variables. The difference here is that

- When plotting effects on numeric variables, factors should be set to their base level (eg. for

am as a factor this would be a value of 1)

- When plotting the factors, one sets all numeric variables to their mean, and all other factors to their base level.

One method for getting the base level of a factor is factor[which.min(factor)] and yet another is factor(levels(factor)[0], levels(factor)). The ggeffects package uses some method similar to this.

bootstrapping

Now bootstrapping in practice ranges from being easy, to difficult. One can either use parametric, semi-parametric or non-parametric bootstraps.

Non-parametric bootstrap is the easiest to explain. One simply takes a sample of the original dataset (say 2/3, 3/4 or 4/5. Less can be used for "good" larger datasets), refits the model using this sample and then predicts for this new model. Then one repeats the process N times, and uses this to estimate standard deviation or quantiles and uses this for confidence intervals. There seems to be no implemented method in MuMIn to take care of this for us, so we seem to have to handle this ourselves.

Usually code gets quite messy, and using a function makes it much clearer. To my frustration the MuMIn seemed to have problems with this however, so below is a non-functional way of doing this. In this code I choose a sample size of 4/5, because the dataset size is rather small.

### ###

## Non-parametric bootstrapping ##

## Note: Gibberish with ##

## singular fit! ##

### ###

# 1) Create sub-sample from the dataset (eg 2/3, 3/4 or 4/5 of the original dataset)

# 2) refit the model using the new dataset and estimate model average using this dataset

# 3) estimate the predicted values using the refitted model

# 4) refit the model N times

nBoot <- 100

frac <- 4/5 #number of points in each sample. Better datasets can use less.

bootStraps <- vector('list', nBoot)

shutup <- function(x) #Useful helper function for making a function shut up

suppressMessages(suppressWarnings(force(x)))

ii <- seq_len(n <- nrow(database))

nn <- ceiling(frac * n)

nb <- nn * nBoot

samples <- sample(ii, nb, TRUE)

samples <- split(samples, (nn + seq_len(nb) - 1) %/% nn) #See unique((nn + seq_len(nb) - 1) %/% nn) # <= Gives 1 - 100.

#Not run:

# lengths(samples) # <== all of them are 26 long! ceiling(frac * n) = 26!

# Run the bootstraps

for(i in seq_len(nBoot)){

preds <- try({

# 1) Sample

d <- database[samples[[i]], ]

# 2) fit the model using the sample

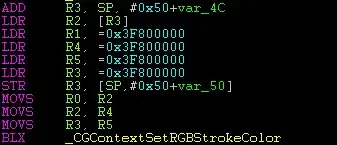

bootFit <- shutup(glmer(vs ~ wt + am + (1|carb), d, family = binomial, na.action = "na.fail"))

bootAvg <- shutup(model.avg(get.models(dredge(bootFit, rank = 'AICc'), subset = delta < 10)))

# 3) predict the data using the new model

shutup(predict(bootAvg, newdata = pred_data, re.form = NA, type = 'response'))

}, silent = TRUE)

#save the predictions for later

if(!inherits(preds, 'try-error'))

bootStraps[[i]] <- preds

# repeat N times

}

# Number of failed bootStraps:

sum(failed <- sapply(bootStraps, is.null)) #For me 44, but will be different for different datasets, samples and seeds.

bootStraps <- bootStraps[which(!failed)]

alpha <- 0.05

# 4) use the predictions for calculating bootstrapped intervals

quantiles <- apply(do.call(rbind, bootStraps), 2, quantile, probs = c(alpha / 2, 0.5, 1 - alpha / 2))

pred_data[, c('lower', 'median', 'upper')] <- t(quantiles)

pred_data[, 'type'] <- 'non-parametric'

Take note that this is of course total gibberish. The fit is singular because mtcars is not a dataset showing mixed effects, so the bootstrapping confidence intervals will be completely out of wack (the range of values are too spread out). Do also note that for such an unstable dataset as this, quite a few bootstraps fail to converge into something sensible.

For parametric bootstraps we can turn to lme4::bootMer. This function takes a single merMod model (glmer or lmer result) as well as a function to be evaluated on each parametric refit. So creating this function bootMer can take care of the rest. We are interested in the predicted values, so the function should return these. Note the similarity of the function, to the above method

### ###

## Parametric bootstraps ##

## Note: Singular fit ##

## makes this ##

## useless! ##

### ###

bootFun <- function(model){

preds <- try({

bootAvg <- shutup(model.avg(get.models(dredge(model, rank = 'AICc'), subset = delta < 10)))

shutup(predict(bootAvg, newdata = pred_data, re.form = NA, type = 'response'))

}, silent = FALSE)

if(!inherits(preds, 'try-error'))

return(preds)

return(rep(NA_real_, nrow(pred_data)))

}

boots <- bootMer(model.1, FUN = bootFun, nsim = 100, re.form = NA, type = 'parametric')

quantiles <- apply(boots$t, 2, quantile, probs = c(alpha / 2, 0.5, 1 - alpha / 2), na.rm = TRUE)

# Create data to be predicted with parametric bootstraps

pred_data_p <- pred_data

pred_data_p[, c('lower', 'median', 'upper')] <- t(quantiles)

pred_data_p[, 'type'] <- 'parametric'

pred_data <- rbind(pred_data, pred_data_p)

rm(pred_data_p)

Note again, that due to the singularity the result will be gibberish. In this case the result will be way too certain, as singularity means the model is going to be way too accurate on known data. So in practice this would make the range of every interval 0 or close enough that it makes no difference.

Finally we just need to plot the results. We can use facet_wrap to compare the parametric and non-parametric results. Note again, that for this specific dataset, it is very much gibberish to compare the confidence intervals which are both completely useless.

Note that for the factor am i use geom_point and geom_errorbar where i use geom_line and geom_ribbon for numeric values, to better represent the grouped nature of a factor compared to the continuous nature of a numeric variable

#Finaly we can plot our result:

# wt

library(ggplot2)

ggplot(pred_data[pred_data$var == 'wt', ], aes(y = vs, x = wt)) +

geom_line() +

geom_ribbon(aes(ymax = upper, ymin = lower), alpha = 0.2) +

facet_wrap(. ~ type) +

ggtitle('gibberish numeric plot (caused by singularity in fit)')

# am

ggplot(pred_data[pred_data$var == 'am', ], aes(y = vs, x = am)) +

geom_point() +

geom_errorbar(aes(ymax = upper, ymin = lower)) +

facet_wrap(. ~ type) +

ggtitle('gibberish factor plot (caused by singularity in fit)')