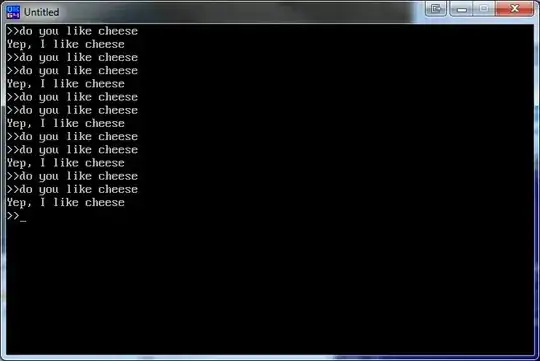

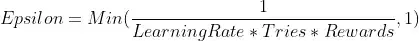

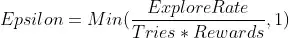

To get a dynamic epsilon, I made this formula for my application. It takes both tries and rewards into account: the more tries and rewards there are, the smaller epsilon becomes. You can tune it with the ExploreRate parameter.

Tries: Total number of tries across all strategies or all machines (the step count)

Rewards: Total reward, or the total count of successes

Some Examples:

ExploreRate = 1000, Tries = 10, Rewards = 9 -> Epsilon = 1

Because there are too few tries.

ExploreRate = 1000, Tries = 100, Rewards = 90 -> Epsilon = 0.11

Small, because we got a lot of conversions, which is a sign that the result is reliable.

ExploreRate = 1000, Tries = 100, Rewards = 9 -> Epsilon = 1

Although we had a lot of tries, the result is not reliable because the rewards are low, so we continue exploring.

ExploreRate = 100, Tries = 100, Rewards = 9 -> Epsilon = 0.11

The lower the ExploreRate, the faster the convergence.

Increasing ExploreRate makes it explore more and converge more slowly.

Decreasing ExploreRate makes it converge faster.
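The formula itself is only shown in the image, so here is a minimal sketch under an assumption: that it has the form `Epsilon = min(1, ExploreRate / (Tries * Rewards))`. That form reproduces the four examples above, but it is inferred from them and may not be exactly what the image shows. The function name `dynamic_epsilon` and the fallback to 1 when there are no tries or rewards yet are my own choices.

```python
def dynamic_epsilon(explore_rate: float, tries: int, rewards: float) -> float:
    """Assumed form: epsilon = min(1, ExploreRate / (Tries * Rewards))."""
    if tries <= 0 or rewards <= 0:
        # Assumption: with no tries or no rewards yet, always explore.
        return 1.0
    return min(1.0, explore_rate / (tries * rewards))


# Re-run the four examples from the text.
examples = [(1000, 10, 9), (1000, 100, 90), (1000, 100, 9), (100, 100, 9)]
for explore_rate, tries, rewards in examples:
    eps = dynamic_epsilon(explore_rate, tries, rewards)
    print(f"ExploreRate={explore_rate}, Tries={tries}, Rewards={rewards} -> Epsilon={eps:.2f}")
```

Under this assumed form, epsilon stays at 1 until Tries * Rewards exceeds ExploreRate, which is why a larger ExploreRate keeps exploration going longer.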

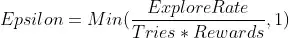

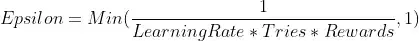

Alternatively, you can use a learning rate instead of an explore rate:

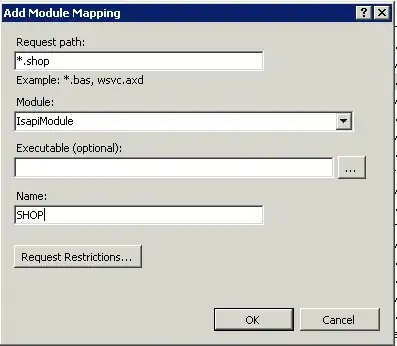

ε = 0.3 means that with probability 0.3 the output action is randomly selected from the action space, and with probability 0.7 the output action is greedily selected based on argmax(Q).
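The selection rule just described can be sketched as follows. This is an illustrative example, not code from the answer: the function name `epsilon_greedy`, the list of Q-values, and the seeded generator are all assumptions made for the demo.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: random with probability epsilon, else argmax(Q)."""
    if rng.random() < epsilon:
        # Explore: pick uniformly from the whole action space.
        return rng.randrange(len(q_values))
    # Exploit: pick the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


q = [0.1, 0.5, 0.2]        # hypothetical Q-values for three actions
rng = random.Random(0)     # seeded only to make the demo repeatable
picks = [epsilon_greedy(q, 0.3, rng) for _ in range(10_000)]
greedy_share = picks.count(1) / len(picks)
print(f"share of greedy action: {greedy_share:.2f}")
```

Note that the greedy action is chosen somewhat more often than 70% of the time, because the random branch can also land on it (here roughly 0.7 + 0.3/3).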

At the start of training, we give the model more freedom to explore by using a high ε, and then lower it over the training epochs/episodes.
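One common way to shrink ε over training is an exponential schedule with a floor. The sketch below is only an assumption about how this could look: the name `decayed_epsilon` and the values of `eps_start`, `eps_end`, and `decay_rate` are illustrative placeholders, not values taken from the answer.

```python
def decayed_epsilon(episode, eps_start=0.9, eps_end=0.05, decay_rate=0.99):
    """Exponentially decay epsilon from eps_start, never going below eps_end."""
    # All default values are hypothetical and should be tuned per problem.
    return max(eps_end, eps_start * decay_rate ** episode)


# Epsilon starts high (more exploration) and shrinks as training proceeds.
for episode in (0, 50, 100, 200, 500):
    print(f"episode {episode}: epsilon = {decayed_epsilon(episode):.3f}")
```

The floor `eps_end` keeps a small amount of exploration alive even late in training; set it to 0 if you want a purely greedy policy at the end.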