```python
import subprocess

import matplotlib.pyplot as plt
import numpy as np
from sklearn import metrics
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_graphviz
from xgboost import XGBClassifier, plot_tree

RANDOM_STATE = 100

# Shared parameters (not used below; both models are configured explicitly)
params = {
    'max_depth': 5,
    'min_samples_leaf': 5,
    'random_state': RANDOM_STATE,
}

X, y = make_classification(
    n_samples=1000000,
    n_features=5,
    random_state=RANDOM_STATE,
)

# Default test_size=0.25, so the test set has 250,000 rows
Xtrain, Xtest, ytrain, ytest = train_test_split(X, y, random_state=RANDOM_STATE)

# XGBClassifier signature at the time of writing (some arguments, e.g. silent,
# nthread and seed, have since been deprecated or removed):
# __init__(self, max_depth=3, learning_rate=0.1,
#          n_estimators=100, silent=True,
#          objective='binary:logistic', booster='gbtree',
#          n_jobs=1, nthread=None, gamma=0,
#          min_child_weight=1, max_delta_step=0,
#          subsample=1, colsample_bytree=1, colsample_bylevel=1,
#          reg_alpha=0, reg_lambda=1, scale_pos_weight=1,
#          base_score=0.5, random_state=0, seed=None, missing=None, **kwargs)

# A single boosted tree. min_samples_leaf is a scikit-learn parameter, not an
# XGBoost one (XGBoost would ignore it), so it is left out here; the closest
# XGBoost knob is min_child_weight, a minimum sum of hessians per child.
xgb_model = XGBClassifier(
    n_estimators=1,
    max_depth=3,
    random_state=RANDOM_STATE,
)

# DecisionTreeClassifier signature at the time of writing (presort and
# min_impurity_split have since been removed from scikit-learn):
# __init__(self, criterion='gini',
#          splitter='best', max_depth=None,
#          min_samples_split=2, min_samples_leaf=1,
#          min_weight_fraction_leaf=0.0, max_features=None,
#          random_state=None, max_leaf_nodes=None,
#          min_impurity_decrease=0.0, min_impurity_split=None,
#          class_weight=None, presort=False)

sk_model = DecisionTreeClassifier(
    max_depth=3,
    min_samples_leaf=5,
    random_state=RANDOM_STATE,
)

xgb_model.fit(Xtrain, ytrain)
xgb_pred = xgb_model.predict(Xtest)

sk_model.fit(Xtrain, ytrain)
sk_pred = sk_model.predict(Xtest)

print(metrics.classification_report(ytest, xgb_pred))
print(metrics.classification_report(ytest, sk_pred))

# Fraction of test rows on which both models predict the same class
print('agreement:', np.mean(xgb_pred == sk_pred))
```
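If you want an XGBoost counterpart to `min_samples_leaf=5`, one rough option is to translate it into `min_child_weight`. This is a sketch under assumptions: `objective='binary:logistic'`, `base_score=0.5` (the default listed in the signature comment), and that XGBoost accepts a child once its hessian sum reaches `min_child_weight`. Inside the first tree every sample is still predicted at `base_score`, so each one contributes the same hessian p(1 - p) = 0.25. The helper names below are hypothetical, not part of XGBoost.

```python
import math


def logistic_hessian(p: float) -> float:
    """Hessian of the log loss w.r.t. the raw margin, for a sample predicted at probability p."""
    return p * (1.0 - p)


def min_child_weight_for(min_samples_leaf: int, base_score: float = 0.5) -> float:
    """Hypothetical helper: min_child_weight that, in the first tree (all samples
    still predicted at base_score), requires at least min_samples_leaf samples per child."""
    return min_samples_leaf * logistic_hessian(base_score)


def min_samples_implied(min_child_weight: float, base_score: float = 0.5) -> int:
    """Hypothetical helper: smallest child size whose hessian sum reaches min_child_weight."""
    return math.ceil(min_child_weight / logistic_hessian(base_score))


print(min_child_weight_for(5))   # 1.25
print(min_samples_implied(1.0))  # 4 (XGBoost's default min_child_weight=1)
```

Under these assumptions, `min_child_weight=1.25` would mirror `min_samples_leaf=5` for the first tree only (later trees see different hessians as predictions move away from `base_score`). It also suggests the default `min_child_weight=1` already behaves like a minimum of about 4 samples per leaf, which may be why the ignored `min_samples_leaf` made little visible difference here.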

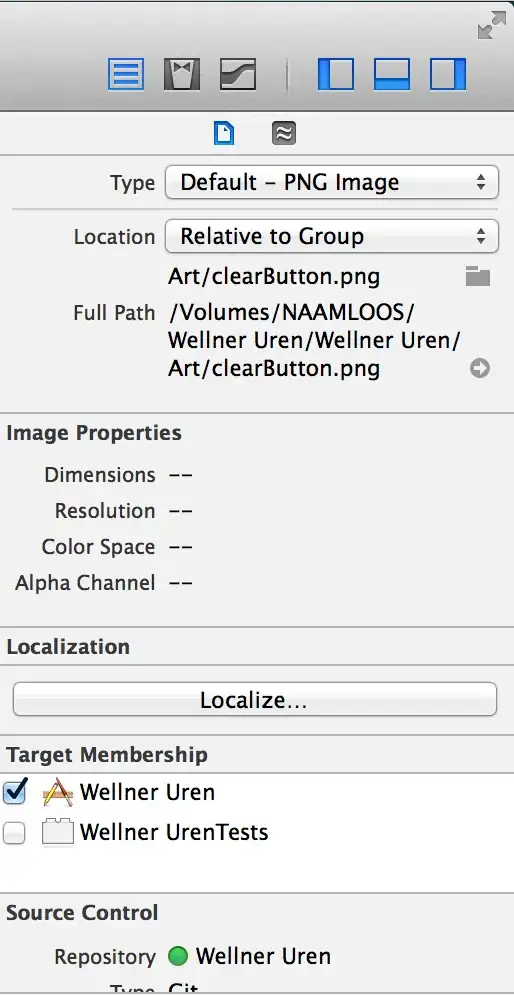

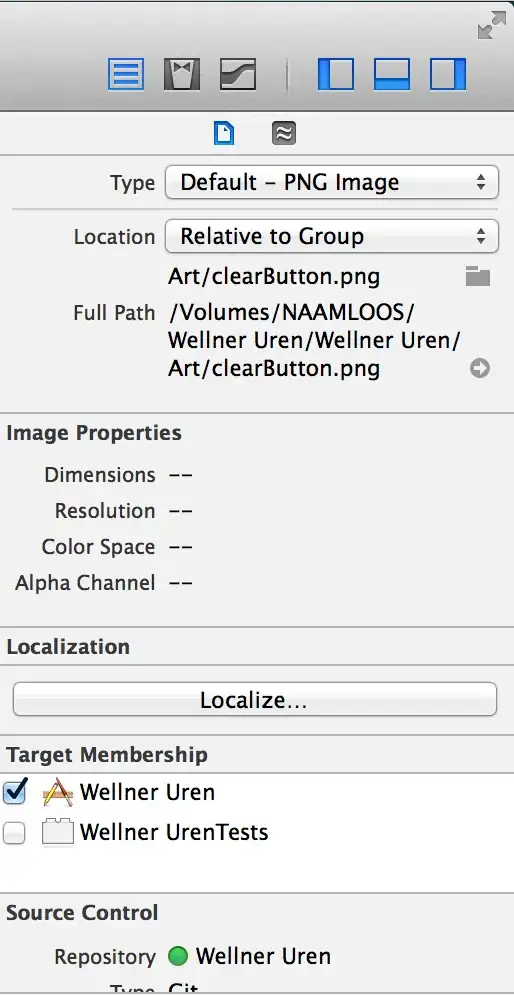

plot_tree(xgb_model, rankdir='LR'); plt.show()

export_graphviz(sk_model, 'sk_model.dot'); subprocess.call('dot -Tpng sk_model.dot -o sk_model.png'.split())

Some performance metrics (I know, I didn't calibrate the classifiers totally)...

>>> print(metrics.classification_report(ytest, xgb_pred))

precision recall f1-score support

0 0.86 0.82 0.84 125036

1 0.83 0.87 0.85 124964

micro avg 0.85 0.85 0.85 250000

macro avg 0.85 0.85 0.85 250000

weighted avg 0.85 0.85 0.85 250000

>>> print(metrics.classification_report(ytest, sk_pred))

precision recall f1-score support

0 0.86 0.82 0.84 125036

1 0.83 0.87 0.85 124964

micro avg 0.85 0.85 0.85 250000

macro avg 0.85 0.85 0.85 250000

weighted avg 0.85 0.85 0.85 250000

And some pictures:

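So, barring any investigative mistakes or overgeneralizations, an XGBClassifier (and, I would assume, XGBRegressor) with one estimator seems to behave identically to a scikit-learn DecisionTreeClassifier with the same shared parameters: the classification reports match exactly and the rendered trees can be compared side by side.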
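One plausible explanation, sketched under stated assumptions: for the first tree, with `objective='binary:logistic'`, `base_score=0.5`, and the regularization terms λ (`reg_lambda`) and γ (`gamma`) set to zero, XGBoost's split gain reduces to scikit-learn's sample-weighted Gini decrease. XGBoost scores a split as

$$
\text{Gain} = \frac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{(G_L+G_R)^2}{H_L+H_R+\lambda}\right] - \gamma ,
$$

where $G$ and $H$ are sums of per-sample gradients $g_i = p - y_i$ and hessians $h_i = p(1-p)$. Inside the first tree every sample is predicted at $p = \tfrac12$, so for a node with $n_0$ negatives and $n_1$ positives ($n = n_0 + n_1$):

$$
G = \frac{n_0 - n_1}{2},\qquad H = \frac{n}{4},\qquad \frac{G^2}{H} = \frac{(n_0 - n_1)^2}{n} = n - \frac{4 n_0 n_1}{n} = n - 2n\,\mathrm{Gini},
$$

with $\mathrm{Gini} = 1 - (n_0/n)^2 - (n_1/n)^2 = 2 n_0 n_1 / n^2$. Setting $\lambda = \gamma = 0$ and using $n_L + n_R = n$:

$$
\text{Gain} = n\,\mathrm{Gini} - n_L\,\mathrm{Gini}_L - n_R\,\mathrm{Gini}_R ,
$$

which is the impurity decrease scikit-learn maximizes (up to a constant factor $1/N$). The leaf value $-G/(H+\lambda)$ is positive exactly when $n_1 > n_0$, so each leaf also predicts its majority class. The default `reg_lambda=1` should only perturb this slightly for large nodes; histogram or approximate split finding (recent XGBoost releases default to `tree_method='hist'`) or a data-estimated `base_score` could still make the trees diverge. A quick NumPy check of the λ = 0 identity (the helper functions are hypothetical, not XGBoost API):

```python
import numpy as np


def xgb_gain(y_left, y_right, base_score=0.5, reg_lambda=0.0, gamma=0.0):
    """Split gain for binary:logistic when every sample is still predicted at
    base_score (true inside the first tree). Hypothetical helper."""
    def grad_hess(y):
        g = np.sum(base_score - y)                     # sum of gradients p - y
        h = len(y) * base_score * (1.0 - base_score)  # sum of hessians p(1 - p)
        return g, h

    def score(g, h):
        return g * g / (h + reg_lambda)

    gl, hl = grad_hess(y_left)
    gr, hr = grad_hess(y_right)
    return 0.5 * (score(gl, hl) + score(gr, hr) - score(gl + gr, hl + hr)) - gamma


def weighted_gini(y):
    """n * Gini impurity of a node with 0/1 labels y."""
    p1 = np.mean(y)
    return len(y) * (1.0 - p1**2 - (1.0 - p1) ** 2)


def gini_decrease(y_left, y_right):
    """Sample-weighted Gini decrease of a split (scikit-learn's criterion, times N)."""
    parent = np.concatenate([y_left, y_right])
    return weighted_gini(parent) - weighted_gini(y_left) - weighted_gini(y_right)


rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200).astype(float)
for cut in (10, 50, 100, 150):
    yl, yr = y[:cut], y[cut:]
    # The two numbers should agree up to floating-point rounding
    print(cut, xgb_gain(yl, yr), gini_decrease(yl, yr))
```

Passing `reg_lambda=1.0` to `xgb_gain` shows how much the default regularization shifts the gain away from the pure Gini value for a given node size.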