I created a cluster in Azure Databricks and mounted an Azure Blob Storage container on its DBFS (Databricks File System). In a notebook I read and transform data using PySpark, and afterwards I want to write the transformed dataset back to Azure Blob Storage. I do this with the following command:

model_data.write.mode("overwrite").format("com.databricks.spark.csv").options(header = "True", delimiter = ",").csv("/mnt/flights/model_data.csv")

I also tried

model_data.coalesce(1).write.mode("overwrite").format("com.databricks.spark.csv").options(header = "True", delimiter = ",").save("/mnt/flights/model_data.csv")

but I couldn't get the result I wanted, which was to write the model_data DataFrame as a single file named model_data.csv in the container I mounted previously.

The result is always

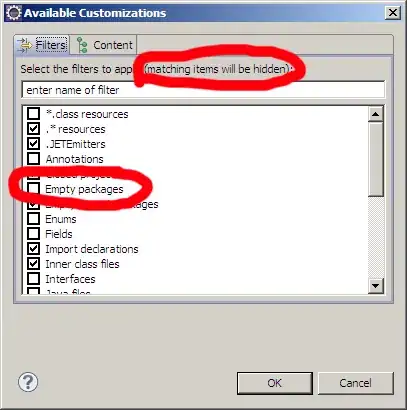

This picture shows what the container looks like in Azure Blob Storage.

Instead of model_data.csv, a file with a pseudorandom name like "part-xxxxxxxxxx.csv" is created.
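To make the goal concrete: as far as I understand, Spark treats the path passed to the writer as an output directory and writes one part file per partition into it, so even with coalesce(1) the name of the data file is chosen by Spark. The post-processing step I am after could be sketched roughly as below. This is only a sketch under assumptions: `promote_single_part_file` is a hypothetical helper of mine, the temporary directory name `model_data_tmp` is made up, and the `/dbfs/...` paths assume the mount is reachable through the local file API on the driver.

```python
import glob
import os
import shutil


def promote_single_part_file(spark_output_dir: str, target_file: str) -> str:
    """Move the only part-*.csv in a Spark output directory to target_file.

    Hypothetical helper: assumes the DataFrame was written with coalesce(1),
    so exactly one part file exists in spark_output_dir.
    """
    part_files = sorted(glob.glob(os.path.join(spark_output_dir, "part-*.csv")))
    if len(part_files) != 1:
        raise ValueError(
            f"expected exactly one part file in {spark_output_dir}, "
            f"found {len(part_files)}"
        )
    # Give the data file its final name outside the Spark output directory.
    shutil.move(part_files[0], target_file)
    # Remove the now-empty output directory along with marker files
    # such as _SUCCESS.
    shutil.rmtree(spark_output_dir)
    return target_file


# Intended use in the notebook (not run here; paths are assumptions):
# model_data.coalesce(1).write.mode("overwrite") \
#     .option("header", "true").csv("/mnt/flights/model_data_tmp")
# promote_single_part_file("/dbfs/mnt/flights/model_data_tmp",
#                          "/dbfs/mnt/flights/model_data.csv")
```

Note that the Spark output goes to a differently named temporary directory, because writing to `/mnt/flights/model_data.csv` directly makes that path a directory and the final file could not take the same name.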

Thanks!