TL;DR: The Windows terminal hates Unicode. You can work around it, but it's not pretty.

Your issues here are unrelated to "char versus wchar_t". In fact, there's nothing wrong with your program! The problems only arise when the text leaves through cout and arrives at the terminal.

You're probably used to thinking of a char as a "character"; this is a common (and understandable) misconception. In C and C++, the char type is usually an 8-bit integer, so it is more accurately described as a byte.

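To make the "char is a byte" point concrete, here is a minimal sketch. The names `byteLength` and `kPerson` are made up for illustration, and the string is written as explicit byte escapes so the result does not depend on how the source file is encoded. One Chinese character (U+4EBA) occupies three char elements, so `byteLength(kPerson)` should report 3, not 1.

```cpp
#include <climits>
#include <cstddef>
#include <string>

// These hold on every conforming C++ implementation.
static_assert(sizeof(char) == 1, "sizeof(char) is 1 by definition");
static_assert(CHAR_BIT >= 8, "a char holds at least 8 bits");

// Hypothetical helper: how many char elements (bytes) a string occupies.
std::size_t byteLength(const std::string& s)
{
    return s.length();
}

// U+4EBA, a single Chinese character, written as its three UTF-8 bytes.
const std::string kPerson = "\xe4\xba\xba";
```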
Your text file chineseVocab.txt is encoded as UTF-8. When you read this file via fstream, what you get is a string of UTF-8-encoded bytes.

There is no such thing as a "character" in I/O; you're always transmitting bytes in a particular encoding. In your example, you are reading UTF-8-encoded bytes from a file handle (fin).

Try running this, and you should see identical results on both platforms (Windows and Linux):

    #include <fstream>
    #include <iostream>
    #include <string>

    using namespace std;

    int main()
    {
        fstream fin("chineseVocab.txt");
        string line;
        while (getline(fin, line))
        {
            cout << "Number of bytes in the line: " << dec << line.length() << endl;
            cout << " ";
            for (char c : line)
            {
                // Go through unsigned char so the stream prints the byte's
                // numeric value (0-255) instead of the character itself:
                unsigned int byte = (unsigned char)c;
                cout << hex << byte << " ";
            }
            cout << endl;
            cout << endl;
        }
        return 0;
    }

Here's what I see on my machine (Windows):

    Number of bytes in the line: 16
     e4 ba ba 28 72 c3 a9 6e 29 2c 70 65 72 73 6f 6e

    Number of bytes in the line: 15
     e5 88 80 28 64 c4 81 6f 29 2c 6b 6e 69 66 65

    Number of bytes in the line: 14
     e5 8a 9b 28 6c c3 ac 29 2c 70 6f 77 65 72

    Number of bytes in the line: 27
     e5 8f 88 28 79 c3 b2 75 29 2c 72 69 67 68 74 20 68 61 6e 64 3b 20 61 67 61 69 6e

    Number of bytes in the line: 15
     e5 8f a3 28 6b c7 92 75 29 2c 6d 6f 75 74 68

So far, so good.

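A side note on the cast in the loop above, since it looks odd at first. On platforms where char is signed, a byte such as 0xe4 is a negative number, and converting it straight to unsigned int sign-extends it. The sketch below uses two hypothetical helpers, `byteToHex` and `byteToHexNaive`, to show the difference. The naive version's result for non-ASCII bytes depends on the platform (typically `ffffffe4` where char is signed), which is exactly why the intermediate unsigned char cast is there.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: formats one byte as lowercase hex.
// Casting to unsigned char first keeps the value in the range 0-255.
std::string byteToHex(char c)
{
    std::ostringstream out;
    out << std::hex
        << static_cast<unsigned int>(static_cast<unsigned char>(c));
    return out.str();
}

// Hypothetical helper: same idea, but without the intermediate cast.
// Where char is signed, bytes >= 0x80 are sign-extended before printing.
std::string byteToHexNaive(char c)
{
    std::ostringstream out;
    out << std::hex << static_cast<unsigned int>(c);
    return out.str();
}
```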
The problem starts now: you want to write those same UTF-8-encoded bytes to another file handle (cout).

The cout file handle is connected to your CLI (the "terminal", the "console", the "shell", whatever you wanna call it). The CLI reads bytes from cout and decodes them into characters so they can be displayed.

Linux terminals are usually configured to use a UTF-8 decoder. Good news! Your bytes are UTF-8-encoded, so your Linux terminal's decoder matches the text file's encoding. That's why everything looks good in the terminal.

Windows terminals, on the other hand, are usually configured to use a system-dependent decoder (yours appears to be DOS codepage 437). Bad news! Your bytes are UTF-8-encoded, so your Windows terminal's decoder does not match the text file's encoding. That's why everything looks garbled in the terminal.

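Here is a small sketch of why the decoder matters. A single-byte codepage such as 437 turns every byte into one glyph, while a UTF-8 decoder groups lead and continuation bytes into one character. The helper `countCodePoints` and the constant `kFirstLine` are names I made up; the bytes are the first line of the hex dump above, and the helper assumes well-formed UTF-8. A UTF-8 terminal would show 13 characters for that line, whereas a codepage 437 terminal would show 16 glyphs, one per byte.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: counts the code points in a well-formed UTF-8 string
// by skipping continuation bytes (bit pattern 10xxxxxx).
std::size_t countCodePoints(const std::string& utf8)
{
    std::size_t n = 0;
    for (unsigned char b : utf8)
    {
        if ((b & 0xC0) != 0x80)
            ++n;
    }
    return n;
}

// First line of the hex dump, spelled out as explicit bytes:
// e4 ba ba 28 72 c3 a9 6e 29 2c 70 65 72 73 6f 6e
const std::string kFirstLine = "\xe4\xba\xba(r\xc3\xa9n),person";
```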
OK, so how do you solve this? Unfortunately, I couldn't find any portable way to do it... You will need to fork your program into a Linux version and a Windows version. In the Windows version:

- Convert your UTF-8 bytes into UTF-16 code units.

- Set standard output to UTF-16 mode.

- Write to wcout instead of cout.

- Tell your users to change their terminals to a font that supports Chinese characters.

Here's the code:

    #include <fstream>
    #include <iostream>
    #include <string>
    #include <windows.h>
    #include <fcntl.h>
    #include <io.h>
    #include <stdio.h>

    using namespace std;

    // Based on this article:
    // https://msdn.microsoft.com/magazine/mt763237?f=255&MSPPError=-2147217396
    wstring utf16FromUtf8(const string & utf8)
    {
        std::wstring utf16;

        // Empty input --> empty output
        if (utf8.length() == 0)
            return utf16;

        // Reject the string if its bytes do not constitute valid UTF-8
        constexpr DWORD kFlags = MB_ERR_INVALID_CHARS;

        // MultiByteToWideChar takes the source length as an int, not a size_t
        const int nBytes = static_cast<int>(utf8.length());

        // Compute how many 16-bit code units are needed to store this string:
        const int nCodeUnits = ::MultiByteToWideChar(
            CP_UTF8,      // Source string is in UTF-8
            kFlags,       // Conversion flags
            utf8.data(),  // Source UTF-8 string pointer
            nBytes,       // Length of the source UTF-8 string, in bytes
            nullptr,      // Unused - no conversion done in this step
            0             // Request size of destination buffer, in wchar_ts
        );

        // Invalid UTF-8 detected? Return empty string:
        if (!nCodeUnits)
            return utf16;

        // Allocate space for the UTF-16 code units:
        utf16.resize(nCodeUnits);

        // Convert from UTF-8 to UTF-16
        const int result = ::MultiByteToWideChar(
            CP_UTF8,      // Source string is in UTF-8
            kFlags,       // Conversion flags
            utf8.data(),  // Source UTF-8 string pointer
            nBytes,       // Length of source UTF-8 string, in bytes
            &utf16[0],    // Pointer to destination buffer
            nCodeUnits    // Size of destination buffer, in code units
        );

        // Conversion failed? Return empty string:
        if (!result)
            utf16.clear();

        return utf16;
    }

    int main()
    {
        // Based on this article:
        // https://blogs.msmvps.com/gdicanio/2017/08/22/printing-utf-8-text-to-the-windows-console/
        _setmode(_fileno(stdout), _O_U16TEXT);

        fstream fin("chineseVocab.txt");
        string line;
        while (getline(fin, line))
            wcout << utf16FromUtf8(line) << endl;

        return 0;
    }

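To make the conversion step less of a black box, here is a rough hand-written sketch of what a UTF-8 to UTF-16 conversion does. `utf16FromUtf8Portable` is a hypothetical name of mine, and it uses `char16_t` and `std::u16string` rather than `wchar_t`. Unlike the MultiByteToWideChar call above, it assumes well-formed input and does no validation, so treat it as an illustration rather than a replacement.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical helper: decodes well-formed UTF-8 into UTF-16 code units.
// Malformed input is NOT detected (assumption: the caller passes valid UTF-8).
std::u16string utf16FromUtf8Portable(const std::string& utf8)
{
    std::u16string out;
    std::size_t i = 0;
    while (i < utf8.size())
    {
        const unsigned char lead = static_cast<unsigned char>(utf8[i]);
        std::uint32_t cp = 0;    // code point being assembled
        std::size_t extra = 0;   // number of continuation bytes to read

        // The lead byte says how many continuation bytes follow.
        if (lead < 0x80)      { cp = lead;        extra = 0; }
        else if (lead < 0xE0) { cp = lead & 0x1F; extra = 1; }
        else if (lead < 0xF0) { cp = lead & 0x0F; extra = 2; }
        else                  { cp = lead & 0x07; extra = 3; }
        ++i;

        // Each continuation byte contributes its low 6 bits.
        for (; extra > 0 && i < utf8.size(); --extra, ++i)
            cp = (cp << 6) | (static_cast<unsigned char>(utf8[i]) & 0x3F);

        if (cp < 0x10000)
        {
            // Fits in a single 16-bit code unit.
            out.push_back(static_cast<char16_t>(cp));
        }
        else
        {
            // Needs a surrogate pair: high surrogate, then low surrogate.
            cp -= 0x10000;
            out.push_back(static_cast<char16_t>(0xD800 + (cp >> 10)));
            out.push_back(static_cast<char16_t>(0xDC00 + (cp & 0x3FF)));
        }
    }
    return out;
}
```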
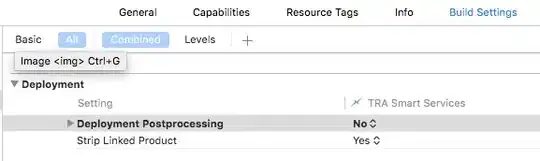

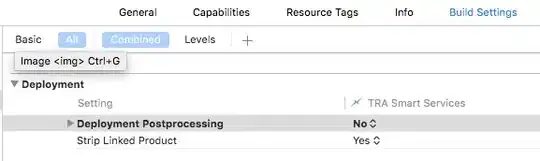

In my terminal, it mostly looks OK after I change the font to MS Gothic:

Some characters are still messed up, but that's due to the font not supporting them.