I need to write a Spark SQL query with an inner select and a window function (`partition by`). The problem is that I get an AnalysisException. I have already spent a few hours on this, and the other approaches I tried did not work either.

Exception:

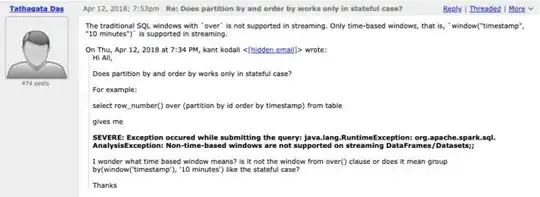

    Exception in thread "main" org.apache.spark.sql.AnalysisException: Non-time-based windows are not supported on streaming DataFrames/Datasets;;
    Window [sum(cast(_w0#41 as bigint)) windowspecdefinition(deviceId#28, timestamp#30 ASC NULLS FIRST, RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS grp#34L], [deviceId#28], [timestamp#30 ASC NULLS FIRST]
    +- Project [currentTemperature#27, deviceId#28, status#29, timestamp#30, wantedTemperature#31, CASE WHEN (status#29 = cast(false as boolean)) THEN 1 ELSE 0 END AS _w0#41]

I assume this query is too complicated to implement this way, but I don't know how to fix it.

    SparkSession spark = SparkUtils.getSparkSession("RawModel");
    Dataset<RawModel> datasetMap = readFromKafka(spark);
    datasetMap.registerTempTable("test");

    Dataset<Row> res = datasetMap.sqlContext().sql("" +
        " select deviceId, grp, avg(currentTemperature) as averageT, min(timestamp) as minTime ,max(timestamp) as maxTime, count(*) as countFrame " +
        " from (select test.*, sum(case when status = 'false' then 1 else 0 end) over (partition by deviceId order by timestamp) as grp " +
        " from test " +
        " ) test " +
        " group by deviceid, grp ");

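To make clear what the query is supposed to compute, here is a minimal plain-Java sketch of the same logic on a static in-memory list, without Spark. The names `GroupingSketch`, `Frame`, `Summary` and `summarize` are made up for this illustration and are not part of my real code; the field types are guesses based on the plan above (`status` as boolean), and `timestamp` is simplified to a `long`. It also assumes timestamps are unique per device.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class GroupingSketch {

    // Hypothetical stand-in for one row of the "test" view.
    static final class Frame {
        final String deviceId;
        final boolean status;
        final long timestamp;
        final double currentTemperature;

        Frame(String deviceId, boolean status, long timestamp, double currentTemperature) {
            this.deviceId = deviceId;
            this.status = status;
            this.timestamp = timestamp;
            this.currentTemperature = currentTemperature;
        }
    }

    // One output row: the aggregates for a single (deviceId, grp) pair.
    static final class Summary {
        final String deviceId;
        final long grp;
        double sumT;
        long minTime = Long.MAX_VALUE;
        long maxTime = Long.MIN_VALUE;
        long countFrame;

        Summary(String deviceId, long grp) {
            this.deviceId = deviceId;
            this.grp = grp;
        }

        double averageT() {
            return sumT / countFrame;
        }
    }

    static List<Summary> summarize(List<Frame> frames) {
        // Equivalent of "partition by deviceId order by timestamp".
        List<Frame> sorted = new ArrayList<>(frames);
        sorted.sort(Comparator.comparing((Frame f) -> f.deviceId)
                .thenComparingLong(f -> f.timestamp));

        Map<String, Long> runningFalseCount = new LinkedHashMap<>();
        Map<String, Summary> groups = new LinkedHashMap<>();
        for (Frame f : sorted) {
            // Running count of status = false rows per device, current row included.
            // Assumption: timestamps are unique per device (ties are not treated like SQL RANGE).
            long grp = runningFalseCount.merge(f.deviceId, f.status ? 0L : 1L, Long::sum);
            // Equivalent of "group by deviceId, grp".
            Summary s = groups.computeIfAbsent(f.deviceId + "#" + grp,
                    k -> new Summary(f.deviceId, grp));
            s.sumT += f.currentTemperature;
            s.minTime = Math.min(s.minTime, f.timestamp);
            s.maxTime = Math.max(s.maxTime, f.timestamp);
            s.countFrame++;
        }
        return new ArrayList<>(groups.values());
    }

    public static void main(String[] args) {
        List<Frame> frames = Arrays.asList(
                new Frame("a", true, 1L, 20.0),
                new Frame("a", false, 2L, 22.0),
                new Frame("a", true, 3L, 24.0));
        for (Summary s : summarize(frames)) {
            System.out.println(s.deviceId + " grp=" + s.grp + " avg=" + s.averageT()
                    + " min=" + s.minTime + " max=" + s.maxTime + " count=" + s.countFrame);
        }
    }
}
```

So every row with `status = false` starts a new group for its device, and I want the average temperature, first and last timestamp, and frame count per group. This works on a static list, but I need the same result on the streaming Dataset read from Kafka.
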
Any suggestions would be much appreciated. Thank you.