I changed the TensorFlow getting-started example as follows:

    import tensorflow as tf
    from sklearn.metrics import roc_auc_score
    import numpy as np
    import commons as cm
    from sklearn.metrics import confusion_matrix
    import matplotlib.pyplot as plt
    import pandas as pd
    import seaborn as sn

    mnist = tf.keras.datasets.mnist

    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0

    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(512, activation=tf.nn.tanh),
        # tf.keras.layers.Dense(512, activation=tf.nn.tanh),
        # tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation=tf.nn.tanh)
    ])

    model.compile(optimizer='adam',
                  loss='mean_squared_error',
                  # loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    history = cm.Histories()
    h = model.fit(x_train, y_train, epochs=50, callbacks=[history])
    print("history:", history.losses)
    cm.plot_history(h)
    # cm.plot(history.losses, history.aucs)

    test_predictions = model.predict(x_test)

    # Compute confusion matrix
    pred = np.argmax(test_predictions, axis=1)
    pred2 = model.predict_classes(x_test)
    confusion = confusion_matrix(y_test, pred)
    cm.draw_confusion(confusion, range(10))

With its default parameters (`relu` activation in the hidden layers, `softmax` at the output layer, and `sparse_categorical_crossentropy` as the loss function), it works fine, and the prediction accuracy for every digit is above 99%:

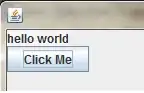
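As a rough illustration of the default setup, here is a minimal NumPy sketch of what a softmax output layer combined with a sparse categorical cross-entropy loss computes when the labels are plain integers such as `y_train`. The helper functions and the sample activations are hypothetical and written only for this illustration; this is not Keras's actual implementation.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def sparse_categorical_crossentropy(labels, probs):
    """Mean negative log-probability assigned to each sample's integer label."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.log(picked).mean())


# Hypothetical output-layer activations for 3 samples and 10 digit classes.
logits = np.zeros((3, 10))
logits[0, 7] = 5.0  # strongly favours class 7 (true label 7)
logits[1, 2] = 5.0  # strongly favours class 2 (true label 2)
logits[2, 4] = 5.0  # strongly favours class 4 (true label 9, so a mistake)
labels = np.array([7, 2, 9])  # integer labels, not one-hot vectors

probs = softmax(logits)                      # each row sums to 1
loss = sparse_categorical_crossentropy(labels, probs)
pred = np.argmax(probs, axis=1)              # same argmax step as in the post

print("predicted classes:", pred)
print("loss:", loss)
```

In this sketch the integer label is used only as an index into the predicted probability vector, so the labels never need to be converted to one-hot form.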

However, with my parameters (`tanh` activation and `mean_squared_error` loss), it just predicts 0 for all test samples.

What is the problem? The accuracy increases with each epoch and reaches 99%, and the loss is about 20.