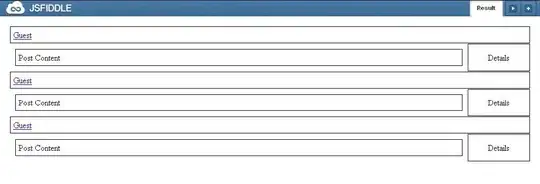

My ultimate goal is to web scrape the Standings page of The Puzzled Pint for Montreal.

I think I need to scrape it dynamically (e.g. with RSelenium), since the table I'm interested in is inside an iframe: an embedded HTML document that is displayed independently of the page containing it.

Some have suggested that scraping directly from the source of these iframes is the way to go. Using the Inspector in Firefox's web developer tools, I found the iframe's src= attribute, which points to a Google Sheet.

First, I used the robotstxt package to check robots.txt and make sure we're allowed to scrape the Google Sheet:

    library(robotstxt)
    paths_allowed("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=203220308")

Now that I know I have permission, I tried the RCurl package. Getting the first sheet is simple:

    library(RCurl)

    sheet <- getForm("https://docs.google.com/spreadsheet/pub",
                     hl = "en_US",
                     key = "1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U",
                     output = "csv",
                     .opts = list(followlocation = TRUE, verbose = TRUE, ssl.verifypeer = FALSE))
    df <- read.csv(textConnection(sheet))
    head(df)

However, when I click any of the other Month/Year links on this Google Sheet, the gid= value in the URL changes. For example, for October 2018 it becomes:

https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=1367583807
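
Since the gid is the only part of the URL that changes from tab to tab, it may help to treat it as data. A minimal sketch in base R, using a hypothetical helper `extract_gid()` (not from any package), that pulls the gid out of URLs like the ones above, whether it follows `#` or `&`:

```r
# Hypothetical helper: return the numeric gid from each URL as a string,
# or NA when a URL has no gid. Matches "#gid=", "?gid=" and "&gid=".
extract_gid <- function(urls) {
  vapply(urls, function(u) {
    m <- regmatches(u, regexpr("[?&#]gid=[0-9]+", u))
    if (length(m) == 0) NA_character_ else sub("^[?&#]gid=", "", m)
  }, character(1), USE.NAMES = FALSE)
}

extract_gid("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=1367583807")
```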

I'm not sure whether it's possible to scrape widgets with RCurl. If it is, I'd love to hear how.
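
One thing that might be worth trying before reaching for RSelenium is requesting each tab as CSV by its gid. This sketch rests on an assumption I haven't verified here: that the published sheet's `/pub` endpoint honours `gid=`, `single=true` and `output=csv` to return a single tab. `published_csv_url()` is a hypothetical helper, and the fetch itself needs network access, so it is left commented out.

```r
# Hypothetical helper: URL for one published tab (gid) as CSV.
# ASSUMPTION: the /pub endpoint accepts gid=, single=true and output=csv.
published_csv_url <- function(key, gid) {
  sprintf("https://docs.google.com/spreadsheets/d/%s/pub?gid=%s&single=true&output=csv",
          key, gid)
}

key <- "1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U"
gids <- c("203220308", "1367583807")
urls <- published_csv_url(key, gids)  # sprintf() is vectorised over gids

# Fetching requires network access:
# library(RCurl)
# sheets <- lapply(urls, function(u) read.csv(text = getURL(u, followlocation = TRUE)))
```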

So it looks like I'll need to use RSelenium for this:

    library(RSelenium)

    # connect to a running Selenium server
    remDr <- remoteDriver(
      remoteServerAddr = "192.168.99.100",
      port = 4445L
    )
    remDr$open()

    # navigate to the site of interest
    remDr$navigate("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=203220308")

My problem is getting the HTML for the table on this page. The following was suggested on SO, but it doesn't work for me: instead of the table, it returns only the Month/Year metadata from the links.

    library(XML)

    doc <- htmlParse(remDr$getPageSource()[[1]])
    readHTMLTable(doc)

I believe I need to switch to the inner frame, but I'm not sure how to do this.
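
To illustrate why the outer page source doesn't contain the table, here is an offline sketch on toy HTML. The markup only imitates the widget's layout (the `switcherItem` class and `pageswitcher-content` id come from the selectors used in this question; everything else is made up): the outer document holds an `<iframe>`, and the table lives in the separate document its `src` points to.

```r
library(XML)

# Toy stand-in for the outer widget page (NOT Google's real markup).
outer_html <- '<html><body>
  <ul><li class="switcherItem">October 2018</li></ul>
  <iframe id="pageswitcher-content"
          src="pubhtml/sheet?headers=false&amp;gid=203220308"></iframe>
</body></html>'

doc <- htmlParse(outer_html, asText = TRUE)

# No <table> exists in the outer document; the iframe's content is not part of it.
n_tables <- length(getNodeSet(doc, "//table"))

# The iframe's src attribute is the URL of the document that actually holds the table.
frame_src <- xpathSApply(doc, "//iframe[@id='pageswitcher-content']", xmlGetAttr, "src")
```

On the live page, running the same `xpathSApply()` call on `htmlParse(remDr$getPageSource()[[1]])` should return the iframe's current `src`, possibly as a relative URL that needs the `https://docs.google.com` prefix.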

For example, when I look for the CSS selector for this table with SelectorGadget in Chrome, it warns me that the table is inside an iframe and that to select within it I need to click a link.

When I navigate to that link and run readHTMLTable() on the page source, I get exactly the information I want:

    remDr$navigate("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pubhtml/sheet?headers=false&gid=203220308")
    doc <- htmlParse(remDr$getPageSource()[[1]])
    readHTMLTable(doc)
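
If the bare sheet URL works for one gid, it may work for every gid, which would avoid frame switching entirely. A sketch, assuming you already know the gids (only 203220308, 1367583807 and 690408156 appear in this question) and that `remDr` is an open `remoteDriver` as above; `sheet_url()` and `scrape_sheets()` are hypothetical helpers:

```r
library(XML)

# Hypothetical helper: bare sheet page for one tab, following the
# pubhtml/sheet?headers=false&gid= pattern that worked above.
sheet_url <- function(key, gid) {
  sprintf("https://docs.google.com/spreadsheets/d/%s/pubhtml/sheet?headers=false&gid=%s",
          key, gid)
}

# Hypothetical helper: visit each gid's bare sheet page with an open
# remoteDriver and return the parsed tables, named by gid.
scrape_sheets <- function(remDr, key, gids) {
  out <- lapply(gids, function(gid) {
    remDr$navigate(sheet_url(key, gid))
    doc <- htmlParse(remDr$getPageSource()[[1]], asText = TRUE)
    readHTMLTable(doc)
  })
  names(out) <- gids
  out
}

key <- "1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U"
```

For example, `tables <- scrape_sheets(remDr, key, c("203220308", "1367583807"))` would return a list with one element per gid, each holding whatever `readHTMLTable()` found on that page.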

The problem is that I still need RSelenium to move between the different pages/tables of the previous link (the iframe widget):

    remDr$navigate("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=203220308")

To move between the pages/tables, I used SelectorGadget to find the CSS selectors:

    # find all tab elements/links
    webElems <- remDr$findElements(using = "css", ".switcherItem")

    # select the first link (October 2018)
    webElem_01 <- webElems[[1]]

Then, using TightVNC Viewer, I verified that I was highlighting the correct element and "clicked" it (in this case the October 2018 link):

    webElem_01$highlightElement()
    webElem_01$clickElement()

Since I can see in TightVNC that the page changed, I assume no further steps are needed before scraping here. But, as mentioned, I need a way to programmatically switch into the inner iframe of each of these pages.
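
Clicking a tab and scraping immediately may race the iframe's reload. A minimal sketch of a generic polling helper, `wait_until()` (hypothetical, base R only). The commented RSelenium usage assumes that clicking a tab changes the iframe's `src` attribute, which I haven't confirmed:

```r
# Hypothetical helper: call `condition` (a function returning TRUE/FALSE)
# until it returns TRUE or `timeout` seconds pass, sleeping `interval` between tries.
wait_until <- function(condition, timeout = 10, interval = 0.5) {
  deadline <- Sys.time() + timeout
  repeat {
    if (isTRUE(condition())) return(invisible(TRUE))
    if (Sys.time() >= deadline) stop("condition not met within ", timeout, " seconds")
    Sys.sleep(interval)
  }
}

# Offline demo: a condition that only becomes TRUE on its third call.
calls <- 0
third_time <- function() {
  calls <<- calls + 1
  calls >= 3
}
wait_until(third_time, timeout = 5, interval = 0.01)

# Possible RSelenium usage (ASSUMPTION: the iframe's src changes on click):
# frame <- remDr$findElement(using = "id", "pageswitcher-content")
# old_src <- frame$getElementAttribute("src")[[1]]
# webElem_01$clickElement()
# wait_until(function() {
#   remDr$findElement(using = "id", "pageswitcher-content")$getElementAttribute("src")[[1]] != old_src
# })
```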

UPDATE

Okay, I figured out how to switch into the inner frame using remDr$switchToFrame(), but I can't figure out how to switch back to the outer frame in order to "click" the next link and repeat the process. My current hacky workaround is to navigate back to the main page and repeat the whole process for each link:

    # navigate to the main page
    remDr$navigate("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=690408156")

    # find the iframe that contains the table
    tableElem <- remDr$findElement(using = "id", "pageswitcher-content")

    # switch into the iframe
    remDr$switchToFrame(tableElem)

    # parse the iframe's HTML
    doc <- htmlParse(remDr$getPageSource()[[1]])
    readHTMLTable(doc)

    # how do I switch back to the outer frame?
    # the remDr$goBack() command doesn't seem to do this
    # workaround: navigate back to the main page, then to the next page, and repeat the process
    remDr$navigate("https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=690408156")
    webElems <- remDr$findElements(using = "css", ".switcherItem")
    webElem_01 <- webElems[[1]]
    webElem_01$clickElement()

    tableElem <- remDr$findElement(using = "id", "pageswitcher-content")

    # switch into the iframe
    remDr$switchToFrame(tableElem)

    # parse the iframe's HTML
    doc2 <- htmlParse(remDr$getPageSource()[[1]])
    readHTMLTable(doc2)
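
For reference, RSelenium's `remoteDriver` documentation says `switchToFrame()` also accepts `NULL`, in which case the server should switch back to the page's default (top-level) content; `goBack()`, by contrast, moves through browser history rather than frame focus. A sketch of a loop built on that, assuming the selectors used above (`.switcherItem`, `pageswitcher-content`), an open `remDr`, and a fixed pause long enough for each tab to load; `scrape_all_tabs()` is a hypothetical helper:

```r
library(XML)

# Hypothetical helper. ASSUMPTIONS: `remDr` is an open RSelenium remoteDriver,
# tabs match ".switcherItem", the table iframe has id "pageswitcher-content",
# and `pause` seconds is long enough for a clicked tab to load.
scrape_all_tabs <- function(remDr, widget_url, pause = 2) {
  remDr$navigate(widget_url)
  n_tabs <- length(remDr$findElements(using = "css", ".switcherItem"))
  results <- vector("list", n_tabs)
  tab_names <- character(n_tabs)

  for (i in seq_len(n_tabs)) {
    # Re-find the tabs on each pass in case earlier element references went stale.
    tabs <- remDr$findElements(using = "css", ".switcherItem")
    tab_names[i] <- tabs[[i]]$getElementText()[[1]]
    tabs[[i]]$clickElement()
    Sys.sleep(pause)

    # Move focus into the iframe and parse the table it contains.
    frame <- remDr$findElement(using = "id", "pageswitcher-content")
    remDr$switchToFrame(frame)
    doc <- htmlParse(remDr$getPageSource()[[1]], asText = TRUE)
    results[[i]] <- readHTMLTable(doc)

    # NULL returns focus to the top-level document so the next tab can be clicked.
    remDr$switchToFrame(NULL)
  }

  names(results) <- tab_names
  results
}
```

Usage would be something like `standings <- scrape_all_tabs(remDr, "https://docs.google.com/spreadsheets/d/1o1PlLIQS8v-XSuEz1eqZB80kcJk9xg5lsbueB7mTg1U/pub?output=html&widget=true#gid=690408156")`, giving one list element per tab, named by the tab's text.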