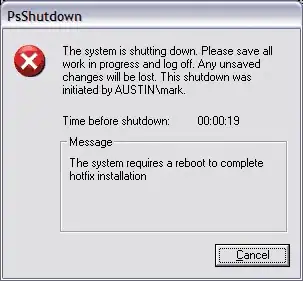

Let our model as several fully-connected layers:

I want to share middle layers and use two models with same weights like this:

Can I make this with Tensorflow?

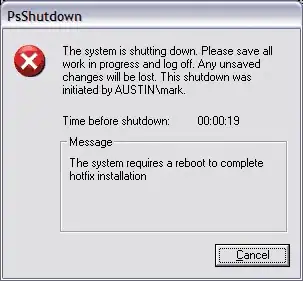

Yes, you can share layers! The official TensorFlow tutorial is here.

In your case, layer sharing can be implemented with variable scopes (`tf.variable_scope`, a TensorFlow 1.x API):

```python
import tensorflow as tf  # tf.variable_scope is TF 1.x; in TF 2.x use tf.compat.v1.variable_scope

# Create the network starts, which are not shared
# (create_start_of_net1, create_middle, etc. stand for your own layer-building functions)
start_of_net1 = create_start_of_net1(my_inputs_for_net1)
start_of_net2 = create_start_of_net2(my_inputs_for_net2)

# Create the shared middle layers.
# Start by creating the middle layers for one of the networks in a variable scope.
# create_middle must create its weights with tf.get_variable (not tf.Variable),
# otherwise reuse=True has no effect and new variables are created.
with tf.variable_scope("shared_middle", reuse=False):
    middle_of_net1 = create_middle(start_of_net1)

# Share the variables by using the same scope name and reuse=True
# when creating those layers for your other network
with tf.variable_scope("shared_middle", reuse=True):
    middle_of_net2 = create_middle(start_of_net2)

# Create the end layers, which are not shared
end_of_net1 = create_end_of_net1(middle_of_net1)
end_of_net2 = create_end_of_net2(middle_of_net2)
```

Once a layer has been created in a variable scope, you can reuse its variables as many times as you like by reopening that scope with `reuse=True`. Here, we reuse them only once.
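To make the idea behind sharing concrete without TensorFlow, here is a framework-free sketch (not TensorFlow's implementation): both networks hold a reference to the *same* middle-layer object, so any change to its weights made through one network is seen by the other. `Dense`, `Net` and the layer sizes are hypothetical names and values chosen only for illustration.

```python
import random
from typing import List


class Dense:
    """Tiny fully-connected layer computing relu(W x + b); illustrative only."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        self.bias = [0.0] * n_out

    def __call__(self, x: List[float]) -> List[float]:
        out = []
        for row, b in zip(self.weights, self.bias):
            z = sum(w * xi for w, xi in zip(row, x)) + b
            out.append(max(0.0, z))  # ReLU activation
        return out


class Net:
    """start -> middle -> end; `middle` may be the same object in several Nets."""

    def __init__(self, start: Dense, middle: Dense, end: Dense) -> None:
        self.start, self.middle, self.end = start, middle, end

    def __call__(self, x: List[float]) -> List[float]:
        return self.end(self.middle(self.start(x)))


rng = random.Random(0)
shared_middle = Dense(8, 8, rng)  # created once, used by both networks
net1 = Net(Dense(4, 8, rng), shared_middle, Dense(8, 2, rng))  # own start and end
net2 = Net(Dense(3, 8, rng), shared_middle, Dense(8, 5, rng))  # own start and end

# Usage: an update made through net1 is visible in net2
print(net1([1.0, 0.5, -0.5, 2.0]))  # 2 outputs
print(net2([1.0, 0.5, -0.5]))       # 5 outputs
net1.middle.bias[0] += 1.0          # "train" the shared layer via net1
assert net2.middle.bias[0] == 1.0   # net2 sees the same change
```

Reopening a variable scope with `reuse=True` plays the same role in TensorFlow 1.x: the second call to `create_middle` receives the existing variables instead of creating new ones.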