I have been searching the internet for hours for documentation on how to interpret the Euler angles returned by cv2.decomposeProjectionMatrix.

My problem seems simple: I have this 2D image of an aircraft.

I want to derive from that image how the aircraft is oriented with respect to the camera. Ultimately, I am looking for the Look and Depression angles (i.e. Azimuth and Elevation). I have 3D coordinates corresponding to the 2D features selected in my image; they are listed in my code below.
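To make the target quantities concrete, here is a minimal sketch of how azimuth and elevation could be read off a pose. It assumes a rotation matrix and translation vector that map model coordinates into camera coordinates (the convention used by cv2.solvePnP followed by cv2.Rodrigues), and it assumes the model axes implied by my point list: +x toward the left wing, +y aft, +z up. The function name and the sign conventions are illustrative choices, not anything defined by OpenCV.

```python
import numpy as np


def azimuth_elevation(rmat, tvec):
    """Return (azimuth_deg, elevation_deg) of the camera as seen from the aircraft.

    Assumptions (hypothetical conventions for this sketch):
      * x_cam = rmat @ x_model + tvec
      * model axes: +x left wing, +y aft, +z up
      * azimuth 0 = camera dead ahead of the nose, positive toward the left wing
      * elevation positive = camera above the aircraft's x-y plane
    """
    rmat = np.asarray(rmat, dtype=float).reshape(3, 3)
    tvec = np.asarray(tvec, dtype=float).reshape(3)

    # Camera centre expressed in model coordinates.
    cam_pos = -rmat.T @ tvec
    x, y, z = cam_pos

    # The nose points along -y, so "forward" is -y.
    azimuth = np.degrees(np.arctan2(x, -y))
    elevation = np.degrees(np.arctan2(z, np.hypot(x, y)))
    return float(azimuth), float(elevation)
```

With an rvec from a pose solver, this would be called as `azimuth_elevation(cv2.Rodrigues(rvec)[0], tvec)`. Working from the camera position rather than from Euler angles sidesteps the question of which axis order the angles use.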

    # --- Imports ---
    import os

    import cv2
    import numpy as np

    # --- Main ---
    if __name__ == "__main__":

        # Load image and resize to half size
        THIS_DIR = os.path.abspath(os.path.dirname(__file__))
        im = cv2.imread(os.path.abspath(os.path.join(THIS_DIR, "raptor.jpg")))
        im = cv2.resize(im, (im.shape[1]//2, im.shape[0]//2))
        size = im.shape

        # 2D image points (pixels, in the resized image)
        image_points = np.array([
            (230, 410),  # Nose
            (55, 215),   # right forward wingtip
            (227, 170),  # right aft outboard horizontal
            (257, 71),   # right forward vertical tail
            (532, 96),   # left forward vertical tail
            (605, 210),  # left aft outboard horizontal
            (700, 283)   # left forward wingtip
        ], dtype="double")

        # 3D model points (estimated)
        model_points = np.array([
            (   0.0, -484.1, -18.4),  # Nose
            (-758.1,  872.4, -15.9),  # right forward wingtip
            (-470.3, 1409.4,  -7.9),  # right aft outboard horizontal
            (-287.3, 1040.2, 323.3),  # right forward vertical tail
            ( 287.3, 1040.2, 323.3),  # left forward vertical tail
            ( 470.3, 1409.4,  -7.9),  # left aft outboard horizontal
            ( 758.1,  872.4, -15.9)   # left forward wingtip
        ], dtype="double")

        # Estimated camera internals
        focal_length = size[1]
        center = (size[1]/2, size[0]/2)
        camera_matrix = np.array(
            [[focal_length, 0, center[0]],
             [0, focal_length, center[1]],
             [0, 0, 1]], dtype="double"
        )

        # Lens distortion assumed to be zero
        dist_coeffs = np.zeros((4, 1))

        # Solving for perspective and point
        _, rvec, tvec = cv2.solvePnP(model_points, image_points, camera_matrix, dist_coeffs, flags=cv2.SOLVEPNP_ITERATIVE)

        # Rotation matrix
        rmat = cv2.Rodrigues(rvec)[0]

        # Projection matrix [R | t]
        pmat = np.hstack((rmat, tvec))

        # The last return value is a 3x1 array of Euler angles in degrees; flatten it before unpacking
        roll, pitch, yaw = cv2.decomposeProjectionMatrix(pmat)[-1].ravel()
        print('Roll: {:.2f}\nPitch: {:.2f}\nYaw: {:.2f}'.format(float(roll), float(pitch), float(yaw)))

        # Visualization
        # Point of interest far aft of the nose along the model y axis
        poi = np.array([(model_points[0][0], model_points[0][1]+1e6, model_points[0][2])])
        poi_end, jacobian = cv2.projectPoints(poi, rvec, tvec, camera_matrix, dist_coeffs)

        p1 = (int(image_points[0][0]), int(image_points[0][1]))  # nose
        p2 = (int(poi_end[0][0][0]), int(poi_end[0][0][1]))      # poi

        # Show the 2D features
        for p in image_points:
            cv2.circle(im, (int(p[0]), int(p[1])), 3, (0, 0, 255), -1)

        # Line from nose to projected point
        cv2.line(im, p1, p2, (255, 0, 0), 2)

        cv2.imshow("Output", im)
        cv2.waitKey(0)

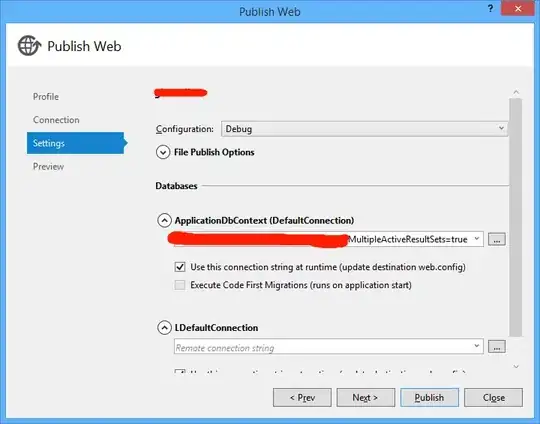

In my output image, the point projected aft does not seem to follow the centerline. I'm not entirely certain that my code is working as I intended, so please feel free to offer helpful input.
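One way to check whether the pose itself is sound, before worrying about the angles, is to reproject the model points and compare them with the picked pixels. The sketch below does this with a plain pinhole model in NumPy; it assumes zero lens distortion (as in my code) and a pose in the model-to-camera convention. The function name is hypothetical. With zero distortion it should agree with what cv2.projectPoints returns.

```python
import numpy as np


def reprojection_errors(model_points, image_points, rmat, tvec, camera_matrix):
    """Pixel distance between each measured feature and its reprojection.

    Assumes x_cam = rmat @ x_model + tvec and no lens distortion.
    """
    model_points = np.asarray(model_points, dtype=float).reshape(-1, 3)
    image_points = np.asarray(image_points, dtype=float).reshape(-1, 2)
    rmat = np.asarray(rmat, dtype=float).reshape(3, 3)
    tvec = np.asarray(tvec, dtype=float).reshape(3)
    camera_matrix = np.asarray(camera_matrix, dtype=float).reshape(3, 3)

    # Model frame -> camera frame.
    cam = model_points @ rmat.T + tvec

    # Pinhole projection, then divide by depth to get pixel coordinates.
    uvw = cam @ camera_matrix.T
    projected = uvw[:, :2] / uvw[:, 2:3]

    return np.linalg.norm(projected - image_points, axis=1)
```

Large errors on a few points would suggest a mismatched 2D/3D correspondence or poor model coordinates; uniformly large errors would point more toward the assumed camera matrix.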

Thanks in advance!!