The word count program below creates 10 partitions. My understanding is that setting master("local[2]") when creating the SparkSession object means it will run locally with 2 cores, i.e. 2 partitions.

Can someone help me understand why my Spark code is creating 10 partitions instead of 2?

Code:

SparkSession spark = SparkSession.builder().appName("JavaWordCount").master("local[2]").getOrCreate();

JavaRDD<String> lines = spark.read().textFile(args[0]).javaRDD();

JavaRDD<String> words = lines.flatMap(s -> Arrays.asList(SPACE.split(s)).iterator());

JavaPairRDD<String, Double> pairRDD = words.mapToPair(s -> new Tuple2<>(s, 1.0));

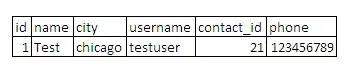

Screenshot of Spark Web UI:
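One possible explanation, sketched here under stated assumptions rather than confirmed for this job: `local[2]` sets the number of worker threads, while the partition count of a file read through `spark.read()` is driven mainly by the input size and the split-size settings. The plain-Java sketch below approximates that arithmetic. It assumes the default values of `spark.sql.files.maxPartitionBytes` (128 MB) and `spark.sql.files.openCostInBytes` (4 MB), a single splittable input file, and a hypothetical file size of about 1.2 GB, since the actual size is not given in the question. The class and method names are made up for illustration and are not part of Spark.

```java
// Illustrative approximation of how Spark sizes splits for file-based reads.
// This is NOT Spark code; names and the input size are hypothetical.
class SplitSizeSketch {
    // Assumed defaults: spark.sql.files.maxPartitionBytes and spark.sql.files.openCostInBytes.
    static final long MAX_PARTITION_BYTES = 128L * 1024 * 1024;
    static final long OPEN_COST_BYTES = 4L * 1024 * 1024;

    // Target split size: bytes per core, clamped between the open cost and the max partition size.
    static long maxSplitBytes(long totalFileBytes, int numFiles, int parallelism) {
        long totalBytes = totalFileBytes + (long) numFiles * OPEN_COST_BYTES;
        long bytesPerCore = totalBytes / parallelism;
        return Math.min(MAX_PARTITION_BYTES, Math.max(OPEN_COST_BYTES, bytesPerCore));
    }

    // Number of chunks a single splittable file is cut into (ceiling division).
    static long splitsForSingleFile(long fileBytes, int parallelism) {
        long splitSize = maxSplitBytes(fileBytes, 1, parallelism);
        return (fileBytes + splitSize - 1) / splitSize;
    }

    public static void main(String[] args) {
        long hypotheticalFileBytes = 1200L * 1024 * 1024; // assumed ~1.2 GB input
        int parallelism = 2;                              // from master("local[2]")
        System.out.println(splitsForSingleFile(hypotheticalFileBytes, parallelism)); // prints 10
    }
}
```

Under these assumptions a small input (a few MB) would yield about 2 partitions with `local[2]`, while a large input is capped at 128 MB per split and so produces more. Calling `lines.getNumPartitions()` in the original program, or `lines.repartition(2)` if exactly 2 partitions are wanted, would be one way to check and control this.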