I am doing transfer learning with a pre-trained Inception-ResNet-v2 model. From one of the conv layers I extract the best activation (best quality) and use it to calculate the predicted landmarks with OpenCV and NumPy operations. The loss function I apply is the mean_squared_error loss. Unfortunately, when this function is executed I get an error saying that no gradients are available for any of the variables. I have been struggling with this problem for two weeks and I don't know how to proceed. While debugging I could see that the problem occurs when the apply_gradients function is executed internally. I have searched for and tried some solutions from here, such as these:

- ValueError: No gradients provided for any variable in Tensorflow
- selecting trainable variables to compute gradient "No variables to optimize"
- Tensorflow: How to replace or modify gradient?
- ...

In addition, I have tried to write my own operation with gradient support, following this awesome tutorial: https://code-examples.net/en/q/253d718, because that approach wraps my Python and OpenCV code in a TensorFlow op. Unfortunately, the issue remains. Tracing the path from the output of the network to the mean_squared_error function in TensorBoard, I could see that the path exists and is continuous.

    import tensorflow as tf  # TensorFlow 1.x API

    # Extracts the best predicted images from a specific activation layer
    # PYTHON function: get_best_images(...) -> uses numpy and opencv
    # PYTHON function: extract_landmarks(...) -> uses numpy
    # end_points holds the conv layer that gets extracted
    best_predicted = tf.py_func(get_best_images,
                                [input, end_points['Conv2d_1a_3x3']],
                                tf.uint8)  # Gets best activation
    best_predicted.set_shape(input.shape)

    # Gets the predicted landmarks and processes both target and predicted
    # for further calculation
    proc_landmarks = tf.py_func(get_landmarks,
                                [best_predicted, target_landmarks],
                                [tf.int32, tf.int32])
    proc_landmarks[0].set_shape(target_landmarks.shape)  # target landmarks
    proc_landmarks[1].set_shape(target_landmarks.shape)  # predicted landmarks

    # --> HERE COMES THE COMPUTATION TO PROCESS THE TARGET AND PREDICTED LANDMARKS

    # Flattens the tensors, then reshapes them to shape (68, 1)
    target_flatten = tf.reshape(target_result[0], [-1])
    target_flatten = tf.reshape(target_flatten, [68, 1])
    predicted_flatten = tf.reshape(predicted_result[1], [-1])
    predicted_flatten = tf.reshape(predicted_flatten, [68, 1])
    edit_target_landmarks = tf.cast(target_flatten, dtype=tf.float32)
    edit_predicted_landmarks = tf.cast(predicted_flatten, dtype=tf.float32)

    # Calculating the loss
    mse_loss = tf.losses.mean_squared_error(labels=edit_target_landmarks,
                                            predictions=edit_predicted_landmarks)
    optimizer = tf.train.AdamOptimizer(learning_rate=0.001,
                                       name='ADAM_OPT').minimize(mse_loss)  # <-- the error occurs here
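
One thing that may be relevant in the pipeline above: both `tf.py_func` calls return integer tensors (`tf.uint8`, `tf.int32`), and as far as I know `tf.py_func` has no gradient registered by default. The framework-free sketch below is only an illustration of the general idea, not of TensorFlow's internals: it uses finite differences to show that a loss computed after rounding to an integer has a zero derivative almost everywhere, while the same loss on the unrounded value does not. The function names and the numbers are hypothetical.

```python
def quantized_loss(x, target):
    """Squared error computed after rounding x to an integer (mimics an int cast)."""
    predicted = float(int(round(x)))
    return (predicted - target) ** 2


def smooth_loss(x, target):
    """Squared error computed directly on the float value."""
    return (x - target) ** 2


def finite_difference(f, x, target, eps=1e-4):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps, target) - f(x - eps, target)) / (2 * eps)


x, target = 3.2, 5.0
grad_quantized = finite_difference(quantized_loss, x, target)  # rounding hides small changes in x
grad_smooth = finite_difference(smooth_loss, x, target)        # close to 2 * (x - target)

print("gradient through integer rounding:", grad_quantized)
print("gradient without rounding:", grad_smooth)
```

If this is what happens in the graph, an optimizer would receive no useful signal from the landmark loss even when the path looks connected in TensorBoard.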

The error message is as follows (for brevity, only some of the variables are listed):

> ValueError: No gradients provided for any variable, check your graph for ops that do not support gradients, between variables ["'InceptionResnetV2/Conv2d_1a_3x3/weights:0' shape=(3, 3, 3, 32) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_1a_3x3/BatchNorm/beta:0' shape=(32,) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_2a_3x3/weights:0' shape=(3, 3, 32, 32) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_2a_3x3/BatchNorm/beta:0' shape=(32,) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_2b_3x3/weights:0' shape=(3, 3, 32, 64) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_2b_3x3/BatchNorm/beta:0' shape=(64,) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_3b_1x1/weights:0' shape=(1, 1, 64, 80) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_3b_1x1/BatchNorm/beta:0' shape=(80,) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_4a_3x3/weights:0' shape=(3, 3, 80, 192) dtype=float32_ref>", "'InceptionResnetV2/Conv2d_4a_3x3/BatchNorm/beta:0' shape=(192,) dtype=float32_ref>", "'InceptionResnetV2/Mixed_5b/Branch_0/Conv2d_1x1/weights:0' shape=(1, 1, 192, 96) dtype=float32_ref>", "

EDIT:

I have managed to compute the gradients for the first two variables of the train list using this guide: Override Tensorflow Backward-Propagation. Following that guide, I realized that I had forgotten the third parameter (called the d parameter in the guide) in the forward and backward propagation functions, which in my case is the conv layer output of the net. Nevertheless, only the first two gradients get computed and all the others are missing. Do I have to compute and return a gradient for every trainable variable in the backpropagation function? If I understand correctly, the backpropagation function computes the derivatives with respect to the op's inputs, which in my case are two tensors (target and predicted) plus the conv layer output (i.e. return grad * op.inputs[0], grad * op.inputs[1], grad * op.inputs[2]). I thought that the gradients for all trainable variables are computed after the custom gradient has been defined, when opt.compute_gradients is called with the variable list as its second parameter. Am I right or wrong?
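
The chain-rule reasoning in the paragraph above can be sketched without TensorFlow. In this toy example (all names are hypothetical, and a single scalar weight stands in for the network) the custom op's backward function returns exactly one gradient per op input; the gradient for the upstream weight then follows by chaining through the differentiable layer that produced that input. This is a sketch of reverse-mode differentiation in general, under the assumption that every op between the weight and the custom op is itself differentiable.

```python
def layer_forward(w, x):
    """A stand-in for a differentiable network layer: out = w * x."""
    return w * x


def layer_backward(w, x, grad_out):
    """Gradient of the layer output w.r.t. the weight, chained with grad_out."""
    return grad_out * x


def custom_forward(target, predicted):
    """A stand-in for the custom op: squared error of its two inputs."""
    return (predicted - target) ** 2


def custom_backward(target, predicted, grad_out):
    """Returns one gradient per op input, in input order (not per variable)."""
    d_target = grad_out * -2.0 * (predicted - target)
    d_predicted = grad_out * 2.0 * (predicted - target)
    return d_target, d_predicted


w, x, target = 0.5, 4.0, 3.0

# Forward pass: weight -> layer -> custom op -> loss
predicted = layer_forward(w, x)
loss = custom_forward(target, predicted)

# Backward pass: the custom op only reports gradients for its own inputs...
d_target, d_predicted = custom_backward(target, predicted, 1.0)
# ...and the weight gradient is obtained by chaining through the layer.
d_w = layer_backward(w, x, d_predicted)

print("loss:", loss)
print("d_loss/d_predicted:", d_predicted)
print("d_loss/d_w:", d_w)
```

Note that in this toy op the gradient for an input is the local derivative multiplied by the incoming gradient, which is generally not the same as `grad * input`.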

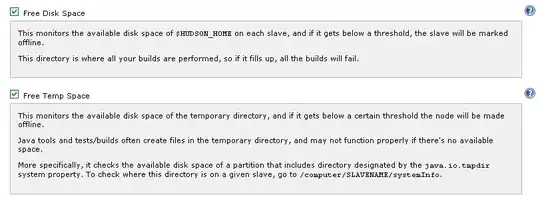

I have posted the part of the TensorBoard output that shows the mean_squared_error op. The image also shows the additional loss function, which I had left out to simplify my problem; that loss function works well. The arrow from the mean_squared_error function to the gradient computation is missing because of the issue. I hope this gives a better overview.