I have a huge dataset (about 8.5M records) on a ".csv" file (it uses pipes instead of commas), I got no idea what is its encoding, since I live in Mexico and has accents (á é...) I assume its either latin or iso-8859-1.

When I try to import the file to a DataFrame using pandas

bmc=pd.read_csv('file.csv', sep='|',

error_bad_lines=False, encoding='iso-8859-1')

It reads nothing:

ÿþF Unnamed: 1 Unnamed: 2 Unnamed: 3 Unnamed: 4

0 NaN NaN NaN NaN NaN

1 NaN NaN NaN NaN NaN

2 NaN NaN NaN NaN NaN

If I don't place iso-8859-1 or latin, I got the error:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xff in position 0: invalid start byte

So, to encode the file to utf-8, I open the file in Notepad++ which can read huge files, I mannualy delete the ÿþ at the start of the file, then change the encoding to utf-8 and save as a new file.

Notepad++ says the file encoding is: UCS-2 LE BOM

The filesize goes from 1.8Mb to about 0.9Mb, now I can open this file with pandas without problem.

So I think converting to utf-8 should be part of my preprocessing.

I used this solution: How to convert a file to utf-8 in Python? and created a function to convert several files:

BLOCKSIZE = 1048576 # or some other, desired size in bytes

def convert_utf8(sourceFileName, targetFileName, sourceEncoding='iso-8859-1'):

with codecs.open(sourceFileName, "r", sourceEncoding) as sourceFile:

with codecs.open(targetFileName, "w", "utf-8") as targetFile:

while True:

contents = sourceFile.read(BLOCKSIZE)

if not contents:

break

targetFile.write(contents)

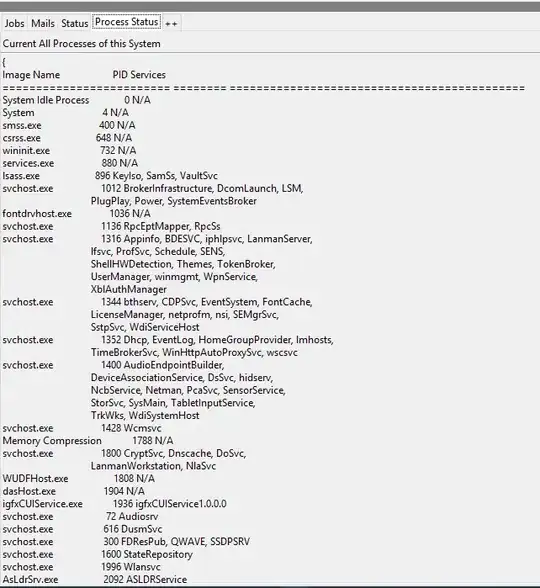

Now, the problem is, that when the file is written it adds a NULL character after every valid character, let me show it in the editor:

This file, of course, doesn't work in Pandas. So far, I have solved my problem using Notepad++, but of course there must be a better way, a way that I don't have to rely on other tools.