As an aside, you can extract the authors' contact e-mail addresses (the same ones you get by clicking) from a JSON-like string in one of the page's scripts:

    import json

    from selenium import webdriver
    from selenium.webdriver.common.by import By

    d = webdriver.Chrome()
    d.get('https://www.sciencedirect.com/science/article/pii/S1001841718305011#!')

    # The article metadata sits as a JSON-like string inside a <script data-iso-key> tag
    script = d.find_element(By.CSS_SELECTOR, 'script[data-iso-key]').get_attribute('innerHTML')
    # Quote bare booleans before parsing
    script = script.replace(':false', ':"false"').replace(':true', ':"true"')
    data = json.loads(script)

    authors = data['authors']['content'][0]['$$']
    # Authors with exactly four child entries carry a mailto: link in the last one
    emails = [author['$$'][3]['$']['href'].replace('mailto:', '') for author in authors if len(author['$$']) == 4]
    print(emails)

    d.quit()
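The positional lookup above (`author['$$'][3]`, only for authors with four children) is fragile if the page layout shifts. A minimal sketch of a more tolerant alternative is to walk the parsed structure and collect every `mailto:` link. It assumes only what the snippet above relies on: `'$$'` holds a list of child nodes and `'$'` holds an attribute dict. The helper name `collect_emails` and the sample data are made up for illustration and are not taken from the real page.

```python
def collect_emails(node):
    """Return e-mail addresses from every 'mailto:' href found under node."""
    found = []
    if isinstance(node, dict):
        attrs = node.get('$')
        if isinstance(attrs, dict):
            href = attrs.get('href', '')
            if isinstance(href, str) and href.startswith('mailto:'):
                found.append(href[len('mailto:'):])
        # Recurse into child nodes (assumed to live under '$$')
        for child in node.get('$$') or []:
            found.extend(collect_emails(child))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_emails(item))
    return found


# Illustrative data only: the shape mimics the '$' / '$$' nesting used above
sample_authors = [
    {'$$': [
        {'$': {'id': 'a1'}},
        {'$$': []},
        {'$': {'refid': 'aff1'}},
        {'$': {'href': 'mailto:a.author@example.org'}},
    ]},
    {'$$': [
        {'$': {'id': 'a2'}},
        {'$$': []},
    ]},
]

print(collect_emails(sample_authors))  # ['a.author@example.org']
```

With the real page you would pass `data['authors']['content'][0]['$$']` instead of `sample_authors`.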

You can also use requests to get all the recommendations info:

    import requests

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36'
    }

    data = requests.get('https://www.sciencedirect.com/sdfe/arp/pii/S1001841718305011/recommendations?creditCardPurchaseAllowed=true&preventTransactionalAccess=false&preventDocumentDelivery=true', headers=headers).json()
    print(data)
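If you want the same request for other articles, the URL can be built from the article's PII. This is only a sketch: the function name `recommendations_url` is hypothetical, and it assumes the endpoint pattern and query parameters seen for this one article carry over to others, which has not been checked here.

```python
from urllib.parse import urlencode


def recommendations_url(pii):
    """Build the recommendations URL for a given article PII."""
    # Query parameters copied from the request above
    params = {
        'creditCardPurchaseAllowed': 'true',
        'preventTransactionalAccess': 'false',
        'preventDocumentDelivery': 'true',
    }
    return f'https://www.sciencedirect.com/sdfe/arp/pii/{pii}/recommendations?{urlencode(params)}'


print(recommendations_url('S1001841718305011'))
```

The result can be passed straight to `requests.get(..., headers=headers)` as above.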

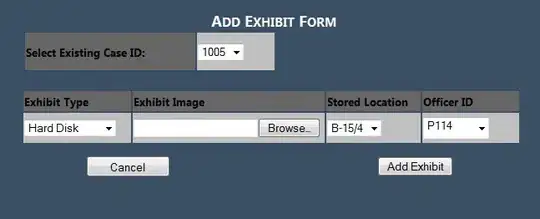

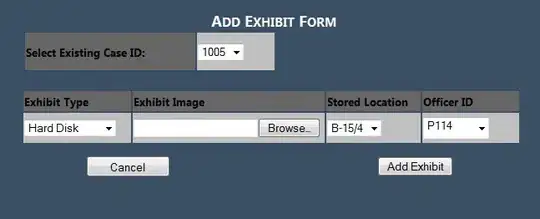

Sample view: