I've looked at the Keras example of a custom loss layer, demonstrated with a Variational Autoencoder (VAE). The example uses only one loss layer, although the VAE's objective consists of two different parts: the reconstruction loss and the KL divergence. I'd like to plot how these two parts evolve during training, so I split the single custom loss into two loss layers:

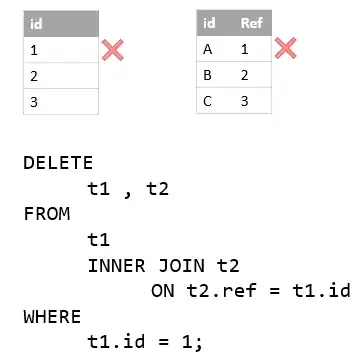
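To make the split concrete, here is a minimal framework-independent sketch of the two terms, computed separately so that each could be logged on its own. It assumes the usual formulation (binary cross-entropy summed over input dimensions for reconstruction, and the closed-form KL divergence of a diagonal Gaussian from a standard normal). The function names are hypothetical and this is not the code from the Keras example, which works on backend tensors and averages over the batch.

```python
import math


def reconstruction_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy summed over the input dimensions."""
    total = 0.0
    for xi, pi in zip(x, x_hat):
        pi = min(max(pi, eps), 1.0 - eps)  # clip predictions to avoid log(0)
        total -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    return total


def kl_divergence(z_mean, z_log_var):
    """KL(N(mu, sigma^2) || N(0, 1)) for a diagonal Gaussian, summed over latent dims."""
    return -0.5 * sum(
        1.0 + lv - mu ** 2 - math.exp(lv) for mu, lv in zip(z_mean, z_log_var)
    )


def vae_loss_parts(x, x_hat, z_mean, z_log_var):
    """Return both parts separately plus their sum (the standard VAE objective)."""
    recon = reconstruction_loss(x, x_hat)
    kl = kl_divergence(z_mean, z_log_var)
    return {"reconstruction": recon, "kl": kl, "total": recon + kl}


# One toy sample: 2 input dims, 2 latent dims.
parts = vae_loss_parts([1.0, 0.0], [0.9, 0.2], [0.0, 0.5], [0.0, 0.0])
print(parts)
```

Keeping the terms as separate values is what makes it possible to plot them individually; only their sum is what the optimizer needs.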

Keras Example Model:

My Model:

Unfortunately, Keras still outputs only a single loss value for my multi-loss model, as can be seen in my Jupyter Notebook, where I've implemented both approaches.

Does anyone know how to get the individual value of each loss that was added via `add_loss`?

Additionally, how does Keras compute the single reported loss value when `add_loss` is called multiple times (mean, sum, ...)?