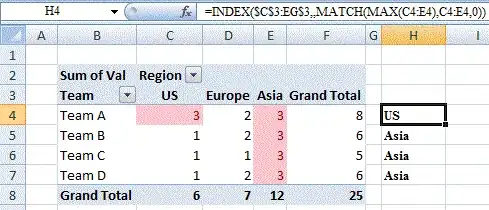

The title might seem a bit vague, so here are the details of my problem. I have a web application that consists of 3 layers: an Angular front-end project, a .NET Core API gateway (middleware) project, and a .NET project as the back-end layer. All 3 projects are separate from each other and work fine. My problem is that most of the endpoints in my project complete requests within milliseconds and return data as expected, but a few of them seem to wait an unreasonably long time, and this happens randomly. The Chrome response timing output for one such GET request is shown below.

Chrome timing details

As you can see above, most of the time here is spent waiting. I also tracked these requests in Stackify, and the back-end project appears to complete them within milliseconds. The Stackify output is shown below.

As shown above, my back-end took at most 835 ms and returned the appropriate response. Lastly, the logs that my gateway produces in Kibana contain the following timing entry, which also shows that the request took ~8 seconds.

To sum up, the response is held up at my API gateway project (.NET Core), and I have no clue why this happens randomly on some endpoints. Note that the gateway only forwards the request to the related back-end endpoint and performs no other operations. Any help or suggestions for understanding and fixing this issue would be appreciated.
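In case it helps anyone reproduce the Chrome breakdown from code, here is a minimal sketch of how the phases could be derived from the browser's Resource Timing fields. The `TimingSample` and `TimingBreakdown` interfaces and the `breakdown` function are names I made up for illustration, and the sample numbers are hypothetical, not my actual measurements. In a browser the input would come from `performance.getEntriesByType("resource")`; for cross-origin requests the detailed fields are reported as zero unless the server sends a `Timing-Allow-Origin` header.

```typescript
// Subset of the PerformanceResourceTiming fields used here (all in ms).
interface TimingSample {
  startTime: number;     // fetch started
  requestStart: number;  // browser began sending the request
  responseStart: number; // first byte of the response arrived
  responseEnd: number;   // last byte of the response arrived
}

// Hypothetical result shape, for illustration only.
interface TimingBreakdown {
  beforeRequest: number; // queueing, DNS, connect, TLS
  waiting: number;       // request sent until first response byte
  download: number;      // first byte until last byte
  total: number;
}

function breakdown(t: TimingSample): TimingBreakdown {
  return {
    beforeRequest: t.requestStart - t.startTime,
    waiting: t.responseStart - t.requestStart,
    download: t.responseEnd - t.responseStart,
    total: t.responseEnd - t.startTime,
  };
}

// Hypothetical numbers shaped like the slow case: almost all time is waiting.
const sample: TimingSample = {
  startTime: 0,
  requestStart: 5,
  responseStart: 8005,
  responseEnd: 8010,
};

const result = breakdown(sample);
console.log(result);
```

A large `waiting` value with small `beforeRequest` and `download` values would match what I see in Chrome: the time is spent somewhere between the request leaving the browser and the first byte coming back, which includes the gateway.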