I have already resolved several others using Application.Index with Application.WorksheetFunction.Match and reduced time to perform from about 7-8 seconds to milliseconds. But I feel there is still room for improvement.

Should I use an array with Index and Match?

I was also told to use Scripting.Dictionary, but I am looking for someone who can demonstrate how to do it the right way in this scenario. Because in my head I have to populate the dictionary with a loop before I can even use that, so wont it be similar in terms of speed?

'Production Quantity for Dashboard

For i = 2 To Total_rows_Prod

For j = 2 To Total_rows_Dash

If ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 5) = ThisWorkbook.Worksheets("Dashboard").Cells(j, 1) Then

ThisWorkbook.Worksheets("Dashboard").Cells(j, 4) = ThisWorkbook.Worksheets("Dashboard").Cells(j, 4) + ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 31) / ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 4)

End If

Next j

Next i

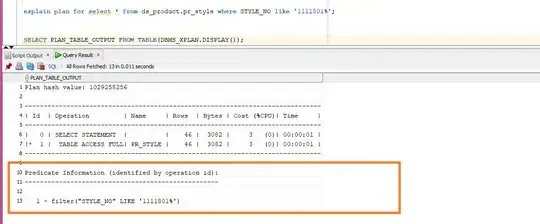

After doing some bottleneck testing, using a nested for-next loop is known to be extremely slow and bad practice as shown below (run time of code is shown at row 10):

However, when using Index and Match while only using 1 for-next loop as shown in the code below:

'Production Quantity for Dashboard

For i = 2 To Total_rows_Prod

m = Application.Match(ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 5), ThisWorkbook.Worksheets("Dashboard").Range("A:A"), 0)

If Not IsError(m) Then

ThisWorkbook.Worksheets("Dashboard").Cells(Application.WorksheetFunction.Match(ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 5), ThisWorkbook.Worksheets("Dashboard").Range("A:A"), 0), 4) = ThisWorkbook.Worksheets("Dashboard").Cells(Application.WorksheetFunction.Match(ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 5), ThisWorkbook.Worksheets("Dashboard").Range("A:A"), 0), 4) + ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 31) / ThisWorkbook.Worksheets("Prod. Qty.").Cells(i, 4)

End If

Next i

The run time would be negligible as shown below (still at row 10):

The final time (as of 1/21/19) I was able to make everything run with Index and Match replacements was 2 seconds:

On a slower netbook running a Pentium Atom processor, it takes 26 seconds to perform the same code (13 times longer). So I am wondering if there is way to bring down that 26 seconds.

Any improvements that would make the time to perform optimally minimized would be great. Hopefully making it the fastest possible.

Update as of 2/23/19 (Code Revision):

Dim rangeProdQtySum As Range, rangeProdQtyPass As Range

Set rangeProdQtySum = ThisWorkbook.Worksheets("Prod. Qty.").Range("AE1:AE" & Total_rows_Prod)

Set rangeProdQtyPass = ThisWorkbook.Worksheets("Prod. Qty.").Range("D1:D" & Total_rows_Prod)

ReDim arrProdQtySum(1 To Total_rows_Prod) As Variant

ReDim arrProdQtyPass(1 To Total_rows_Prod) As Variant

arrProdQtySum = rangeProdQtySum

arrProdQtyPass = rangeProdQtyPass

ReDim arrProdQtyDash(1 To Total_rows_Dash) As Variant

'Production Quantity for Dashboard

For i = 2 To Total_rows_Prod

m = Application.Match(arrProdQtyJTJN(i, 1), Application.Index(Application.WorksheetFunction.Transpose(arrDashInfo), 0, 1), 0)

If Not IsError(m) Then

arrProdQtyDash(Application.Match(arrProdQtyJTJN(i, 1), Application.Index(Application.WorksheetFunction.Transpose(arrDashInfo), 0, 1), 0)) = arrProdQtyDash(Application.Match(arrProdQtyJTJN(i, 1), Application.Index(Application.WorksheetFunction.Transpose(arrDashInfo), 0, 1), 0)) + arrProdQtySum(i, 1) / arrProdQtyPass(i, 1)

End If

Next i

arrProdQtyDash(1) = "Production Quantity"

ThisWorkbook.Worksheets("Dashboard").Range("D1:D" & Total_rows_Dash) = Application.WorksheetFunction.Transpose(arrProdQtyDash)

With arrays, minimizing usage of for-next loops, use index and match with combinations to arrays, and memorization of variables (assigning references to a variable), the timer are as follows:

The same set of codes that I tried on the slower pentium atom computer takes 10 seconds to perform the same code as shown below:

This is about 2.6x faster than simply using the index and match codes. I am wondering if there are any more improvements to be suggested for the code snippet displayed here because it is the bottleneck (takes 5 seconds to perform on the pentium atom or about 50% of the processing time).