I was training an LSTM network in tensorflow. My model has the following configuration:

- Time steps: 1700

- Cell size: 120

- Number of input features (x): 512

- Batch size: 34

- Optimizer: AdamOptimizer with learning rate 0.01

- Number of epochs: 20

I have a GTX 1080 Ti, and my TensorFlow version is 1.8.

Additionally, I have set the graph-level random seed through tf.set_random_seed(mseed), and I have passed a seed to the initializer of every trainable variable, so that I should get the same results across multiple runs.

After training the model multiple times, each time for 20 epochs, I found that every run produces exactly the same loss for the first several epochs (7, 8 or 9), and then the losses start to differ. I am wondering why this occurs and, if possible, how one can fully reproduce the results of any model.

Additionally, in my case I am feeding the whole data set during every iteration. That is, I am doing full backpropagation through time (BPTT), not truncated BPTT. In other words, I have 20 iterations in total, which is equal to the number of epochs.

The following figure demonstrates my problem. Each row corresponds to one epoch, and each column corresponds to a different run (I included only 2 columns/runs to demonstrate my point).

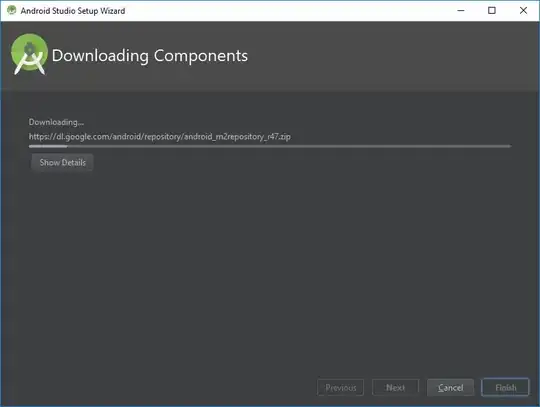
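To pin down the exact epoch at which two runs part ways, the per-epoch losses can be compared programmatically instead of by eye. The following is only a minimal sketch: `first_divergence` is a hypothetical helper name, and the loss lists are made-up placeholder values, not the numbers from the figure above.

```python
import math


def first_divergence(losses_a, losses_b, rel_tol=0.0, abs_tol=0.0):
    """Return the 1-based epoch at which two loss histories first differ.

    With the default tolerances of 0.0 the comparison is exact, which matches
    the "same exact loss" check described in the question. Returns None if the
    two histories agree for every epoch they have in common.
    """
    # zip() stops at the shorter history, so extra epochs in one run are ignored.
    for epoch, (a, b) in enumerate(zip(losses_a, losses_b), start=1):
        if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            return epoch
    return None


# Placeholder loss values for two hypothetical runs (one value per epoch).
run_1 = [0.693, 0.512, 0.431, 0.402]
run_2 = [0.693, 0.512, 0.433, 0.399]

print(first_divergence(run_1, run_2))  # prints 3
```

Passing a small `abs_tol` (for example `1e-6`) would instead report the first epoch where the runs differ by more than that amount, which helps separate tiny rounding differences from a real divergence.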

Finally, when I replace the input features with new features of dimension 100, I get better results, as shown in the following image:

Therefore, I am not sure whether this is a hardware issue.

Any help is much appreciated!!