We are writing a performance-critical application with three main parameters: N_steps (about 10000), N_nodes (anywhere from 20 to 5000), and N_size (roughly 1k to 10k).

The algorithm essentially has this form:

    for (int i = 0; i < N_steps; i++)
    {
        serial_function(i);
        parallel_function(i, N_nodes);
    }

where

    void parallel_function(int i, int N_nodes)
    {
        #pragma omp parallel for schedule(static) num_threads(threadNum)
        for (int j = 0; j < N_nodes; j++)
        {
            Local_parallel_function(i, j); // complexity proportional to N_size
        }
    }

Local_parallel_function performs linear algebra and typically takes about 0.01-0.04 seconds per call, sometimes more; this execution time should be fairly stable within the loop. Unfortunately, the problem is sequential in nature, so I cannot restructure the outer loop.
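For anyone who wants to reproduce the pattern, a minimal stand-in could look like the sketch below. It is only an assumption-laden skeleton: `local_parallel_function` here is a placeholder O(N_size) loop, not the real linear algebra (which is far heavier per call), and `run_steps` and `thread_num` are hypothetical names introduced for illustration. The loop index is a signed `int`, which is what the OpenMP 2.0 support in the Microsoft compiler expects.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <cstddef>
#include <vector>

// Placeholder workload (assumption): O(N_size) arithmetic on one node's buffer.
// The real function does linear algebra and costs much more per call.
void local_parallel_function(int i, std::vector<double>& node)
{
    for (std::size_t k = 0; k < node.size(); ++k)
        node[k] = std::sqrt(node[k] + i + 1.0);
}

// Serial outer loop over steps, parallel inner loop over nodes.
// Returns the elapsed wall-clock time in seconds.
double run_steps(std::vector<std::vector<double>>& nodes, int n_steps, int thread_num)
{
    assert(thread_num > 0);
    const int n_nodes = static_cast<int>(nodes.size());

    const auto t0 = std::chrono::steady_clock::now();
    for (int i = 0; i < n_steps; ++i)
    {
        // serial_function(i) would run here, on one thread.

        // A parallel region is entered and left once per step,
        // so the implicit barrier at its end is hit n_steps times.
        #pragma omp parallel for schedule(static) num_threads(thread_num)
        for (int j = 0; j < n_nodes; ++j)
            local_parallel_function(i, nodes[j]);
    }
    const auto t1 = std::chrono::steady_clock::now();

    return std::chrono::duration<double>(t1 - t0).count();
}
```

Calling `run_steps` with, say, 20 buffers of 10000 doubles and then with 5000 buffers, for several values of `thread_num`, should make it possible to compare how the total time scales. If the file is compiled without OpenMP enabled, the pragma is ignored and the loop simply runs serially.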

While profiling, I noticed that a large share of the time is spent in the NtYieldExecution function (up to 20% when I use Hyper-Threading on 4 cores).

I ran some tests varying the parameters and found that this percentage:

Increases with the number of threads

Decreases as N_nodes and N_size increase.

Most likely the parallel loop is currently not large enough for OpenMP, and making it larger, or making the function more computationally expensive, helps reduce this overhead.
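A rough back-of-the-envelope sketch of why a small loop could hurt with schedule(static): if every iteration costs about the same (an assumption, although the run time is reported as fairly stable), the busiest thread runs at least ceil(N_nodes / threads) iterations and all other threads wait for it at the implicit barrier. The helper name `static_idle_fraction` is hypothetical, and the model ignores thread start-up cost, memory bandwidth, and Hyper-Threading effects.

```cpp
#include <cassert>

// Hypothetical helper: estimated fraction of total thread-time spent waiting
// at the end of a schedule(static) loop, assuming equal-cost iterations and
// a busiest thread that runs ceil(n_nodes / n_threads) of them.
double static_idle_fraction(int n_nodes, int n_threads)
{
    assert(n_nodes > 0 && n_threads > 0);

    // Iterations executed by the busiest thread (integer ceiling division).
    const int max_iters = (n_nodes + n_threads - 1) / n_threads;

    // Thread-time available while the busiest thread is still working.
    const double capacity = static_cast<double>(max_iters) * n_threads;

    // Whatever is not covered by real iterations is waiting time.
    return (capacity - n_nodes) / capacity;
}
```

Under these assumptions, `static_idle_fraction(20, 8)` is 4/24, roughly 17%, while `static_idle_fraction(20, 4)` and `static_idle_fraction(1000, 8)` are both 0. That matches the direction of the two observations above, but it is only a model and not a measurement.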

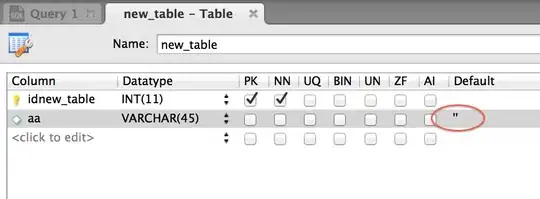

To try to have a better insight, I downloaded the Intel Profiler, and I obtained the following results:

The region in red are the spin times, and the threads on top are the one spawned by OpenMP.

Any suggestions on how to manage and reduce this effect?

I use Windows 10, Visual Studio 15.9.5, and OpenMP. Unfortunately, the Intel compiler does not seem to be able to compile one of the libraries we depend on, so I am stuck with the Microsoft one.