I'm trying to implement a light effect on a sphere. The idea is that the sphere should appear to emit light volumetrically, in the direction of its normal vectors, but I don't know exactly how to do it.

In my fragment shader I implement a “radial” blur. It works fine, but only when the camera is facing the sphere head-on.

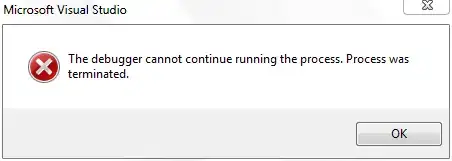

When it isn't, this happens:

I'm trying to follow this example, without success:

https://medium.com/@andrew_b_berg/volumetric-light-scattering-in-three-js-6e1850680a41

This is what I'm trying to get:

This is my code:

```java
import controlP5.*;
import peasy.*;

ControlP5 cp5;

PGraphics canvas;           // offscreen render of the scene
PGraphics verticalBlurPass; // offscreen buffer the blur shader draws into
PShader blurFilter;
PeasyCam cam;

void setup() {
  // size() has to be the first call in setup(), before PeasyCam touches the renderer
  size(1000, 1000, P3D);
  cam = new PeasyCam(this, 1400);

  canvas = createGraphics(width, height, P3D);
  verticalBlurPass = createGraphics(width, height, P3D);
  verticalBlurPass.noSmooth();

  blurFilter = loadShader("bloomFrag.glsl", "blurVert.glsl");
}

void draw() {
  background(0);

  // 1. render the scene offscreen with the current PeasyCam view
  canvas.beginDraw();
  render(canvas);
  canvas.endDraw();

  // 2. blur pass: draw the scene image through the shader
  verticalBlurPass.beginDraw();
  verticalBlurPass.shader(blurFilter);
  verticalBlurPass.image(canvas, 0, 0);
  //render(verticalBlurPass);
  verticalBlurPass.endDraw();

  // 3. show the result in screen space, unaffected by the camera
  cam.beginHUD();
  image(verticalBlurPass, 0, 0);
  cam.endHUD();

  println(frameRate);
}

void render(PGraphics pg) {
  cam.getState().apply(pg);
  pg.background(0, 50);

  // draw through pg (not the global canvas) so render() works on any buffer
  pg.noStroke();
  pg.fill(255);
  pg.sphere(100);
}
```
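One thing worth checking: `lightPosition` defaults to `vec2(0, 0)` in the fragment shader and the sketch never sets it, so the streaks always radiate from that one fixed texture-space point, wherever the sphere actually lands on screen (for example after panning, or for a sphere that is not at the camera's look-at point). A minimal sketch of feeding it the sphere's projected center instead, assuming the sphere sits at the world origin as in `render()`: right after `render(canvas)`, and before `canvas.endDraw()`, read `canvas.screenX(0, 0, 0)` and `canvas.screenY(0, 0, 0)`, convert that pixel position to 0..1 texture coordinates, and pass it with `blurFilter.set("lightPosition", u, v)`. The conversion is written as plain Java so it can be checked outside Processing; the class name `LightPos` is hypothetical, and in a sketch it is simplest to copy the method body into an ordinary function. The y flip assumes the offscreen texture is stored bottom-up (the usual OpenGL convention); if the rays come out mirrored vertically, pass `false`.

```java
// Hypothetical helper, not part of Processing or PeasyCam.
final class LightPos {

    /**
     * Converts a pixel position (as returned by PGraphics.screenX/screenY)
     * into the 0..1 texture coordinates the blur shader compares with TexCoord.xy.
     * flipY = true assumes the texture is stored bottom-up (OpenGL convention).
     */
    static float[] toTexCoord(float screenX, float screenY,
                              int width, int height, boolean flipY) {
        float u = screenX / width;
        float v = screenY / height;
        if (flipY) {
            v = 1.0f - v;
        }
        return new float[] { u, v };
    }

    // Wiring it into draw() in the sketch (Processing code, shown as comments):
    //   canvas.beginDraw();
    //   render(canvas);
    //   float sx = canvas.screenX(0, 0, 0);  // sphere center in canvas pixels
    //   float sy = canvas.screenY(0, 0, 0);
    //   canvas.endDraw();
    //   float[] lp = LightPos.toTexCoord(sx, sy, canvas.width, canvas.height, true);
    //   blurFilter.set("lightPosition", lp[0], lp[1]);

    public static void main(String[] args) {
        // Sphere projected to the exact center of a 1000x1000 canvas.
        float[] c = toTexCoord(500f, 500f, 1000, 1000, true);
        System.out.println(c[0] + ", " + c[1]); // prints "0.5, 0.5"
    }
}
```

Because the value is recomputed every frame from the same camera state used to draw the sphere, the blur center should follow the sphere however the view moves.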

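Vertex shader (`blurVert.glsl`):

```glsl
#version 150

uniform mat4 transform;  // Processing's model-view-projection matrix
uniform mat4 texMatrix;  // Processing's texture-coordinate matrix
uniform float u_time;

in vec4 position;
in vec3 normal;
in vec3 color;
in vec2 texCoord;

out vec3 Color;
out vec4 TexCoord;

void main() {
  Color = color;
  // same texture-coordinate transform as Processing's default texture shader
  TexCoord = texMatrix * vec4(texCoord, 1.0, 1.0);
  gl_Position = transform * position;
}
```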

Fragment shader (`bloomFrag.glsl`):

```glsl
#version 150

#define PROCESSING_TEXTURE_SHADER

#ifdef GL_ES
precision mediump float;
#endif

in vec4 TexCoord;
in vec3 Color;

uniform sampler2D texture;  // the image drawn with image(), bound by Processing
uniform vec2 resolution;    // screen resolution

uniform float u_time;
uniform float u_time2;
uniform float amt;
uniform float intensity;
uniform float x;
uniform float y;
uniform float noiseAmt;

// radial blur parameters
uniform vec2 lightPosition = vec2(0.0, 0.0); // blur center in texture space; stays (0, 0) unless set from the sketch
uniform float exposure = 0.09;
uniform float decay = 0.95;
uniform float density = 1.0;
uniform float weight = 1.0;
uniform int samples = 100;

const int MAX_SAMPLES = 100;

out vec4 fragColor;

void main() {
  vec2 texCoord = TexCoord.xy;

  // vector from this pixel to the light source in texture space,
  // divided by the number of samples and scaled by the density factor
  vec2 deltaTextCoord = texCoord - lightPosition;
  deltaTextCoord *= 1.0 / float(samples) * density;

  // initial sample
  vec4 color = texture(texture, texCoord);

  // illumination decay factor
  float illuminationDecay = 1.0;

  // evaluate the summation for `samples` iterations (at most MAX_SAMPLES)
  for (int i = 0; i < MAX_SAMPLES; i++) {
    // workaround for a dynamic number of loop iterations
    if (i == samples) {
      break;
    }

    // step the sample location along the ray towards the light
    texCoord -= deltaTextCoord;

    // sample at the new location and apply the weight/decay factors
    vec4 color2 = texture(texture, texCoord);
    color2 *= illuminationDecay * weight;

    // accumulate and update the exponential decay
    color += color2;
    illuminationDecay *= decay;
  }

  // output the final color with a further exposure scale
  fragColor = color * exposure;
}
```
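To see what the loop in `bloomFrag.glsl` computes, here is a CPU port in plain Java, meant as a sketch for checking the math offline rather than a replacement for the shader. Each output pixel is its own sample plus `samples` further samples taken on the straight line toward `lightPosition`, weighted by `weight * decay^i` and finally scaled by `exposure`. The names `RadialBlur`, `sample` and `shade` are made up for this example, the image is a single grayscale channel, and nearest-neighbour, clamp-to-edge sampling is an assumption (the GPU may filter linearly).

```java
import java.util.Arrays;

// Hypothetical CPU port of the fragment shader's main(), grayscale only.
final class RadialBlur {

    /** Nearest-neighbour lookup with (u, v) in 0..1, clamped to the image edge. */
    static float sample(float[][] img, float u, float v) {
        int h = img.length, w = img[0].length;
        int x = Math.min(w - 1, Math.max(0, (int) Math.floor(u * w)));
        int y = Math.min(h - 1, Math.max(0, (int) Math.floor(v * h)));
        return img[y][x];
    }

    /** Shades the pixel at (u, v) exactly like the shader loop does. */
    static float shade(float[][] img, float u, float v,
                       float lightU, float lightV,
                       int samples, float density, float weight,
                       float decay, float exposure) {
        // per-step offset: the full pixel-to-light vector / samples * density
        float du = (u - lightU) / samples * density;
        float dv = (v - lightV) / samples * density;

        float color = sample(img, u, v);   // initial sample
        float illuminationDecay = 1.0f;
        for (int i = 0; i < samples; i++) {
            u -= du;                       // step toward the light
            v -= dv;
            color += sample(img, u, v) * illuminationDecay * weight;
            illuminationDecay *= decay;
        }
        return color * exposure;
    }

    public static void main(String[] args) {
        float[][] white = new float[8][8];
        for (float[] row : white) {
            Arrays.fill(row, 1.0f);
        }
        // the shader's default uniforms: samples 100, density 1, weight 1, decay 0.95, exposure 0.09
        System.out.println(shade(white, 0.9f, 0.9f, 0.5f, 0.5f, 100, 1f, 1f, 0.95f, 0.09f));
    }
}
```

With density 1 the last sample lands on `lightPosition` itself, so the streaks always point at whatever that uniform holds, independent of where the sphere is drawn. On an all-white image every sample is 1, and the result is (1 + (1 - 0.95^100) / 0.05) * 0.09, roughly 1.88, so with these defaults bright regions saturate quickly.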

Any ideas? What am I doing wrong?