I am using the TensorFlow Object Detection API with faster_rcnn_inception_v2_coco as the pretrained model. I'm on Windows 10, with tensorflow-gpu 1.6 on an NVIDIA GeForce GTX 1080, CUDA 9.0 and cuDNN 7.0.

I'm trying to train a multi-class object detector on a custom dataset, but I'm seeing some weird behavior. I have 2 classes: Pistol and Knife (876 and 664 images respectively, all of similar size, from 360x200 to 640x360, and with similar aspect ratios). So I think the dataset is reasonably balanced. I split it into a train set (1386 images: 594 knife, 792 pistol) and a test set (154 images: 70 knife, 84 pistol).
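To put a number on "balanced", here is a minimal sketch that computes each class's share of the images in both splits. It uses only the counts quoted above; the names `counts` and `class_shares` are just illustrative, and it counts images, not bounding boxes, so it assumes each image belongs to exactly one class.

```python
# Image counts per class, taken from the split described above.
counts = {
    "train": {"knife": 594, "pistol": 792},
    "test": {"knife": 70, "pistol": 84},
}


def class_shares(split_counts):
    """Return each class's fraction of the images in one split."""
    total = sum(split_counts.values())
    return {name: n / total for name, n in split_counts.items()}


shares = {split: class_shares(c) for split, c in counts.items()}
for split, split_shares in shares.items():
    # Round only for display; the stored values keep full precision.
    print(split, {name: round(v, 3) for name, v in split_shares.items()})
```

Both splits come out at roughly 43-45% knife and 55-57% pistol, so the class ratio is close to the same in train and test.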

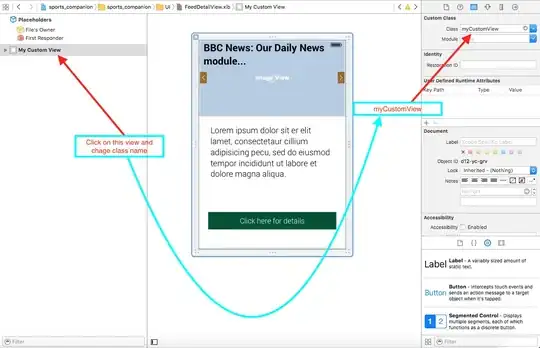

The network seems able to detect only one of the two objects with good accuracy, and which of the two classes it detects changes seemingly at random during training, even on the same image (for example: at step 10000 it detects only pistol, at step 20000 only knife, at step 30000 knife, at step 40000 pistol, at step 50000 knife, etc.), as shown in the image above.

Moreover, the loss looks weird, and the evaluation accuracy is never high for both classes at the same time.

During training, the loss seems to oscillate at every step.

Loss:

Total Loss:

From the mAP (image below) you can see that the two objects are never detected together at the same step:

If I train these two classes separately, I can achieve a decent 50-60% accuracy. If I train them together, the result is what you have seen.

Here you can find the generate_tfrecord.py and the model configuration file (which I changed to make it multi-class). The label map is the following:

    item {
      id: 1
      name: 'knife'
    }

    item {
      id: 2
      name: 'pistola'
    }
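One thing that can be checked independently of training is whether the class strings in the annotations match the `name` fields of the label map exactly. The following is only a sketch: it parses the label map text with a simple regex instead of the API's protobuf parser, and `annotation_classes` is a hypothetical list standing in for the class column of the annotation CSV (not copied from my generate_tfrecord.py).

```python
import re

# Label map text as given above.
LABEL_MAP_TEXT = """
item {
  id: 1
  name: 'knife'
}
item {
  id: 2
  name: 'pistola'
}
"""


def parse_label_map(text):
    """Return {class name: id} using a simple regex parse of the label map."""
    mapping = {}
    for body in re.findall(r"item\s*\{(.*?)\}", text, flags=re.S):
        id_match = re.search(r"id:\s*(\d+)", body)
        name_match = re.search(r"name:\s*['\"]([^'\"]+)['\"]", body)
        if id_match and name_match:
            mapping[name_match.group(1)] = int(id_match.group(1))
    return mapping


def missing_classes(annotation_classes, label_map):
    """Return annotation class strings that have no entry in the label map."""
    return sorted(set(annotation_classes) - set(label_map))


label_map = parse_label_map(LABEL_MAP_TEXT)

# Hypothetical values: replace with the class strings found in the annotations.
annotation_classes = ["knife", "pistola"]

print(label_map)
print(missing_classes(annotation_classes, label_map))
```

An empty list means every annotation class has an id in the label map. Any name that is listed (for example `pistol` versus `pistola`) would not map to an id.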

Any suggestions are welcome.

UPDATE: After 600k iterations, the loss is still oscillating. The current situation is the following: Loss, Total Loss, and mAP.

![Weird behavior in evaluation](../../images/3815640159.webp)