I was indeed making the mistake of not flattening the arrays, which in turn produced the error. Thank you for the fast response, STJ!

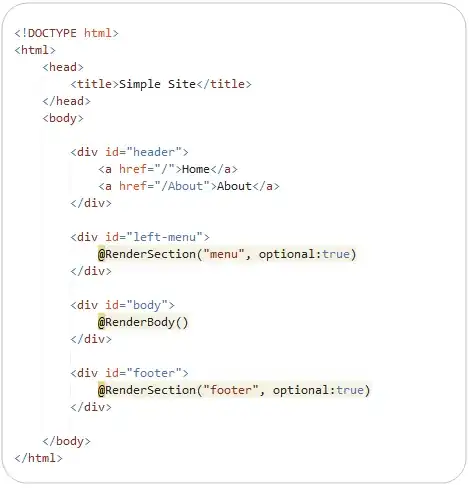

Here is an example of the working code:

    import numpy as np
    import gpflow  # GPflow 1.x API is used below (kern=, ARD=, compile())
    import matplotlib.pyplot as plt
    from matplotlib import cm
    from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection on older Matplotlib

    # num, f, offset, fontsize_title, fontsize_label, fontsize_ticks and the
    # utils helpers are defined elsewhere and are not shown here.

    # Generate data
    bound = 3.
    x1 = np.linspace(-bound, bound, num)
    x2 = np.linspace(-bound, bound, num)
    x1_mesh, x2_mesh = np.meshgrid(x1, x2)
    # Flatten the grid into one row per point: shape (num * num, 2)
    X = np.dstack([x1_mesh, x2_mesh]).reshape(-1, 2)
    z = f(x1_mesh, x2_mesh)  # evaluation of the function on the grid

    # Draw samples from feature vectors and function by a given index
    size = 2
    np.random.seed(1991)
    index = np.random.choice(range(len(x1)), size=(size, X.ndim), replace=False)
    samples = utils.sampleFeature([x1, x2], index)
    X1_sample = samples[0]
    X2_sample = samples[1]
    X_sample = np.column_stack((X1_sample, X2_sample))
    Y_sample = utils.samplefromFunc(f=z, ind=index)

    # Change noise parameter
    sigma_n = 0.0

    # Construct models with initial guess
    k = gpflow.kernels.RBF(2, active_dims=[0, 1], lengthscales=1.0, ARD=True)
    m = gpflow.models.GPR(X_sample, Y_sample, kern=k)
    m.likelihood.variance = sigma_n
    m.compile()

    # Predict on the flattened grid, then reshape back for plotting
    mean, var = m.predict_y(X)
    mean_square = mean.reshape(x1_mesh.shape)  # Shape: (num, num)
    var_square = var.reshape(x1_mesh.shape)    # Shape: (num, num)

    # Plot mean
    fig = plt.figure(figsize=(16, 12))
    ax = plt.axes(projection='3d')
    ax.plot_surface(x1_mesh, x2_mesh, mean_square, cmap=cm.viridis,
                    linewidth=0.5, antialiased=True, alpha=0.8)
    cbar = ax.contourf(x1_mesh, x2_mesh, mean_square, zdir='z', offset=offset,
                       cmap=cm.viridis, antialiased=True)
    ax.scatter3D(X1_sample, X2_sample, offset, marker='o', edgecolors='k',
                 color='r', s=150)
    fig.colorbar(cbar)

    # label1 is used instead of label, which newer Matplotlib versions removed
    for t in ax.zaxis.get_major_ticks():
        t.label1.set_fontsize(fontsize_ticks)

    # Raw strings keep the LaTeX backslashes from being read as escapes
    ax.set_title(r"$\mu(x_1,x_2)$", fontsize=fontsize_title)
    ax.set_xlabel("\n$x_1$", fontsize=fontsize_label)
    ax.set_ylabel("\n$x_2$", fontsize=fontsize_label)
    ax.set_zlabel('\n\n' + r'$\mu(x_1,x_2)$', fontsize=fontsize_label)
    plt.xticks(fontsize=fontsize_ticks)
    plt.yticks(fontsize=fontsize_ticks)
    plt.xlim(left=-bound, right=bound)
    plt.ylim(bottom=-bound, top=bound)
    ax.set_zlim3d(offset, np.max(z))

This produces the plot shown above (the red dots are the sample points drawn from the function). Note: the code has not been refactored whatsoever :)
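For anyone hitting the same problem, the flattening step can be looked at in isolation. The following is only a minimal sketch using NumPy alone: `num = 5` is an assumed grid size, and the `mean` array is a made-up stand-in for the model's prediction (it is not GPflow output), chosen so the round trip is easy to check.

```python
import numpy as np

num = 5        # assumed grid resolution, kept small for illustration
bound = 3.0

x1 = np.linspace(-bound, bound, num)
x2 = np.linspace(-bound, bound, num)
x1_mesh, x2_mesh = np.meshgrid(x1, x2)   # each has shape (num, num)

# Flatten the grid so that every row is one (x1, x2) point: shape (num * num, 2)
X = np.dstack([x1_mesh, x2_mesh]).reshape(-1, 2)

# Hypothetical stand-in for a predictive mean: one value per row of X
mean = (X[:, 0] + 10.0 * X[:, 1]).reshape(-1, 1)   # shape (num * num, 1)

# Reshape back to the grid layout so it can be passed to plot_surface / contourf
mean_square = mean.reshape(x1_mesh.shape)          # shape (num, num)

print(X.shape, mean.shape, mean_square.shape)
```

The point is that the model is fed a two-column array of points, and only the result is reshaped back to `x1_mesh.shape`; because both reshapes use the same row-major order, `mean_square[i, j]` lines up with `(x1_mesh[i, j], x2_mesh[i, j])`.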